WebLLM - Alternatives & Competitors

High-Performance In-Browser LLM Inference Engine

WebLLM enables running large language models (LLMs) directly within a web browser using WebGPU for hardware acceleration, reducing server costs and enhancing privacy.

Ranked by Relevance

1. BrowserAI: Run Local LLMs Inside Your Browser

BrowserAI is an open-source library enabling developers to run local Large Language Models (LLMs) directly within a user's browser, offering a privacy-focused AI solution with zero infrastructure costs.

- Free

2. OneLLM: Fine-tune, evaluate, and deploy your next LLM without code.

OneLLM is a no-code platform enabling users to fine-tune, evaluate, and deploy Large Language Models (LLMs) efficiently. It streamlines LLM development by letting users create datasets, integrate API keys, run fine-tuning jobs, and compare model performance.

- Freemium

- From $19

3. LLM API: Access 200+ AI Models with One Unified API

LLM API provides access to over 200 AI models from providers like OpenAI, Anthropic, Google, and Meta through a single API, aimed at businesses and developers who need to scale their usage.

- Usage Based

4. Avian API: Fastest, production grade API for Open Source LLMs

Avian API is an enterprise-grade language model inference platform offering state-of-the-art LLMs with superior speed and competitive pricing, powered by Meta's Llama models and Nvidia H200 SXM technology.

- Usage Based

- From $3

5. BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

6. LlamaEdge: The easiest, smallest and fastest local LLM runtime and API server.

LlamaEdge is a lightweight and fast local LLM runtime and API server, powered by Rust & WasmEdge, designed for creating cross-platform LLM agents and web services.

- Free

7. OpenTools: The API for Enhanced LLM Tool Use

OpenTools provides a unified API enabling developers to connect Large Language Models (LLMs) with a diverse ecosystem of tools, simplifying integration and management.

- Usage Based

8. Ollama: Get up and running with large language models locally

Ollama is a platform that enables users to run powerful language models like Llama 3.3, DeepSeek-R1, Phi-4, Mistral, and Gemma 2 on their local machines.

- Free

9. Open Source AI Gateway: Manage multiple LLM providers with built-in failover, guardrails, caching, and monitoring.

Open Source AI Gateway provides developers with a robust, production-ready solution to manage multiple LLM providers like OpenAI, Anthropic, and Gemini. It offers features like smart failover, caching, rate limiting, and monitoring for enhanced reliability and cost savings.

- Free

10. Allapi.ai: Experience Advanced AI API Solutions for Web & Mobile Apps

Allapi.ai is an AI app development platform providing a unified API to access multiple AI models (like GPT-4, Claude 3, Gemini 1.5 Pro) and plugins, simplifying integration for developers and startups.

- Free Trial

11. neutrino AI: Multi-model AI Infrastructure for Optimal LLM Performance

Neutrino AI provides multi-model AI infrastructure to optimize Large Language Model (LLM) performance for applications. It offers tools for evaluation, intelligent routing, and observability to enhance quality, manage costs, and ensure scalability.

- Usage Based

12. lm-studio.me: Local LLM Running & Download Platform

LM Studio is a user-friendly desktop application that allows users to run various large language models (LLMs) locally and offline, including Llama 2, PN3, Falcon, Mistral, StarCoder, and Gemma models from Hugging Face.

- Free

-

13

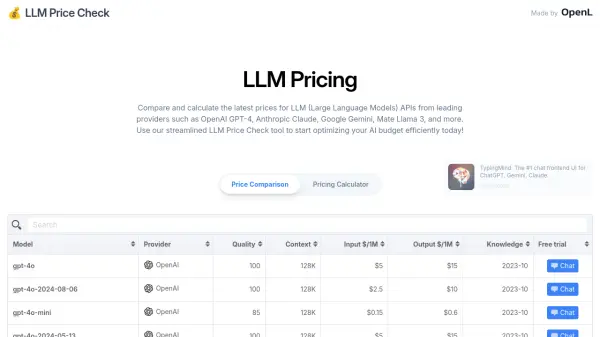

LLM Price Check Compare LLM Prices Instantly

LLM Price Check Compare LLM Prices InstantlyLLM Price Check allows users to compare and calculate prices for Large Language Model (LLM) APIs from providers like OpenAI, Anthropic, Google, and more. Optimize your AI budget efficiently.

- Free

14. FriendliAI: Accelerate Generative AI Inference

FriendliAI provides a high-performance platform for accelerating generative AI inference, enabling fast, cost-effective, and reliable deployment and serving of Large Language Models (LLMs).

- Usage Based

-

15

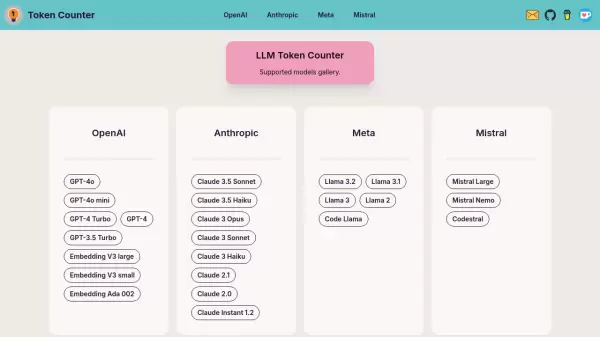

LLM Token Counter Secure client-side token counting for popular language models

LLM Token Counter Secure client-side token counting for popular language modelsA sophisticated token counting tool that helps users manage token limits across multiple language models including GPT-4, Claude-3, and Llama-3, with client-side processing for maximum security.

- Free

16. Browser Use: Enable AI to control your browser

Browser Use empowers AI agents to interact with websites by extracting interactive elements, making web automation seamless. It offers advanced features like multi-tab management and vision + HTML extraction.

- Freemium

- From $30

17. Laminar: The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25

18. LLM Optimize: Rank Higher in AI Engine Recommendations

LLM Optimize provides professional website audits to help you rank higher in AI-generated answers from tools like ChatGPT and Google's AI Overviews, outranking competitors with tailored, actionable recommendations.

- Paid

19. docs.litellm.ai: Unified Interface for Accessing 100+ LLMs

LiteLLM provides a simplified and standardized way to interact with over 100 large language models (LLMs) using a consistent OpenAI-compatible input/output format.

- Free

20. NLKit: Premium Toolkit To Ship AI Features Faster

NLKit offers open-source JavaScript libraries for developers to embed conversational AI assistants into web applications with various backend integrations.

- Freemium

21. LLM Explorer: Discover and Compare Open-Source Language Models

LLM Explorer is a comprehensive platform for discovering, comparing, and accessing over 46,000 open-source Large Language Models (LLMs) and Small Language Models (SLMs).

- Free

22. Kalavai: Turn your devices into a scalable LLM platform

Kalavai offers a platform for deploying Large Language Models (LLMs) across various devices, scaling from personal laptops to full production environments. It simplifies LLM deployment and experimentation.

- Paid

- From $29

23. Browser Copilot: Your AI Copilot Across the Web

Browser Copilot is an AI-powered browser extension that understands website content and helps users automate tasks, providing real-time assistance across any webpage with features like email management, custom workflows, and document analysis.

- Paid

- From $19

24. anythingllm.com: The all-in-one AI application for local and offline use

AnythingLLM is a comprehensive desktop AI application that enables users to chat with documents, use AI agents, and leverage custom models with complete privacy and offline capabilities.

- Freemium

- From $50

-

25

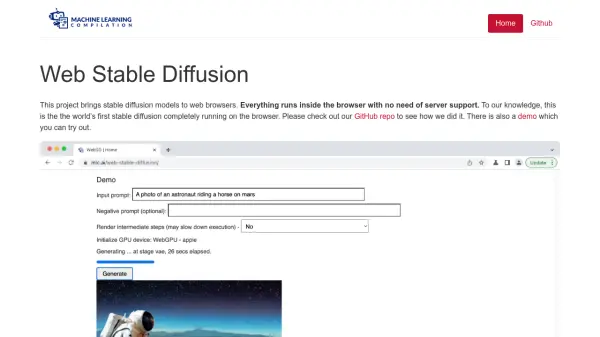

Web Stable Diffusion Run Stable Diffusion Models Directly in Your Web Browser

Web Stable Diffusion Run Stable Diffusion Models Directly in Your Web BrowserWeb Stable Diffusion is a project enabling stable diffusion models for text-to-image generation to run entirely within a web browser, eliminating server-side processing needs.

- Free

26. Interlify: Connect Your APIs to LLMs in minutes, Not Weeks!

Interlify seamlessly connects your existing APIs to Large Language Models (LLMs), enabling rapid integration and development of AI-powered features without complex coding.

- Freemium

- From $25

27. LM Studio: Discover, download, and run local LLMs on your computer

LM Studio is a desktop application that allows users to run Large Language Models (LLMs) locally and offline, supporting various architectures including Llama, Mistral, Phi, Gemma, DeepSeek, and Qwen 2.5.

- Free

28. Promptimize: Become an AI Pro with One Click

Promptimize is a browser extension that enhances your prompts for better AI-generated content. It offers one-click enhancements, dynamic variables, and a prompt library, compatible with any LLM.

- Freemium

- From $12

29. llmChef: Perfect AI responses with zero effort

llmChef is an AI enrichment engine that provides access to over 100 pre-made prompts (recipes) and leading LLMs, enabling users to get optimal AI responses without crafting perfect prompts.

- Paid

- From $5

30. OxyAPI: An AI Platform built for developers

OxyAPI provides developers with instant API access to a wide range of AI models, featuring global deployment and simple pay-as-you-go pricing.

- Usage Based

31. OpenLIT: Open Source Platform for AI Engineering

OpenLIT is an open-source observability platform designed to streamline AI development workflows, particularly for Generative AI and LLMs, offering features like prompt management, performance tracking, and secure secrets management.

- Other

32. LangSearch: Connect your LLM applications to the world.

LangSearch is a Web Search API that offers natural language search and semantic reranking, providing clean and accurate context for LLM applications.

- Free

33. LiteLLM: Unified API Gateway for 100+ LLM Providers

LiteLLM is a comprehensive LLM gateway solution that provides unified API management, authentication, load balancing, and spend tracking across multiple LLM providers including Azure OpenAI, Vertex AI, Bedrock, and OpenAI.

- Freemium

34. Dialoq AI: Run any AI models through one simple unified API

Dialoq AI is a comprehensive API gateway that enables developers to access and integrate 200+ Large Language Models (LLMs) through a single, unified API, streamlining AI application development with enhanced reliability and cost predictability.

- Contact for Pricing

35. Window: Use your own AI models on the web without API keys

Window is a browser extension that enables users to choose and configure their preferred AI models for web applications, eliminating the need for API keys or backend setup.

- Free

36. Kolosal AI: The Ultimate Local LLM Platform

Kolosal AI is a lightweight, open-source application enabling users to train, run, and chat with local Large Language Models (LLMs) directly on their devices, ensuring complete privacy and control.

- Free

37. SmartestChild: An AI chatbot running entirely in your browser.

SmartestChild is an AI chatbot powered by a Large Language Model that operates locally in the browser using WebGPU. No data is sent to servers, ensuring privacy.

- Free

38. Keywords AI: LLM monitoring for AI startups

Keywords AI is a comprehensive developer platform for LLM applications, offering monitoring, debugging, and deployment tools. It serves as a Datadog-like solution specifically designed for LLM applications.

- Freemium

- From $7

39. Inference.net: Run AI Models, Save Money

Inference.net provides fast, scalable, pay-per-token APIs for leading AI models like DeepSeek V3 and Llama 3.1, offering significant cost savings and easy integration.

- Usage Based

40. AIML API: One API, 200+ AI Models with 99% Uptime

AIML API is a comprehensive AI model marketplace offering seamless integration of 200+ AI models through a single API endpoint, featuring models from leading providers like OpenAI, Anthropic, Google, and Meta AI.

- Usage Based

41. /ML: The full-stack AI infra

/ML offers a full-stack AI infrastructure for serving large language models, training multi-modal models on GPUs, and hosting AI applications such as Streamlit, Gradio, and Dash, while providing cost observability.

- Contact for Pricing

42. yourchat.app: Chat With Your Self-Hosted AI LLM

YourChat is a cross-platform client enabling users to chat securely with their own self-hosted Large Language Models (LLMs), offering customization and privacy.

- Contact for Pricing

43. VESSL AI: Operationalize Full Spectrum AI & LLMs

VESSL AI provides a full-stack cloud infrastructure for AI, enabling users to train, deploy, and manage AI models and workflows with ease and efficiency.

- Usage Based

44. OpenRouter: A unified interface for LLMs

OpenRouter provides a unified interface for accessing and comparing various Large Language Models (LLMs), offering users the ability to find optimal models and pricing for their specific prompts.

- Usage Based

45. AIPal: Experience the Future of Web Browsing with AIPal!

AIPal is an AI-powered browser extension integrating models like GPT-3.5, GPT-4, and Claude 3 to enhance online activities such as chatting, writing, translating, and summarizing directly on any webpage.

- Freemium

-

46

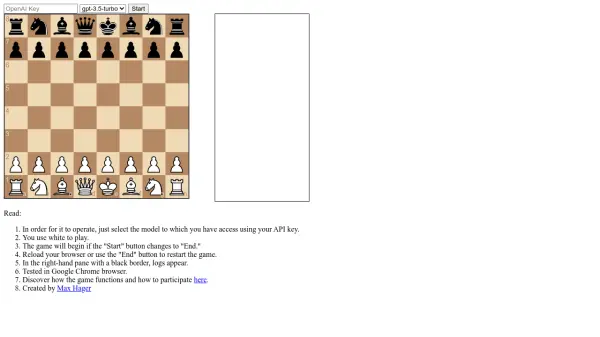

LLM Chess Play Chess Against Advanced Language Models

LLM Chess Play Chess Against Advanced Language ModelsLLM Chess allows users to play chess against AI language models like gpt-3.5-turbo and gpt-4 using their own API key.

- Free

47. Prompteus: One Platform to Rule AI.

Prompteus enables users to build, manage, and scale production-ready AI workflows efficiently, offering observability, intelligent routing, and cost optimization.

- Freemium

48. Viinyx AI: The most useful all-in-one AI assistant for your browser

Viinyx AI is a comprehensive browser extension that integrates multiple AI models like ChatGPT-4, Gemini 1.5, and Claude 3 to enhance web browsing and content creation workflows. It offers features like chatbox, writing assistance, and image generation across any website.

- Freemium

- From $45

49. Puma Browser: A Private, AI-Powered Mobile Browser with Local LLM Integration

Puma Browser is a cutting-edge mobile browser featuring built-in local AI models for private conversations and content summarization, prioritizing user privacy and data security.

- Free

50. Unify: Build AI Your Way

Unify provides tools to build, test, and optimize LLM pipelines with custom interfaces and a unified API for accessing all models across providers.

- Freemium

- From $40
