What is LLM Token Counter?

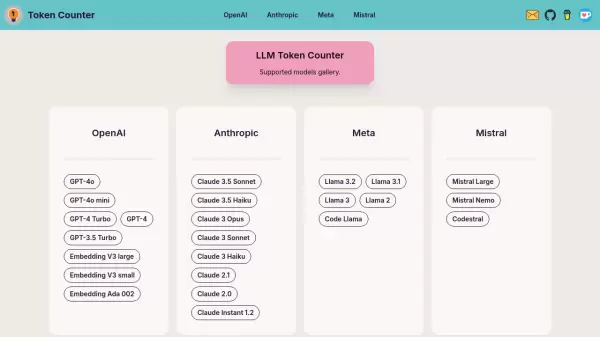

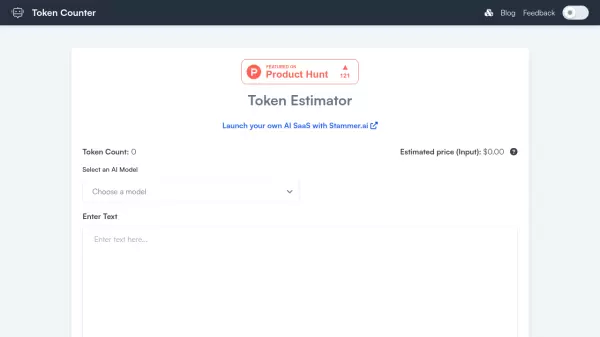

A tool that provides accurate token counts for a range of Large Language Models (LLMs). It is built on Transformers.js, so all processing happens securely on the client side. It supports models including GPT-4, Claude-3, and Llama-3, which makes precise token management possible without compromising data privacy.

The tool uses Transformers.js, the JavaScript implementation of the Hugging Face Transformers library, to calculate token counts quickly and directly in the browser. Because no server-side processing is involved, sensitive prompt content stays confidential on the user's device, while the tool still produces the accurate token counts needed to work effectively with LLMs.

Features

- Client-side Processing: Secure token counting without server transmission

- Multi-model Support: Compatible with GPT, Claude, Llama, and Mistral models

- Fast Performance: Efficient in-browser processing using Transformers.js, the JavaScript implementation of the Hugging Face Transformers library

- Privacy Protection: Complete data confidentiality with browser-based calculations

Use Cases

- Optimizing prompts for LLM token limits

- Preventing token limit overflow in AI applications

- Ensuring efficient use of API tokens

- Managing prompt length for multiple AI models
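All of these use cases come down to comparing a prompt's token count against a model's limit. The following is a minimal sketch of that check for several models at once. It is not part of the tool. The names `checkBudgets` and `ModelBudget` are hypothetical, and reserving part of the window for the model's reply is an assumption. It reflects the common situation where the prompt and the response share one context window. Counts are passed per model because different tokenizers produce different counts for the same text.

```typescript
// Per-model budget (hypothetical shape): the context window size and how many
// tokens to keep free for the model's response.
interface ModelBudget {
  model: string;
  contextWindow: number;
  reservedForOutput: number;
}

interface BudgetResult {
  model: string;
  promptTokens: number;
  remaining: number; // negative when the prompt overflows the budget
  fits: boolean;
}

// promptTokens maps each model name to the prompt's token count under that
// model's own tokenizer.
function checkBudgets(
  promptTokens: Record<string, number>,
  budgets: ModelBudget[],
): BudgetResult[] {
  return budgets.map(({ model, contextWindow, reservedForOutput }) => {
    const tokens = promptTokens[model];
    if (tokens === undefined) {
      throw new Error(`No token count for model "${model}"`);
    }
    // Space left after the prompt and the reserved output allowance.
    const remaining = contextWindow - reservedForOutput - tokens;
    return { model, promptTokens: tokens, remaining, fits: remaining >= 0 };
  });
}
```

For example, a 1,200-token prompt checked against a 2,048-token window with 1,024 tokens reserved for output leaves a remaining value of -176, so it is reported as not fitting.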

FAQs


What is LLM Token Counter?

LLM Token Counter is a tool that helps users manage token limits for a range of Large Language Models, including GPT-3.5, GPT-4, Claude-3, and Llama-3, and it is continuously updated to support more.

Why use an LLM Token Counter?

It is important to keep your prompt's token count within the model's token limit, because exceeding that limit may lead to unexpected or undesirable output from the LLM.
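When a prompt does exceed the limit, an application can trim it by tokens rather than by characters. The following is a minimal sketch, assuming a tokenizer with `encode` and `decode` methods (Transformers.js tokenizers provide both). The function `truncateToTokenLimit` is hypothetical and is not a feature of the tool. Re-encoding decoded text is not guaranteed to reproduce exactly the same token count with every tokenizer. Special tokens added by `encode` may also appear in the decoded text. For both reasons, re-checking the result with the counter is prudent.

```typescript
// A tokenizer that can encode text to token IDs and decode IDs back to text.
interface ReversibleTokenizer {
  encode(text: string): number[];
  decode(ids: number[]): string;
}

// Hypothetical helper: keeps the beginning of `text` and drops the tail so
// that at most `maxTokens` token IDs are decoded back into text.
function truncateToTokenLimit(
  tokenizer: ReversibleTokenizer,
  text: string,
  maxTokens: number,
): string {
  if (!Number.isInteger(maxTokens) || maxTokens < 0) {
    throw new RangeError("maxTokens must be a non-negative integer");
  }
  const ids = tokenizer.encode(text);
  if (ids.length <= maxTokens) {
    return text; // already within the limit, so return it unchanged
  }
  return tokenizer.decode(ids.slice(0, maxTokens));
}
```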

How does the LLM Token Counter work?

It uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library, to load tokenizers directly in your browser and calculate token counts on the client side.
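The loading-and-counting flow described in this answer can be sketched in TypeScript. This is a minimal illustration, not the tool's actual source. It assumes the `@huggingface/transformers` package, which exports `AutoTokenizer` (the older `@xenova/transformers` package exports the same class). The helper names `loadTokenizer` and `countTokens` are hypothetical.

```typescript
// Minimal tokenizer shape needed for counting: text in, token IDs out.
// Transformers.js tokenizers expose an encode() method compatible with this.
interface Tokenizer {
  encode(text: string): number[];
}

// One pending load per model ID, so switching back to a model does not reload it.
const tokenizerCache = new Map<string, Promise<Tokenizer>>();

// Loads a tokenizer with Transformers.js. The library is imported lazily,
// so it is only loaded when a tokenizer is first requested.
function loadTokenizer(modelId: string): Promise<Tokenizer> {
  let pending = tokenizerCache.get(modelId);
  if (!pending) {
    pending = import("@huggingface/transformers")
      .then(({ AutoTokenizer }) => AutoTokenizer.from_pretrained(modelId))
      .catch((err: unknown) => {
        tokenizerCache.delete(modelId); // allow a retry after a failed load
        throw err;
      });
    tokenizerCache.set(modelId, pending);
  }
  return pending;
}

// The token count is the number of IDs the tokenizer produces. Some tokenizers
// add special tokens (e.g. a beginning-of-sequence token) by default, and
// those are included in this count.
function countTokens(tokenizer: Tokenizer, text: string): number {
  return tokenizer.encode(text).length;
}
```

For example, `countTokens(await loadTokenizer("Xenova/gpt-4"), prompt)` would count a prompt with a GPT-4 tokenizer. The model ID here is an assumed Hugging Face Hub repository used only for illustration. Only the tokenizer files are downloaded (by default from the Hugging Face Hub), and the prompt itself is processed locally.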

Will I leak my prompt?

No. The token count is calculated client-side, so your prompt stays secure and confidential and is never transmitted to a server or any external party.


