LLM evaluation tools

BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249

-

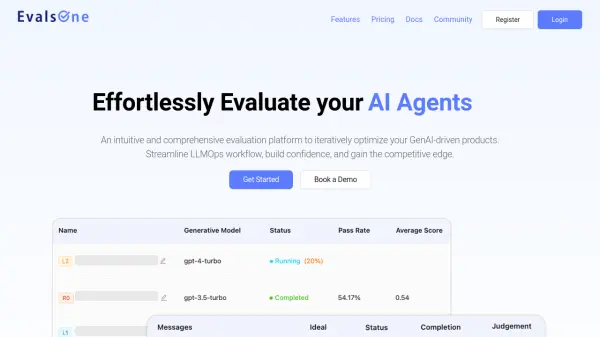

EvalsOne: Evaluate LLMs & RAG Pipelines Quickly

EvalsOne is a platform for rapidly evaluating Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines using various metrics.

- Freemium

- From $19

Gentrace: Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform designed for AI teams to test and automate evaluations of generative AI products and agents. It facilitates collaborative development and helps ensure high-quality LLM applications.

- Usage Based

PromptsLabs: A Library of Prompts for Testing LLMs

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

ModelBench: No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial

- From $49

Laminar: The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25

Hegel AI: Developer Platform for Large Language Model (LLM) Applications

Hegel AI provides a developer platform for building, monitoring, and improving large language model (LLM) applications, featuring tools for experimentation, evaluation, and feedback integration.

- Contact for Pricing

Parea: Test and Evaluate your AI systems

Parea is a platform for testing, evaluating, and monitoring Large Language Model (LLM) applications, helping teams track experiments, collect human feedback, and deploy prompts confidently.

- Freemium

- From $150

Neutrino AI: Multi-model AI Infrastructure for Optimal LLM Performance

Neutrino AI provides multi-model AI infrastructure to optimize Large Language Model (LLM) performance for applications. It offers tools for evaluation, intelligent routing, and observability to enhance quality, manage costs, and ensure scalability.

- Usage Based
