LLM development tool - AI tools

BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

Hegel AI: Developer Platform for Large Language Model (LLM) Applications

Hegel AI provides a developer platform for building, monitoring, and improving large language model (LLM) applications, featuring tools for experimentation, evaluation, and feedback integration.

- Contact for Pricing

PromptsLabs: A Library of Prompts for Testing LLMs

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

Laminar: The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25

LM Studio: Discover, download, and run local LLMs on your computer

LM Studio is a desktop application that allows users to run Large Language Models (LLMs) locally and offline, supporting model families including Llama, Mistral, Phi, Gemma, DeepSeek, and Qwen 2.5.

- Free

Literal AI: Ship reliable LLM products

Literal AI streamlines the development of LLM applications, offering tools for evaluation, prompt management, logging, monitoring, and more to build production-grade AI products.

- Freemium

LMQL: A programming language for LLMs

LMQL is a programming language designed for large language models, offering robust and modular prompting with types, templates, and constraints.

- Free

Flowise: Build LLM Apps Easily - Open Source Low-Code Tool for LLM Orchestration

Flowise is an open-source low-code platform that enables developers to build customized LLM orchestration flows and AI agents through a drag-and-drop interface.

- Freemium

- From $35

Lora: Integrate a local LLM with one line of code

Lora provides an SDK for integrating a fine-tuned, mobile-optimized local Large Language Model (LLM) into applications with minimal setup. It claims performance comparable to GPT-4o-mini.

- Freemium

Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249

-

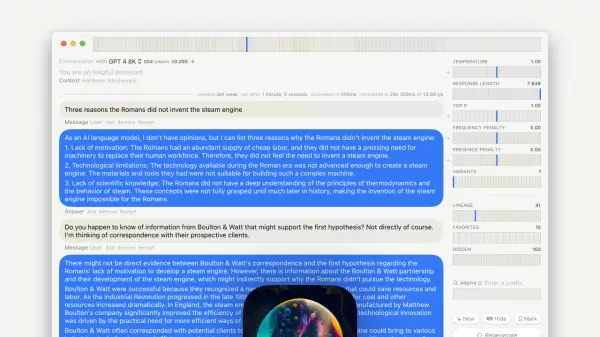

GPT–LLM Playground Your Comprehensive Testing Environment for Language Learning Models

GPT–LLM Playground Your Comprehensive Testing Environment for Language Learning ModelsGPT-LLM Playground is a macOS application designed for advanced experimentation and testing with Language Learning Models (LLMs). It offers features like multi-model support, versioning, and custom endpoints.

- Free

Inductor: Streamline Production-Ready LLM Applications

Inductor enables developers to rapidly prototype, evaluate, and improve LLM applications, with the goal of delivering high-quality, production-ready apps.

- Freemium

lm-studio.me: Local LLM Running & Download Platform

LM Studio is a user-friendly desktop application that allows users to run various large language models (LLMs) locally and offline, including Llama 2, PN3, Falcon, Mistral, StarCoder, and Gemma models from Hugging Face.

- Free

PromptMage: A Python framework for simplified LLM-based application development

PromptMage is a Python framework that streamlines the development of complex, multi-step applications powered by Large Language Models (LLMs), offering version control, testing capabilities, and automated API generation.

- Other

ModelBench: No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial

- From $49

-

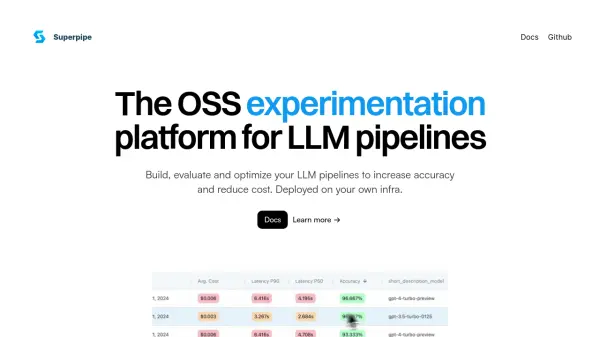

Superpipe The OSS experimentation platform for LLM pipelines

Superpipe The OSS experimentation platform for LLM pipelinesSuperpipe is an open-source experimentation platform designed for building, evaluating, and optimizing Large Language Model (LLM) pipelines to improve accuracy and minimize costs. It allows deployment on user infrastructure for enhanced privacy and security.

- Free

Gentrace: Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform designed for AI teams to test and automate evaluations of generative AI products and agents. It facilitates collaborative development and ensures high-quality LLM applications.

- Usage Based

LlamaEdge: The easiest, smallest and fastest local LLM runtime and API server

LlamaEdge is a lightweight and fast local LLM runtime and API server, powered by Rust and WasmEdge, designed for creating cross-platform LLM agents and web services.

- Free

Libretto: LLM Monitoring, Testing, and Optimization

Libretto offers comprehensive LLM monitoring, automated prompt testing, and optimization tools to ensure the reliability and performance of your AI applications.

- Freemium

- From $180

Rig: Build Modular and Scalable LLM Applications in Rust

Rig is a Rust-based framework for building modular and scalable LLM applications. It offers a unified LLM interface, Rust-powered performance, and advanced AI workflow abstractions.

- Free

-

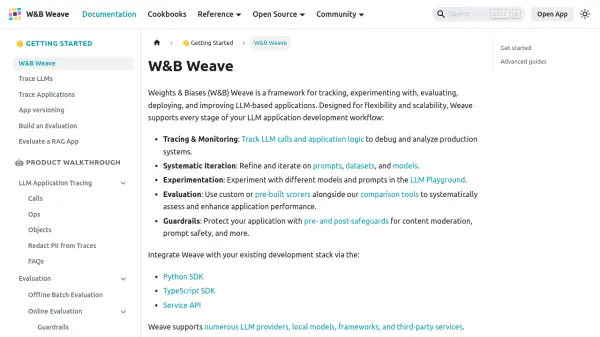

W&B Weave A Framework for Developing and Deploying LLM-Based Applications

W&B Weave A Framework for Developing and Deploying LLM-Based ApplicationsWeights & Biases (W&B) Weave is a comprehensive framework designed for tracking, experimenting with, evaluating, deploying, and enhancing LLM-based applications.

- Other

Braintrust: The end-to-end platform for building world-class AI apps

Braintrust provides an end-to-end platform for developing, evaluating, and monitoring Large Language Model (LLM) applications. It helps teams build robust AI products through iterative workflows and real-time analysis.

- Freemium

- From $249

Langfuse: Open Source LLM Engineering Platform

Langfuse provides an open-source platform for tracing, evaluation, and prompt management to debug and improve LLM applications.

- Freemium

- From $59

aider: AI Pair Programming in Your Terminal

Aider is a command-line tool that enables pair programming with LLMs to edit code in your local git repository. It supports various LLMs and offers top-tier performance on software engineering benchmarks.

- Free

GeneratorLLMs: Extracts core website content, creates structured text files, improves LLM comprehension, boosts search engine visibility, and delivers quality data for AI training and inference

GeneratorLLMs is a tool that creates standardized `llms.txt` files by extracting core website content. This improves how Large Language Models (LLMs) understand websites and enhances AI visibility.

- Free

DimBase: Build LLM-powered apps with no code

DimBase is a no-code platform designed for building and deploying applications powered by large language models (LLMs), simplifying AI-driven tool creation.

- Freemium

- From $29

LM-Kit: Enterprise-Grade C# Toolkits for AI Agent Integration

LM-Kit provides .NET developers with tools for AI agent customization, creation, and orchestration. It enables integration of multimodal generative AI systems into C# and VB.NET applications.

- Paid

- From $1,000

Langtail: The low-code platform for testing AI apps

Langtail is a comprehensive testing platform that enables teams to test and debug LLM-powered applications with a spreadsheet-like interface, offering security features and integration with major LLM providers.

- Freemium

- From $99

OneLLM: Fine-tune, evaluate, and deploy your next LLM without code

OneLLM is a no-code platform enabling users to fine-tune, evaluate, and deploy Large Language Models (LLMs) efficiently. Streamline LLM development by creating datasets, integrating API keys, running fine-tuning processes, and comparing model performance.

- Freemium

- From $19

Axolotl AI: We make fine-tuning accessible, scalable, fun

Axolotl AI is a free, open-source tool designed to make fine-tuning Large Language Models (LLMs) faster, more accessible, and scalable across various AI models and platforms.

- Free
