What is docs.litellm.ai?

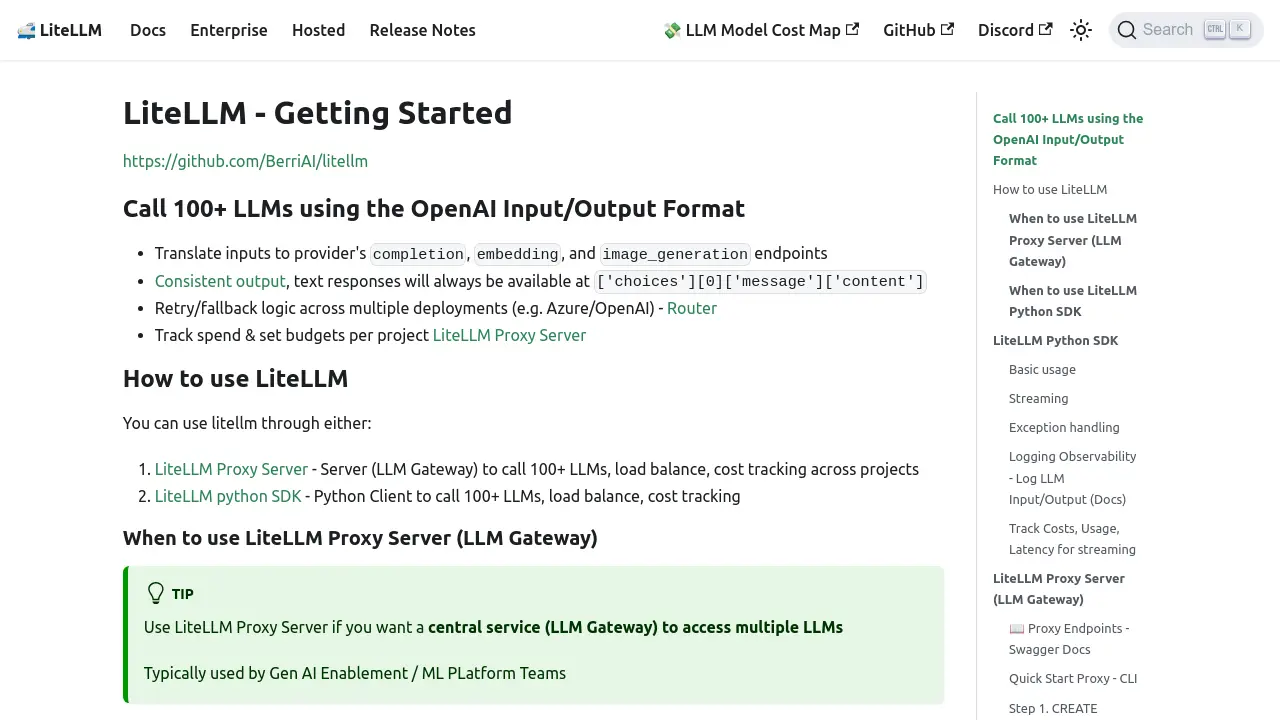

LiteLLM is a library and gateway for calling more than 100 large language models (LLMs) through one interface. Requests and responses follow a consistent OpenAI-compatible input/output format regardless of the provider, so an application can integrate several LLMs without writing provider-specific code for each.
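To make the OpenAI-compatible format concrete, here is a minimal sketch of the request and response shapes. It makes no network call and does not import LiteLLM: `sample_response` is a hand-written stand-in, and `extract_text` and the model name are hypothetical names used only for illustration.

```python
from typing import Any, Dict, List

# Request messages in the OpenAI chat format: a list of role/content dicts.
messages: List[Dict[str, str]] = [
    {"role": "user", "content": "Hello, how are you?"}
]

# Hand-written stand-in for a response in the OpenAI-compatible shape.
# Assumption: nothing is sent to a provider; the values are made up.
sample_response: Dict[str, Any] = {
    "model": "example-model",  # hypothetical model name
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "I'm fine, thanks."},
            "finish_reason": "stop",
        }
    ],
    "usage": {"prompt_tokens": 6, "completion_tokens": 5, "total_tokens": 11},
}


def extract_text(response: Dict[str, Any]) -> str:
    """Return the assistant text from an OpenAI-format response."""
    return response["choices"][0]["message"]["content"]


print(extract_text(sample_response))
```

With the Python SDK, the response would come from a call such as `litellm.completion(model=..., messages=messages)` rather than from a literal dict, and the same lookup path would apply.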

LiteLLM provides consistent output formatting, retry/fallback logic across deployments, and spend tracking. It can be used as a Python SDK for direct integration into code, or as a proxy server (LLM Gateway) for centralized management and access control.
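The retry/fallback idea can be sketched in plain Python. This is an illustration of the general pattern under stated assumptions, not LiteLLM's implementation: `call_with_fallback`, `flaky_azure`, and `stable_openai` are hypothetical names, and each "deployment" is just a callable that takes a prompt.

```python
import time
from typing import Callable, Optional, Sequence


def call_with_fallback(
    deployments: Sequence[Callable[[str], str]],
    prompt: str,
    retries: int = 2,
    delay: float = 0.0,
) -> str:
    """Try each deployment in order, retrying each one before moving on."""
    last_error: Optional[Exception] = None
    for deployment in deployments:
        for _attempt in range(retries):
            try:
                return deployment(prompt)
            except Exception as exc:  # a real system would catch narrower types
                last_error = exc
                time.sleep(delay)  # fixed pause between attempts
    raise RuntimeError("all deployments failed") from last_error


# Hypothetical stand-ins for two deployments of the same model.
def flaky_azure(prompt: str) -> str:
    raise ConnectionError("azure deployment unavailable")


def stable_openai(prompt: str) -> str:
    return f"echo: {prompt}"


result = call_with_fallback([flaky_azure, stable_openai], "ping")
print(result)
```

The first deployment is retried `retries` times, then the call falls through to the next one in the list. Only when every deployment has failed does the caller see an error.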

Features

- Unified Interface: Access 100+ LLMs using a consistent OpenAI-compatible format.

- Consistent Output: Text responses are always available at ['choices'][0]['message']['content'].

- Retry/Fallback Logic: Built-in mechanisms for handling failures and switching between deployments (e.g., Azure/OpenAI).

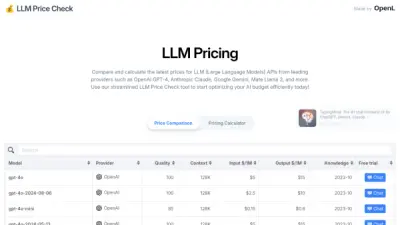

- Cost Tracking: Monitor and set budgets for LLM usage per project.

- Proxy Server (LLM Gateway): Centralized service for managing access to multiple LLMs, including logging and access control.

- Python SDK: Integrate LiteLLM directly into Python code for streamlined development.

- Streaming Support: Enable streaming for real-time interactions with LLMs.

- Exception Handling: Maps exceptions across providers to OpenAI exception types.

- Observability: Pre-defined callbacks for integrating with MLflow, Lunary, Langfuse, Helicone, and more.
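As an illustration of the streaming feature listed above, the sketch below assembles a full reply from streamed chunks. It assumes chunks follow the OpenAI streaming shape, where each chunk carries a partial `delta` instead of a complete `message`. `fake_stream` and `collect_text` are hypothetical helpers, and no provider is contacted.

```python
from typing import Any, Dict, Iterable, Iterator, List


def fake_stream() -> Iterator[Dict[str, Any]]:
    """Yield hand-written chunks in the assumed OpenAI streaming shape."""
    for piece in ["Hel", "lo", "!"]:
        yield {"choices": [{"index": 0, "delta": {"content": piece}}]}
    # The last chunk carries a finish reason and no content.
    yield {"choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}]}


def collect_text(chunks: Iterable[Dict[str, Any]]) -> str:
    """Concatenate the content pieces from a stream of chunks."""
    parts: List[str] = []
    for chunk in chunks:
        piece = chunk["choices"][0]["delta"].get("content")
        if piece:  # skip chunks whose delta has no text
            parts.append(piece)
    return "".join(parts)


full_text = collect_text(fake_stream())
print(full_text)
```

In an interactive application, each piece would typically be shown to the user as it arrives instead of being joined at the end.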

Use Cases

- Developing applications requiring access to multiple LLMs.

- Building LLM-powered features with fallback and redundancy.

- Centralized management of LLM access and usage within an organization.

- Integrating various LLMs into existing Python projects.

- Tracking and controlling costs associated with LLM usage.

- Creating a unified LLM gateway for internal teams.
