Rhesis AI - Alternatives & Competitors

Open-source test generation SDK for LLM applications

Rhesis AI offers an open-source SDK to generate comprehensive, context-specific test sets for LLM applications, enhancing AI evaluation, reliability, and compliance.

Ranked by Relevance

1. RoostGPT: Automated Test Case Generation using LLMs for Reliable Software Development

RoostGPT is an AI-powered testing co-pilot that automates test case generation, providing 100% test coverage while detecting static vulnerabilities. It leverages Large Language Models to enhance software development efficiency and reliability.

- Paid
- From $25,000

2. BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

-

3

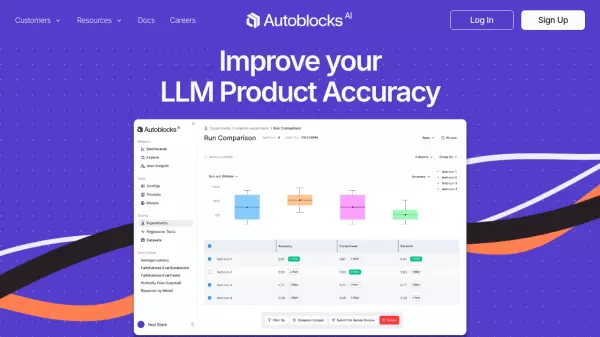

Autoblocks Improve your LLM Product Accuracy with Expert-Driven Testing & Evaluation

Autoblocks Improve your LLM Product Accuracy with Expert-Driven Testing & EvaluationAutoblocks is a collaborative testing and evaluation platform for LLM-based products that automatically improves through user and expert feedback, offering comprehensive tools for monitoring, debugging, and quality assurance.

- Freemium

- From 1750$

4. Autumn8: Compliance Assessment for Gen AI Applications

Autumn8 streamlines enterprise compliance assessments for Gen AI applications, significantly reducing preparation time and improving acceptance rates through automated document analysis.

- Contact for Pricing

5. Hegel AI: Developer Platform for Large Language Model (LLM) Applications

Hegel AI provides a developer platform for building, monitoring, and improving large language model (LLM) applications, featuring tools for experimentation, evaluation, and feedback integration.

- Contact for Pricing

6. Flow AI: The data engine for AI agent testing

Flow AI accelerates AI agent development by providing continuously evolving, validated test data grounded in real-world information and refined by domain experts.

- Contact for Pricing

7. Langtail: The low-code platform for testing AI apps

Langtail is a comprehensive testing platform that enables teams to test and debug LLM-powered applications with a spreadsheet-like interface, offering security features and integration with major LLM providers.

- Freemium
- From $99

8. Humanloop: The LLM evals platform for enterprises to ship and scale AI with confidence

Humanloop is an enterprise-grade platform that provides tools for LLM evaluation, prompt management, and AI observability, enabling teams to develop, evaluate, and deploy trustworthy AI applications.

- Freemium

9. NeuralTrust: Secure, test, & scale LLMs

NeuralTrust offers a unified platform for securing, testing, monitoring, and scaling Large Language Model (LLM) applications, ensuring robust security, regulatory compliance, and operational control for enterprises.

- Contact for Pricing

10. Relari: Trusting your AI should not be hard

Relari offers a contract-based development toolkit to define, inspect, and verify AI agent behavior using natural language, ensuring robustness and reliability.

- Freemium
- From $1,000

11. Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium
- From $249

12. promptfoo: Test & secure your LLM apps with open-source LLM testing

promptfoo is an open-source LLM testing tool designed to help developers secure and evaluate their language model applications, offering features like vulnerability scanning and continuous monitoring.

- Freemium

13. Gentrace: Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform designed for AI teams to test and automate evaluations of generative AI products and agents. It facilitates collaborative development and ensures high-quality LLM applications.

- Usage Based

14. OpenLIT: Open Source Platform for AI Engineering

OpenLIT is an open-source observability platform designed to streamline AI development workflows, particularly for Generative AI and LLMs, offering features like prompt management, performance tracking, and secure secrets management.

- Other

15. NAVI: Policy-Driven Safeguards for your LLM Apps

NAVI provides policy-driven safeguards for LLM applications, verifying AI inputs and outputs against business policies and facts in real time to ensure compliance and accuracy.

- Freemium

16. Alumnium: Bridge the gap between human and automated testing! Translate your test instructions into executable commands using AI.

Alumnium is an AI-powered tool that translates natural language test instructions into executable commands for browser test automation, integrating with Playwright and Selenium.

- Freemium

17. Syntheticus: Enabling the Full Potential of AI with Safe Synthetic Data

Syntheticus utilizes Generative AI to produce high-quality, compliant synthetic data at scale, addressing real-world data challenges for AI/LLM, software testing, and analytics.

- Contact for Pricing

18. Maihem: Enterprise-grade quality control for every step of your AI workflow.

Maihem empowers technology leaders and engineering teams to test, troubleshoot, and monitor any AI workflow, including agentic ones, at scale. It offers industry-leading AI testing and red-teaming capabilities.

- Contact for Pricing

19. Adaline: Ship reliable AI faster

Adaline is a collaborative platform for teams building with Large Language Models (LLMs), enabling efficient iteration, evaluation, deployment, and monitoring of prompts.

- Contact for Pricing

20. Reprompt: Collaborative prompt testing for confident AI deployment

Reprompt is a developer-focused platform that enables efficient testing and optimization of AI prompts with real-time analysis and comparison capabilities.

- Usage Based

21. Loadmill: Generative AI for Test Automation

Loadmill utilizes generative AI to simplify the creation, maintenance, and analysis of automated test scripts, transforming user behavior into robust tests to accelerate development cycles.

- Free Trial

22. Reva: Use the right LLM for your task

Reva helps businesses test AI configurations and compare LLM outcomes to ensure optimal performance for their specific tasks, focusing on outcome-driven AI testing and model evaluation.

- Contact for Pricing

23. Tumeryk: Ensure Trustworthy AI Deployments with Real-Time Scoring and Compliance

Tumeryk provides AI security solutions, featuring the AI Trust Score™ for real-time trustworthiness assessment and the AI Trust Manager for compliance and remediation, supporting diverse LLMs and deployment environments.

- Freemium

24. LatticeFlow AI: AI Results You Can Trust

LatticeFlow AI helps businesses develop performant, trustworthy, and compliant AI applications. The platform focuses on ensuring AI models are reliable and meet regulatory standards.

- Contact for Pricing

25. Scorecard.io: Testing for production-ready LLM applications, RAG systems, Agents, Chatbots.

Scorecard.io is an evaluation platform designed for testing and validating production-ready Generative AI applications, including LLMs, RAG systems, agents, and chatbots. It supports the entire AI production lifecycle from experiment design to continuous evaluation.

- Contact for Pricing

26. ValidMind: AI Risk Management for the Modern Enterprise

ValidMind is a comprehensive platform for AI and Model Risk Management, enabling teams to test, document, validate, and govern AI models with speed and confidence.

- Contact for Pricing

27. TestAI: Automated AI Voice Agent Testing

TestAI is an automated platform that ensures the performance, accuracy, and reliability of voice and chat agents. It offers real-world simulations, scenario testing, and trust & safety reporting, delivering flawless AI evaluations in minutes.

- Paid
- From $12

28. RamenLegal: AI for Legal Documentation

RamenLegal is an AI-powered platform that automates the creation of critical business documents, saving time and reducing legal risk. It offers customizable templates and AI tools for drafting, research, and analysis.

- Freemium
- From $19

29. Great Wave AI: An operating system for agentic GenAI in government and regulated industries

Great Wave AI provides a platform for building and managing GenAI agents, focused on quality, trust, usability, control, and security & compliance for government and regulated industries.

- Contact for Pricing

30. DataMaker: Your AI-powered test data assistant.

DataMaker is an AI-powered tool that generates realistic synthetic test data using natural language prompts, integrating directly with enterprise systems to speed up development.

- Contact for Pricing

31. useflowtest.ai: Unleash the power of APIs with GenAI

FlowTestAI is a GenAI-powered, open-source IDE designed for crafting, visualizing, and managing API-first workflows. It offers a fast, lightweight, and local solution for seamless API integration and enhanced privacy.

- Other

32. PromptsLabs: A Library of Prompts for Testing LLMs

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

33. Lintrule: Let the LLM review your code

Lintrule is a command-line tool that uses large language models to perform automated code reviews, enforce coding policies, and detect bugs beyond traditional linting capabilities.

- Usage Based

34. EvalsOne: Evaluate LLMs & RAG Pipelines Quickly

EvalsOne is a platform for rapidly evaluating Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines using various metrics.

- Freemium
- From $19

35. Tenjin: Unify Web, Mobile, API, and Database testing under one AI-powered automation platform.

Tenjin is an AI-powered test automation platform unifying Web, Mobile, API, and Database testing. It simplifies QA, accelerates releases, and improves customer experience (CX) using AI-assisted test design and codeless automation.

- Freemium
- From $399

36. AIShield: AI Security Delivered

AIShield provides comprehensive AI security solutions, protecting AI/ML and LLM applications from development to deployment. It offers automated vulnerability assessments, real-time threat mitigation, and compliance reporting.

- Contact for Pricing

37. Protect AI: The Platform for AI Security

Protect AI offers a comprehensive platform to secure AI systems, enabling organizations to manage security risks and defend against AI-specific threats.

- Contact for Pricing

38. Bot Test: Automated testing to build quality, reliability, and safety into your AI-based chatbot, with no code.

Bot Test offers automated, no-code testing solutions for AI-based chatbots, ensuring quality, reliability, and security. It provides comprehensive testing, smart evaluation, and enterprise-level scalability.

- Freemium
- From $25

39. Dynamo AI: Manage AI Risk. Productionize Use Cases at Scale.

Dynamo AI provides auditable AI guardrails, hallucination checks, red-teaming, and observability to help businesses productionize AI with confidence, addressing security and compliance gaps.

- Contact for Pricing

40. Centrox AI: Ship Production-Ready Gen AI Faster

Centrox AI is a full-cycle AI development company specializing in Gen AI solutions, offering services from data curation to deployment across healthcare, fintech, retail, and real estate industries.

- Contact for Pricing

41. Lega: Large Language Model Governance

Lega empowers law firms and enterprises to safely explore, assess, and implement generative AI technologies. It provides enterprise guardrails for secure LLM exploration and a toolset to capture and scale critical learnings.

- Contact for Pricing

42. Ottic: QA for LLM products done right

Ottic empowers technical and non-technical teams to test LLM applications, ensuring faster product development and enhanced reliability. Streamline your QA process and gain full visibility into your LLM application's behavior.

- Contact for Pricing

43. ModelBench: No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial
- From $49
