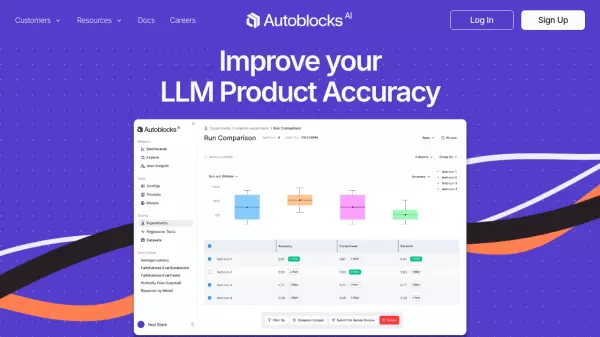

What is Autoblocks?

Autoblocks is a platform for improving the accuracy and performance of LLM-based products. It combines testing, monitoring, and evaluation tools in one place.

Autoblocks provides SDKs that let teams trace events, test application behavior, and manage prompts and configurations. Its human-in-the-loop feedback system lets both technical and non-technical stakeholders contribute to product improvement, and the platform is built to meet privacy and security requirements.

Features

- Test Suite Management: Comprehensive testing and evaluation framework

- Human Feedback Integration: Expert-driven evaluation system

- Observability Tools: Production monitoring and analytics

- Dataset Curation: High-quality test dataset management

- RAG Optimization: Context pipeline engineering tools

- Prompt Management: Collaborative prompt versioning and testing

- Security Compliance: Enterprise-grade privacy and security features

- SDK Integration: Flexible development tools for any codebase

Use Cases

- LLM Product Testing and Evaluation

- AI Performance Monitoring

- Collaborative Prompt Engineering

- Quality Assurance for AI Applications

- Production System Debugging

- Context Pipeline Optimization

- User Feedback Collection and Analysis

FAQs

- What are the key differences between the Free and Full-Stack LLMOps plans?

  The Free plan includes 3 reviewer seats and 5 test suites with unlimited review jobs, while the Full-Stack LLMOps plan offers 10 editor seats, a comprehensive evaluation framework, LLM observability, dataset management, and white-glove support.

- How does Autoblocks integrate with existing AI development workflows?

  Autoblocks provides flexible SDKs that can integrate with any codebase or framework, allowing teams to trace events, test behavior, and manage prompts while maintaining code as the source of truth.

- What security measures does Autoblocks implement?

  Autoblocks is engineered to satisfy rigorous privacy and security requirements, making it suitable for enterprise-level implementations and sensitive data handling.
