Top AI tools for evaluation

BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

Freeplay: The All-in-One Platform for AI Experimentation, Evaluation, and Observability

Freeplay provides comprehensive tools for AI teams to run experiments, evaluate model performance, and monitor production, streamlining the development process.

- Paid

- From $500

Ottic: QA for LLM products done right

Ottic empowers tech and non-technical teams to test LLM applications, ensuring faster product development and enhanced reliability. Streamline your QA process and gain full visibility into your LLM application's behavior.

- Contact for Pricing

Arize: Unified Observability and Evaluation Platform for AI

Arize is a comprehensive platform designed to accelerate the development and improve the production of AI applications and agents.

- Freemium

- From $50

Coval: Ship reliable AI Agents faster

Coval provides simulation and evaluation tools for voice and chat AI agents, enabling faster development and deployment. It leverages AI-powered simulations and comprehensive evaluation metrics.

- Contact for Pricing

-

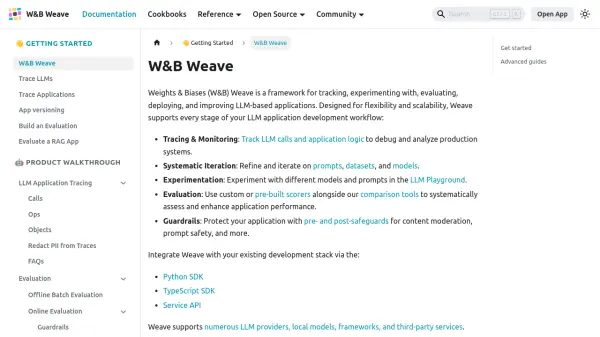

W&B Weave A Framework for Developing and Deploying LLM-Based Applications

W&B Weave A Framework for Developing and Deploying LLM-Based ApplicationsWeights & Biases (W&B) Weave is a comprehensive framework designed for tracking, experimenting with, evaluating, deploying, and enhancing LLM-based applications.

- Other

Align: The Analytics Engine For Your Gen-AI Product

Align is an analytics solution that enables organizations to analyze and evaluate data from LLM-based conversational products, improving chatbot performance and user interactions.

- Contact for Pricing

phoenix.arize.com: Open-source LLM tracing and evaluation

Phoenix accelerates AI development with powerful insights, allowing seamless evaluation, experimentation, and optimization of AI applications in real time.

- Freemium

Basalt: Integrate AI in your product in seconds

Basalt is an AI building platform that helps teams quickly create, test, and launch reliable AI features. It offers tools for prototyping, evaluating, and deploying AI prompts.

- Freemium

Langfuse: Open Source LLM Engineering Platform

Langfuse provides an open-source platform for tracing, evaluating, and managing prompts to debug and improve LLM applications.

- Freemium

- From $59

Gentrace: Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform designed for AI teams to test and automate evaluations of generative AI products and agents. It facilitates collaborative development and ensures high-quality LLM applications.

- Usage Based

Gooey.AI: AI for Global Impact

Gooey.AI is a workflow platform that empowers organizations to build and deploy AI solutions rapidly, focusing on frontline worker productivity and global impact.

- Freemium

- From $20

ModelBench: No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial

- From $49

Unify: Build AI Your Way

Unify provides tools to build, test, and optimize LLM pipelines with custom interfaces and a unified API for accessing all models across providers.

- Freemium

- From $40

Maxim: Simulate, evaluate, and observe your AI agents

Maxim is an end-to-end evaluation and observability platform designed to help teams ship AI agents reliably and more than 5x faster.

- Paid

- From $29

MLflow: ML and GenAI made simple

MLflow is an open-source, end-to-end MLOps platform for building better models and generative AI apps. It simplifies complex ML and generative AI projects, offering comprehensive management from development to production.

- Free

Oumi: The Open Platform for Building, Evaluating, and Deploying AI Models

Oumi provides an open, collaborative platform for researchers and developers to build, evaluate, and deploy state-of-the-art AI models, from data preparation to production.

- Contact for Pricing

Transformer Lab: The Open Source Platform for Training Advanced AI Models

Transformer Lab is an open-source platform enabling researchers, ML engineers, and developers to collaboratively build, train, evaluate, and deploy AI models with features like provenance, reproducibility, and transparency.

- Free

Relari: Trusting your AI should not be hard

Relari offers a contract-based development toolkit to define, inspect, and verify AI agent behavior using natural language, ensuring robustness and reliability.

- Freemium

- From $1000

Humanloop: The LLM evals platform for enterprises to ship and scale AI with confidence

Humanloop is an enterprise-grade platform that provides tools for LLM evaluation, prompt management, and AI observability, enabling teams to develop, evaluate, and deploy trustworthy AI applications.

- Freemium

klu.ai: Next-gen LLM App Platform for Confident AI Development

Klu is an all-in-one LLM App Platform that enables teams to experiment, version, and fine-tune GPT-4 Apps with collaborative prompt engineering and comprehensive evaluation tools.

- Freemium

- From $30

Laminar: The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25

Prompt Mixer: Open source tool for prompt engineering

Prompt Mixer is a desktop application for teams to create, test, and manage AI prompts and chains across different language models, featuring version control and comprehensive evaluation tools.

- Freemium

- From $29

DECipher: Your AI-Powered Partner in Maximizing Development Impact

DECipher is an innovative AI platform that synthesizes insights from 75 years of global development data, providing tailored recommendations for international development challenges based on over 13,000 documents from the Development Experience Clearinghouse.

- Free

HoneyHive: AI Observability and Evaluation Platform for Building Reliable AI Products

HoneyHive is a comprehensive platform that provides AI observability, evaluation, and prompt management tools to help teams build and monitor reliable AI applications.

- Freemium

evAIuate: An expert pitch coach in your pocket

evAIuate is an AI-powered pitch deck evaluation tool that uses GPT-4 technology to analyze, score, and provide detailed feedback on presentations across various industries.

- Freemium

- From $10

Agenta: End-to-End LLM Engineering Platform

Agenta is an LLM engineering platform offering tools for prompt engineering, versioning, evaluation, and observability in a single, collaborative environment.

- Freemium

- From $49

Latitude: Open-source prompt engineering platform for reliable AI product delivery

Latitude is an open-source platform that helps teams track, evaluate, and refine their AI prompts using real data, enabling confident deployment of AI products.

- Freemium

- From $99

LangWatch: Monitor, Evaluate & Optimize your LLM performance with 1-click

LangWatch empowers AI teams to ship 10x faster with quality assurance at every step. It provides tools to measure, maximize, and easily collaborate on LLM performance.

- Paid

- From $59

GreetAI: Build Interview Simulations with AI Voice Agents

GreetAI offers AI-powered voice agents for screening, training, and evaluating candidates through customizable interview simulations. It provides detailed reports and insights to streamline the hiring process.

- Freemium

Negotyum: Validate & Improve Business Ideas Instantly

Negotyum is an AI-powered platform for entrepreneurs to evaluate the quality, risk, and financial viability of business ideas quickly and securely.

- Freemium

Maya AI Interview: Effortless AI-Powered Screening and Interviews

Maya AI Interview automates candidate screening and interviews based on your job listings and evaluation criteria, streamlining the hiring process.

- Paid

- From $59

Helicone: Ship your AI app with confidence

Helicone is an all-in-one platform for monitoring, debugging, and improving production-ready LLM applications. It provides tools for logging, evaluating, experimenting, and deploying AI applications.

- Freemium

- From $20

Just a Human: Gamified 3D Asset Evaluation and Labeling

Just a Human offers a gamified platform for 3D asset evaluation and labeling, rewarding players with game credits, GenAI service provider credits, or crypto.

- Free

-

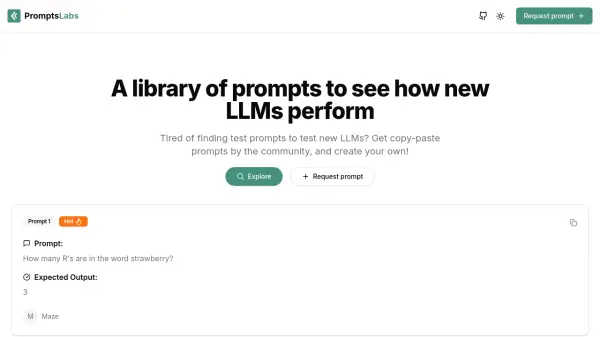

PromptsLabs A Library of Prompts for Testing LLMs

PromptsLabs A Library of Prompts for Testing LLMsPromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free
