Membase-MCP Server

Decentralized memory layer server for AI agents using the Model Context Protocol.

Description

Membase is the first decentralized memory layer for AI agents, powered by Unibase. It provides secure, persistent storage for conversation history, interaction records, and knowledge, which gives agents continuity, personalization, and traceability.

The Membase-MCP Server enables seamless integration with the Membase protocol, allowing agents to upload and retrieve memory from the Unibase DA network for decentralized, verifiable storage.

Functions

Saved messages or memories can be viewed at: https://testnet.hub.membase.io/

- get_conversation_id: Get the current conversation ID.

- switch_conversation: Switch to a different conversation.

- save_message: Save a message/memory into the current conversation.

- get_messages: Get the last n messages from the current conversation.
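To make the semantics of the four functions concrete, here is a minimal in-memory sketch. It is not the server's implementation: the class name, the `role` field, and the parameter names are assumptions for illustration, and the real server persists messages to the Unibase DA network rather than to a local dict.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryConversationStore:
    """Hypothetical local stand-in for the four Membase tools (not the real server)."""

    conversation_id: str
    # Messages per conversation ID; the real server stores them on the Unibase DA network.
    history: dict[str, list[dict[str, str]]] = field(default_factory=dict, repr=False)

    def get_conversation_id(self) -> str:
        return self.conversation_id

    def switch_conversation(self, conversation_id: str) -> None:
        # Subsequent saves and reads target the new conversation.
        self.conversation_id = conversation_id

    def save_message(self, content: str, role: str = "user") -> None:
        # The "role" field is an assumption made for illustration.
        self.history.setdefault(self.conversation_id, []).append(
            {"role": role, "content": content}
        )

    def get_messages(self, n: int) -> list[dict[str, str]]:
        # Last n messages of the current conversation, oldest first.
        # Guard n <= 0 because list[-0:] would return the whole list.
        if n <= 0:
            return []
        return self.history.get(self.conversation_id, [])[-n:]


store = InMemoryConversationStore("conv-a")
store.save_message("hello")
store.save_message("prefers tea, no sugar")
store.switch_conversation("conv-b")
store.save_message("unrelated thread")
store.switch_conversation("conv-a")
print(store.get_messages(1))  # [{'role': 'user', 'content': 'prefers tea, no sugar'}]
```

Switching conversations only changes which history later calls read and write; nothing saved under the previous ID is lost.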

Installation

git clone https://github.com/unibaseio/membase-mcp.git

cd membase-mcp

uv run src/membase_mcp/server.py

Environment variables

- MEMBASE_ACCOUNT: the account used to upload memories (a 0x... address)

- MEMBASE_CONVERSATION_ID: the conversation ID; it should be unique, and its existing history is preloaded

- MEMBASE_ID: your instance ID (a sub-account name; any string)
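A server or wrapper script might read and sanity-check these variables before starting. The sketch below is a hypothetical helper, not code from the repository; the 0x-prefix check is an assumption based on the sample configuration.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass
from typing import Optional

# Variable names taken from the list above.
REQUIRED_VARS = ("MEMBASE_ACCOUNT", "MEMBASE_CONVERSATION_ID", "MEMBASE_ID")


@dataclass(frozen=True)
class MembaseSettings:
    account: str          # upload account, e.g. 0x...
    conversation_id: str  # unique; its history is preloaded
    instance_id: str      # instance / sub-account name


def load_membase_settings(env: Optional[Mapping[str, str]] = None) -> MembaseSettings:
    """Hypothetical helper: read and sanity-check the MEMBASE_* variables."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise ValueError(f"missing environment variables: {', '.join(missing)}")
    account = env["MEMBASE_ACCOUNT"]
    # Assumption: the account is a 0x-prefixed address, as the sample config suggests.
    if not account.startswith("0x"):
        raise ValueError("MEMBASE_ACCOUNT should be a 0x... address")
    return MembaseSettings(
        account=account,
        conversation_id=env["MEMBASE_CONVERSATION_ID"],
        instance_id=env["MEMBASE_ID"],
    )
```

A unique conversation ID can be generated once, for example with `python -c "import uuid; print(uuid.uuid4())"`, and then reused so the same history is preloaded each time.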

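Configuration for Claude, Windsurf, Cursor, and Cline

Add the following entry to the client's MCP server configuration, replacing path/to/membase-mcp with the path to the cloned repository: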

{
  "mcpServers": {
    "membase": {
      "command": "uv",
      "args": [
        "--directory",
        "path/to/membase-mcp",
        "run",
        "src/membase_mcp/server.py"
      ],
      "env": {
        "MEMBASE_ACCOUNT": "your account, 0x...",
        "MEMBASE_CONVERSATION_ID": "your conversation id, should be unique",
        "MEMBASE_ID": "your sub account, any string"
      }
    }
  }
}
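If you maintain several agents, the entry can be generated rather than edited by hand. This is a hypothetical helper that reproduces the JSON structure of the configuration entry; where the resulting file lives depends on the client and is not covered here.

```python
import json
from pathlib import Path


def membase_client_config(repo_dir: str, account: str, conversation_id: str, instance_id: str) -> dict:
    """Hypothetical helper: build the "mcpServers" entry for the Membase server."""
    return {
        "mcpServers": {
            "membase": {
                "command": "uv",
                "args": [
                    "--directory",
                    # An absolute path avoids depending on the client's working directory.
                    str(Path(repo_dir).expanduser().resolve()),
                    "run",
                    "src/membase_mcp/server.py",
                ],
                "env": {
                    "MEMBASE_ACCOUNT": account,
                    "MEMBASE_CONVERSATION_ID": conversation_id,
                    "MEMBASE_ID": instance_id,
                },
            }
        }
    }


if __name__ == "__main__":
    # Placeholder values; substitute your own account and IDs.
    config = membase_client_config("~/src/membase-mcp", "0x...", "my-unique-conversation", "my-agent")
    print(json.dumps(config, indent=2))
```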

Usage

call functions in llm chat

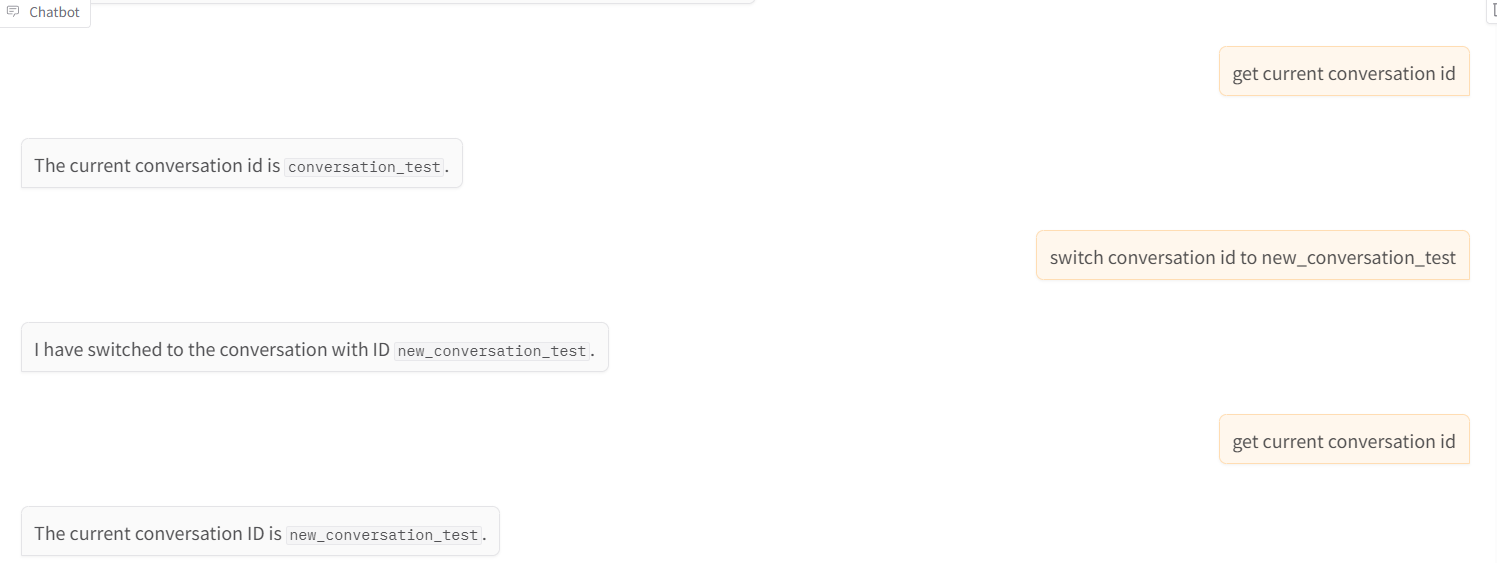

- get conversation id and switch conversation

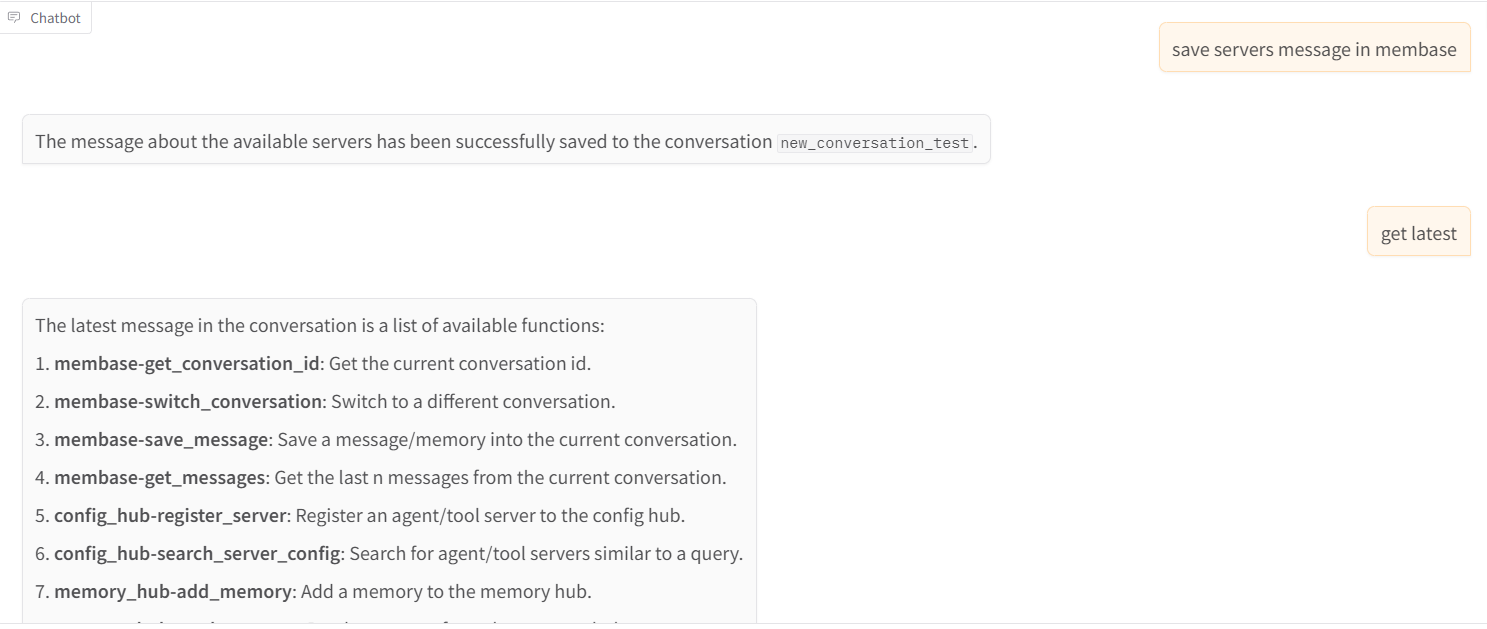

- save message and get messages
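Behind the chat, the client invokes each function with an MCP `tools/call` request, a JSON-RPC 2.0 message that is sent after the initialize handshake; over the stdio transport each message is one newline-terminated line. The sketch below only encodes such a request. The argument name `n` is a hypothetical placeholder: check the server's actual tool schema (returned by `tools/list`) before relying on it.

```python
import json
from typing import Any, Optional

# Tool names from the Functions list above.
MEMBASE_TOOLS = {"get_conversation_id", "switch_conversation", "save_message", "get_messages"}


def tools_call_request(request_id: int, name: str, arguments: Optional[dict[str, Any]] = None) -> str:
    """Encode one MCP "tools/call" request as a newline-terminated JSON-RPC 2.0 line."""
    if name not in MEMBASE_TOOLS:
        raise ValueError(f"unknown Membase tool: {name}")
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments or {}},
    }
    # json.dumps escapes newlines inside strings, so the line has exactly one "\n".
    return json.dumps(message, separators=(",", ":")) + "\n"


# "n" is a hypothetical argument name used only for illustration.
print(tools_call_request(2, "get_messages", {"n": 5}), end="")
# {"jsonrpc":"2.0","id":2,"method":"tools/call","params":{"name":"get_messages","arguments":{"n":5}}}
```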

Related MCPs

Discover similar Model Context Protocol servers

Memory MCP

A Model Context Protocol server for managing LLM conversation memories with intelligent context window caching.

Memory MCP provides a Model Context Protocol (MCP) server for logging, retrieving, and managing memories from large language model (LLM) conversations. It offers features such as context window caching, relevance scoring, and tag-based context retrieval, leveraging MongoDB for persistent storage. The system is designed to efficiently archive, score, and summarize conversational context, supporting external orchestration and advanced memory management tools. This enables seamless handling of conversation history and dynamic context for enhanced LLM applications.

- ⭐ 10

- MCP

- JamesANZ/memory-mcp

Agentic Long-Term Memory with Notion Integration

Production-ready agentic long-term memory and Notion integration with Model Context Protocol support.

Agentic Long-Term Memory with Notion Integration enables AI agents to incorporate advanced long-term memory capabilities using both vector and graph databases. It offers comprehensive Notion workspace integration along with a production-ready Model Context Protocol (MCP) server supporting HTTP and stdio transports. The tool facilitates context management, tool discovery, and advanced function chaining for complex agentic workflows.

- ⭐ 4

- MCP

- ankitmalik84/Agentic_Longterm_Memory

Stape MCP Server

An MCP server implementation for integrating Stape with AI model context protocols.

Stape MCP Server provides an implementation of the Model Context Protocol server tailored for the Stape platform. It enables secure and standardized access to model context capabilities, allowing integration with tools such as Claude Desktop and Cursor AI. Users can easily configure and authenticate MCP connections using provided configuration samples, while managing context and credentials securely. The server is open source and maintained by the Stape Team under the Apache 2.0 license.

- ⭐ 4

- MCP

- stape-io/stape-mcp-server

Daisys MCP server

A beta server implementation for the Model Context Protocol supporting audio context with Daisys integration.

Daisys MCP server provides a beta implementation of the Model Context Protocol (MCP), enabling seamless integration between the Daisys AI platform and various MCP clients. It allows users to connect MCP-compatible clients to Daisys through configurable authentication and environment settings, with out-of-the-box support for audio file storage and playback. The server is designed to be extensible, including support for both user-level deployments and developer contributions, with best practices for secure authentication and dependency management.

- ⭐ 10

- MCP

- daisys-ai/daisys-mcp

anki-mcp

MCP server for seamless integration with Anki via AnkiConnect.

An MCP server that bridges Anki flashcards with the Model Context Protocol, exposing AnkiConnect functionalities as standardized MCP tools. It organizes Anki actions into intuitive services covering decks, notes, cards, and models for easy access and automation. Designed for integration with AI assistants and other MCP-compatible clients, it enables operations like creating, modifying, and organizing flashcards through a unified protocol.

- ⭐ 6

- MCP

- ujisati/anki-mcp

IDA Pro MCP

Enabling Model Context Protocol server integration with IDA Pro for collaborative reverse engineering.

IDA Pro MCP provides a Model Context Protocol (MCP) server that connects the IDA Pro reverse engineering platform to clients supporting the MCP standard. It exposes a wide array of program analysis and manipulation functionalities such as querying metadata, accessing functions, globals, imports, and strings, decompiling code, disassembling, renaming variables, and more, in a standardized way. This enables seamless integration of AI-powered or remote tools with IDA Pro to enhance the reverse engineering workflow.

- ⭐ 4,214

- MCP

- mrexodia/ida-pro-mcp