LLM evaluation platform - AI tools

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25

Autoblocks is a collaborative testing and evaluation platform for LLM-based products that automatically improves through user and expert feedback, offering comprehensive tools for monitoring, debugging, and quality assurance.

- Freemium

- From $1750

Agenta is an LLM engineering platform offering tools for prompt engineering, versioning, evaluation, and observability in a single, collaborative environment.

- Freemium

- From $49

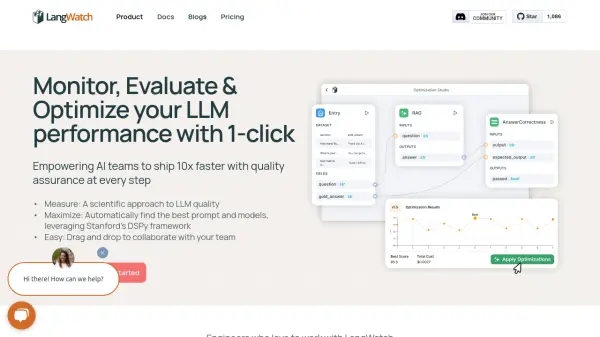

LangWatch aims to help AI teams ship 10x faster, with quality assurance at every step. It provides tools to measure and optimize LLM performance and to collaborate on it.

- Paid

- From $59

Humanloop is an enterprise-grade platform that provides tools for LLM evaluation, prompt management, and AI observability, enabling teams to develop, evaluate, and deploy trustworthy AI applications.

- Freemium

Libretto offers comprehensive LLM monitoring, automated prompt testing, and optimization tools to ensure the reliability and performance of your AI applications.

- Freemium

- From $180

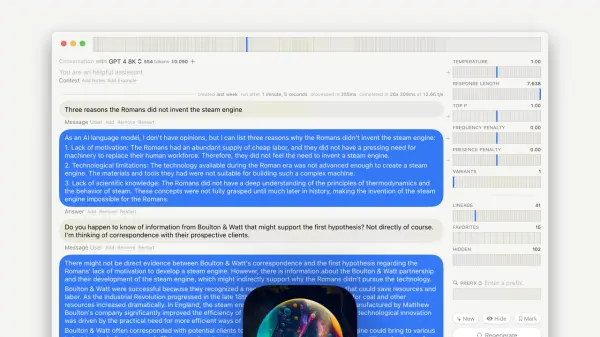

GPT-LLM Playground is a macOS application designed for advanced experimentation and testing with Large Language Models (LLMs). It offers features such as multi-model support, versioning, and custom endpoints.

- Free

Langfuse provides an open-source platform for tracing, evaluating, and managing prompts to debug and improve LLM applications.

- Freemium

- From $59

EleutherAI is a research institute focused on advancing and democratizing open-source AI, particularly in language modeling, interpretability, and alignment. They train, release, and evaluate powerful open-source LLMs.

- Free

Featured Tools

- Angel.ai: Chat with your favourite AI Girlfriend
- Sophiie AI: Your Virtual Receptionist, Perfected
- Image Upscaler: Upscale Your Photos With AI Without Losing Quality
- CapMonster Cloud: Highly efficient service for solving captchas using AI
- GoStudio: Professional Headshots Using Your Selfies
- Adola: AI-powered voice assistants for seamless business communication
- Send AI: Secure Document Processing with AI
- Producti AI: Unleash the Power of AI
- Boosted.ai: Artificial intelligence software that helps investment managers save time, improve portfolio metrics, and make better, data-driven decisions