AI model evaluation platform - AI tools

Freeplay: The All-in-One Platform for AI Experimentation, Evaluation, and Observability

Freeplay provides comprehensive tools for AI teams to run experiments, evaluate model performance, and monitor production, streamlining the development process.

- Paid

- From $500

Evidently AI: Collaborative AI observability platform for evaluating, testing, and monitoring AI-powered products

Evidently AI is a comprehensive AI observability platform that helps teams evaluate, test, and monitor LLM and ML models in production, offering data drift detection, quality assessment, and performance monitoring capabilities.

- Freemium

- From $50

Arize: Unified Observability and Evaluation Platform for AI

Arize is a comprehensive platform designed to accelerate the development and improve the production of AI applications and agents.

- Freemium

- From $50

Oumi: The Open Platform for Building, Evaluating, and Deploying AI Models

Oumi provides an open, collaborative platform for researchers and developers to build, evaluate, and deploy state-of-the-art AI models, from data preparation to production.

- Contact for Pricing

Humanloop: The LLM evals platform for enterprises to ship and scale AI with confidence

Humanloop is an enterprise-grade platform that provides tools for LLM evaluation, prompt management, and AI observability, enabling teams to develop, evaluate, and deploy trustworthy AI applications.

- Freemium

Future AGI: World’s first comprehensive evaluation and optimization platform to help enterprises achieve 99% accuracy in AI applications across software and hardware.

Future AGI is a comprehensive evaluation and optimization platform designed to help enterprises build, evaluate, and improve AI applications, aiming for high accuracy across software and hardware.

- Freemium

- From $50

Braintrust: The end-to-end platform for building world-class AI apps.

Braintrust provides an end-to-end platform for developing, evaluating, and monitoring Large Language Model (LLM) applications. It helps teams build robust AI products through iterative workflows and real-time analysis.

- Freemium

- From $249

Lisapet.ai: AI Prompt testing suite for product teams

Lisapet.ai is an AI development platform designed to help product teams prototype, test, and deploy AI features efficiently by automating prompt testing.

- Paid

- From $9

Gentrace: Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform designed for AI teams to test and automate evaluations of generative AI products and agents. It facilitates collaborative development and ensures high-quality LLM applications.

- Usage Based

teammately.ai: The AI Agent for AI Engineers that autonomously builds AI Products, Models and Agents

Teammately is an autonomous AI agent that self-iterates AI products, models, and agents to meet specific objectives, operating beyond human-only capabilities through scientific methodology and comprehensive testing.

- Freemium

-

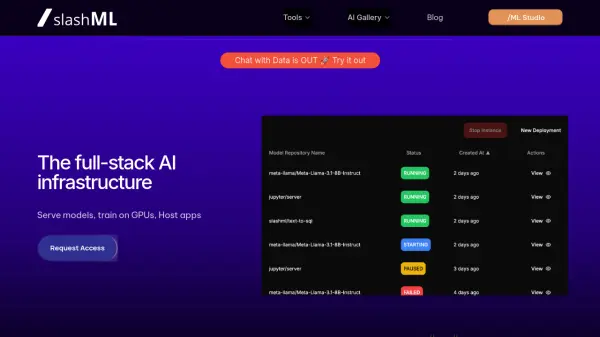

/ML: The full-stack AI infra

/ML offers a full-stack AI infrastructure for serving large language models, training multi-modal models on GPUs, and hosting AI applications such as Streamlit, Gradio, and Dash, while providing cost observability.

- Contact for Pricing

Nat.dev: An AI Playground for Everyone

Nat.dev is an online AI playground allowing users to compare various large language models (LLMs) like GPT-4, Claude 3, and Llama 3 side-by-side using the same prompt. Evaluate and experiment with different AI model responses in one interface.

- Free

Adaline: Ship reliable AI faster

Adaline is a collaborative platform for teams building with Large Language Models (LLMs), enabling efficient iteration, evaluation, deployment, and monitoring of prompts.

- Contact for Pricing

Intura: Compare, Choose, and Save on AI & LLMs

Intura helps businesses experiment with, compare, and deploy AI and LLM models side-by-side to optimize performance and cost before full-scale implementation.

- Freemium

Coherence: AI-Augmented Testing and Deployment Platform

Coherence provides AI-augmented testing for evaluating AI responses and prompts, alongside a platform for streamlined cloud deployment and infrastructure management.

- Freemium

- From $35

LastMile AI: Ship generative AI apps to production with confidence.

LastMile AI empowers developers to seamlessly transition generative AI applications from prototype to production with a robust developer platform.

- Contact for Pricing

- API

EvalsOne: Evaluate LLMs & RAG Pipelines Quickly

EvalsOne is a platform for rapidly evaluating Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines using various metrics.

- Freemium

- From $19

Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249

-

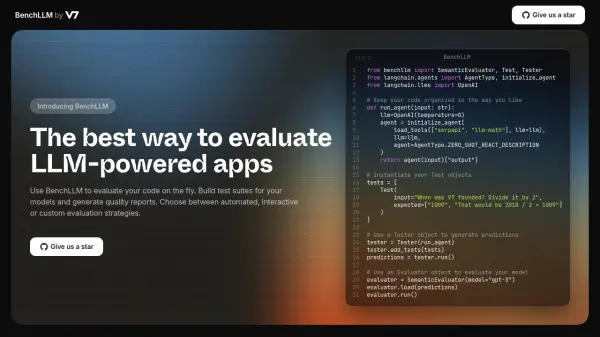

BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

AiPortalX: Discover, Compare and Leverage AI Models Effortlessly

AiPortalX is a comprehensive platform for discovering, comparing, and exploring AI models based on various criteria like task, domain, company, and country.

- Freemium

- From $15

ModelBench: No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial

- From $49

HoneyHive: AI Observability and Evaluation Platform for Building Reliable AI Products

HoneyHive is a comprehensive platform that provides AI observability, evaluation, and prompt management tools to help teams build and monitor reliable AI applications.

- Freemium

Hegel AI: Developer Platform for Large Language Model (LLM) Applications

Hegel AI provides a developer platform for building, monitoring, and improving large language model (LLM) applications, featuring tools for experimentation, evaluation, and feedback integration.

- Contact for Pricing

forefront.ai: Build with open-source AI - Your data, your models, your AI.

Forefront is a comprehensive platform that enables developers to fine-tune, evaluate, and deploy open-source AI models with a familiar experience, offering complete control and transparency over AI implementations.

- Freemium

- From $99

Autoblocks: Improve your LLM Product Accuracy with Expert-Driven Testing & Evaluation

Autoblocks is a collaborative testing and evaluation platform for LLM-based products that automatically improves through user and expert feedback, offering comprehensive tools for monitoring, debugging, and quality assurance.

- Freemium

- From $1750

AI Model Trend: Discover Trending AI Models on Replicate and Hugging Face

AI Model Trend tracks the latest and most popular AI models from Replicate and Hugging Face, providing insights into current trends.

- Free

ech0: Hybrid Human-AI Testing for Safer AI Deployments

ech0 provides comprehensive, scalable testing for AI agents, identifying security vulnerabilities, consistency issues, and policy compliance before production deployment.

- Freemium

aixblock.io: Productize AI using Decentralized Resources with Flexibility and Full Privacy Control

AIxBlock is a decentralized platform for AI development and deployment, offering access to computing power, AI models, and human validators. It ensures privacy, scalability, and cost savings through its decentralized infrastructure.

- Freemium

- From $69

Parea: Test and Evaluate your AI systems

Parea is a platform for testing, evaluating, and monitoring Large Language Model (LLM) applications, helping teams track experiments, collect human feedback, and deploy prompts confidently.

- Freemium

- From $150

Mozilla.ai: Empowering Developers with Trustworthy AI

Mozilla.ai is dedicated to making AI trustworthy, accessible, and open-source, providing tools for developers to integrate and innovate on responsible AI solutions.

- Free
