locust-mcp-server

Run Locust load tests via Model Context Protocol integration.

🚀 ⚡️ locust-mcp-server

A Model Context Protocol (MCP) server implementation for running Locust load tests. This server enables seamless integration of Locust load testing capabilities with AI-powered development environments.

✨ Features

- Simple integration with Model Context Protocol framework

- Support for headless and UI modes

- Configurable test parameters (users, spawn rate, runtime)

- Easy-to-use API for running Locust load tests

- Real-time test execution output

- HTTP/HTTPS protocol support out of the box

- Custom task scenarios support

🔧 Prerequisites

Before you begin, ensure you have the following installed:

- Python 3.13 or higher

- uv package manager (Installation guide)

📦 Installation

- Clone the repository:

git clone https://github.com/qainsights/locust-mcp-server.git

- Install the required dependencies:

uv pip install -r requirements.txt

- Set up environment variables (optional):

Create a .env file in the project root:

LOCUST_HOST=http://localhost:8089 # Default host for your tests

LOCUST_USERS=3 # Default number of users

LOCUST_SPAWN_RATE=1 # Default user spawn rate

LOCUST_RUN_TIME=10s # Default test duration
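As a rough sketch of how these defaults might be consumed, the snippet below reads the four variables from a mapping such as os.environ and falls back to the values listed above. The helper name load_locust_defaults is hypothetical and is not taken from locust_server.py; the actual server may load its settings differently. It also assumes the .env file has already been loaded into the process environment (for example with python-dotenv's load_dotenv).

```python
import os
from typing import Mapping


def load_locust_defaults(env: Mapping[str, str] = os.environ) -> dict:
    """Hypothetical helper: read LOCUST_* settings with the documented fallbacks."""
    return {
        "host": env.get("LOCUST_HOST", "http://localhost:8089"),
        # Numeric settings arrive as strings and are converted here.
        "users": int(env.get("LOCUST_USERS", "3")),
        "spawn_rate": int(env.get("LOCUST_SPAWN_RATE", "1")),
        "run_time": env.get("LOCUST_RUN_TIME", "10s"),
    }


if __name__ == "__main__":
    print(load_locust_defaults())
```

Passing the mapping as an argument keeps the helper easy to exercise without touching the real environment.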

🚀 Getting Started

- Create a Locust test script (e.g., hello.py):

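    import time

    from locust import HttpUser, task, between


    class QuickstartUser(HttpUser):
        # Each simulated user waits 1 to 5 seconds between tasks.
        wait_time = between(1, 5)

        @task
        def hello_world(self):
            self.client.get("/hello")
            self.client.get("/world")

        @task(3)  # Picked three times as often as hello_world.
        def view_items(self):
            for item_id in range(10):
                # Group all item requests under one "/item" stats entry.
                self.client.get(f"/item?id={item_id}", name="/item")
                time.sleep(1)

        def on_start(self):
            # Runs once per simulated user before any task is scheduled.
            self.client.post("/login", json={"username": "foo", "password": "bar"})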

- Configure the MCP server in your MCP client (Claude Desktop, Cursor, Windsurf, and others) using the spec below, replacing the two paths with the location of uv and of your checkout of this repository:

    {
      "mcpServers": {
        "locust": {
          "command": "/Users/naveenkumar/.local/bin/uv",
          "args": [
            "--directory",
            "/Users/naveenkumar/Gits/locust-mcp-server",
            "run",
            "locust_server.py"
          ]
        }
      }
    }

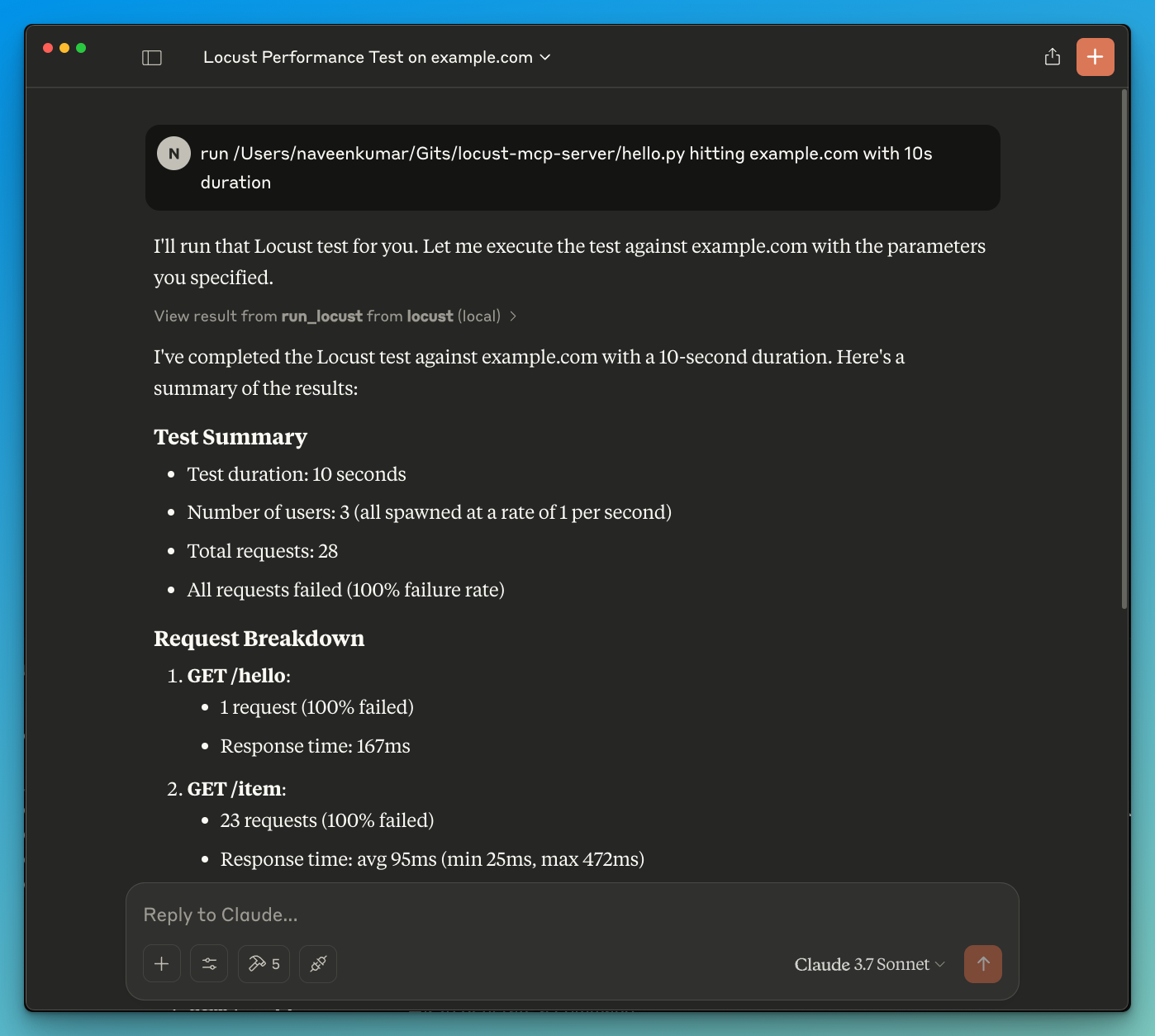
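Because the paths in that configuration are specific to one machine, it can help to generate the entry for your own setup. The following is a minimal sketch, not part of the project: build_mcp_config is a hypothetical helper, and the checkout path in the usage line is a placeholder you would replace.

```python
import json
import os
import shutil
from typing import Optional


def build_mcp_config(repo_dir: str, uv_path: Optional[str] = None) -> dict:
    """Hypothetical helper: build an mcpServers entry for a local checkout."""
    # Prefer an explicit path, then whatever `uv` resolves to on PATH,
    # and finally the bare command name.
    command = uv_path or shutil.which("uv") or "uv"
    return {
        "mcpServers": {
            "locust": {
                "command": command,
                "args": [
                    "--directory",
                    os.path.expanduser(repo_dir),
                    "run",
                    "locust_server.py",
                ],
            }
        }
    }


if __name__ == "__main__":
    # Placeholder checkout location; adjust it to your machine.
    print(json.dumps(build_mcp_config("~/locust-mcp-server"), indent=2))
```

The printed JSON can then be pasted into the client's MCP configuration file.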

- Now ask the LLM to run the test, e.g. "run locust test for hello.py". The Locust MCP server will use the following tool to start the test:

- run_locust: Run a test with configurable options for headless mode, host, runtime, users, and spawn rate
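For context, an MCP client invokes a tool by sending a JSON-RPC 2.0 tools/call request to the server. The sketch below builds such a message for run_locust. The helper name build_tool_call and the request id are illustrative only; in normal use the MCP client constructs and sends this request for you.

```python
import json


def build_tool_call(test_file: str, request_id: int = 1, **options) -> dict:
    """Illustrative helper: build an MCP tools/call request for run_locust."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "run_locust",
            # Any extra keyword arguments become tool arguments.
            "arguments": {"test_file": test_file, **options},
        },
    }


if __name__ == "__main__":
    request = build_tool_call("hello.py", users=10, runtime="30s")
    print(json.dumps(request, indent=2))
```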

📝 API Reference

Run Locust Test

    run_locust(
        test_file: str,
        headless: bool = True,
        host: str = "http://localhost:8089",
        runtime: str = "10s",
        users: int = 3,
        spawn_rate: int = 1
    )

Parameters:

- test_file: Path to your Locust test script

- headless: Run in headless mode (True) or with UI (False)

- host: Target host to load test

- runtime: Test duration (e.g., "30s", "1m", "5m")

- users: Number of concurrent users to simulate

- spawn_rate: Rate at which users are spawned
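To make the parameters concrete, here is one way they could map onto Locust's command-line flags (-f, --host, --headless, --users, --spawn-rate, --run-time). This is a sketch under assumptions, not the server's actual implementation: build_locust_command is a hypothetical helper, and it assumes the load settings are only passed in headless mode, since in UI mode they can be entered in Locust's web form.

```python
import shlex
from typing import List


def build_locust_command(
    test_file: str,
    headless: bool = True,
    host: str = "http://localhost:8089",
    runtime: str = "10s",
    users: int = 3,
    spawn_rate: int = 1,
) -> List[str]:
    """Hypothetical helper: translate run_locust arguments into a locust CLI call."""
    cmd = ["locust", "-f", test_file, "--host", host]
    if headless:
        # Assumption: load settings are only supplied for headless runs.
        cmd += [
            "--headless",
            "--users", str(users),
            "--spawn-rate", str(spawn_rate),
            "--run-time", runtime,
        ]
    return cmd


if __name__ == "__main__":
    print(shlex.join(build_locust_command("hello.py", users=10, runtime="30s")))
```

Returning the command as a list rather than a single string lets it be passed to subprocess.run without shell quoting concerns.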

✨ Use Cases

- LLM-powered results analysis

- Effective debugging with the help of an LLM

🤝 Contributing

Contributions are welcome! Please feel free to submit a Pull Request.

📄 License

This project is licensed under the MIT License - see the LICENSE file for details.

