Optuna MCP Server

A Model Context Protocol (MCP) server that automates optimization and analysis using Optuna.

Use Cases

The Optuna MCP server supports use cases such as:

- Automated hyperparameter optimization by LLMs
- Interactive analysis of Optuna's optimization results via a chat interface
- Optimization of the inputs and outputs of other MCP tools

For details, see the Examples section.

Installation

The Optuna MCP server can be installed using uv or Docker.

This section explains how to install the Optuna MCP server, using Claude Desktop as an example MCP client.

Usage with uv

Before starting the installation process, install uv from Astral.

Then, add the Optuna MCP server configuration to the MCP client.

To include it in Claude Desktop, go to Claude > Settings > Developer > Edit Config, open claude_desktop_config.json, and add the following:

    {
      "mcpServers": {
        "Optuna": {
          "command": "/path/to/uvx",
          "args": [
            "optuna-mcp"
          ]
        }
      }
    }

Additionally, you can specify the Optuna storage with the --storage argument to persist the results.

    {
      "mcpServers": {
        "Optuna": {
          "command": "/path/to/uvx",
          "args": [
            "optuna-mcp",
            "--storage",
            "sqlite:///optuna.db"
          ]
        }
      }
    }
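If you would rather script this edit than paste JSON by hand, the sketch below merges the Optuna entry into an already parsed configuration while leaving other servers untouched. The helper name `add_optuna_server` and its arguments are illustrative and not part of optuna-mcp; the path to uvx remains a placeholder, as above.

```python
import json


def add_optuna_server(config, uvx_path, storage=None):
    """Return a copy of an MCP client config with the Optuna server entry added.

    `config` is the parsed content of claude_desktop_config.json.
    `storage` is an optional Optuna storage URL passed via --storage.
    """
    args = ["optuna-mcp"]
    if storage is not None:
        args += ["--storage", storage]

    # Copy the top level and the server map so the caller's dict is not mutated.
    updated = dict(config)
    servers = dict(updated.get("mcpServers", {}))
    servers["Optuna"] = {"command": uvx_path, "args": args}
    updated["mcpServers"] = servers
    return updated


if __name__ == "__main__":
    existing = {"mcpServers": {"other": {"command": "other-server", "args": []}}}
    merged = add_optuna_server(existing, "/path/to/uvx", "sqlite:///optuna.db")
    print(json.dumps(merged, indent=2))
```

Writing the result back to the config file is left out on purpose, since the file location depends on the client and operating system.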

After adding this, restart the Claude Desktop application. For more information about Claude Desktop, check out the quickstart page.

Usage with Docker

You can also run the Optuna MCP server using Docker. Make sure Docker is installed and running on your machine, then add the following configuration to the MCP client:

    {
      "mcpServers": {
        "Optuna": {
          "command": "docker",
          "args": [
            "run",
            "-i",
            "--rm",
            "--net=host",
            "-v",
            "/PATH/TO/LOCAL/DIRECTORY/WHICH/INCLUDES/DB/FILE:/app/workspace",
            "optuna/optuna-mcp:latest",
            "--storage",
            "sqlite:////app/workspace/optuna.db"
          ]
        }
      }
    }
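The storage URL in the Docker configuration has four slashes, while the uv one has three. SQLite URLs in this style consist of the prefix `sqlite:///` followed by the file path, so an absolute path such as the one inside the container contributes a fourth slash. A small sketch of that rule, using hypothetical helper names:

```python
from pathlib import PurePosixPath


def sqlite_storage_url(db_path):
    """Build a SQLite storage URL from a POSIX-style file path.

    The prefix is always "sqlite:///"; an absolute path brings its own
    leading slash, which is why absolute paths show four slashes.
    """
    return "sqlite:///" + str(PurePosixPath(db_path))


def container_db_path(mount_point, db_filename):
    """Path of the database file as seen from inside the container."""
    return str(PurePosixPath(mount_point) / db_filename)


if __name__ == "__main__":
    # Relative path, as in the uv configuration.
    print(sqlite_storage_url("optuna.db"))
    # Absolute path under the mounted volume, as in the Docker configuration.
    print(sqlite_storage_url(container_db_path("/app/workspace", "optuna.db")))
```

The database file itself lives in the host directory mounted with `-v`, so it survives after the container is removed by `--rm`.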

Tools provided by Optuna MCP

The Optuna MCP server provides the following tools, which expose Optuna's basic building blocks: studies, trials, visualizations, and the dashboard. MCP clients receive the list of tools and the details of each one, so users do not need to remember them.

Study

- create_study - Create a new Optuna study with the given study_name and directions. If the study already exists, it is simply loaded.
  - study_name: name of the study (string, required).
  - directions: the directions of optimization (list of literal strings minimize/maximize, optional).
- set_sampler - Set the sampler for the study.
  - name: the name of the sampler (string, required).
- get_all_study_names - Get all study names from the storage.
- set_metric_names - Set metric_names, the labels used to distinguish what each objective value is.
  - metric_names: the list of metric names for each objective (list of strings, required).
- get_metric_names - Get metric_names.
  - No parameters required.
- get_directions - Get the directions of the study.
  - No parameters required.
- get_trials - Get all trials in CSV format.
  - No parameters required.
- best_trial - Get the best trial.
  - No parameters required.
- best_trials - Return trials located at the Pareto front in the study.
  - No parameters required.
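For intuition about what "located at the Pareto front" means for best_trials, the sketch below filters a list of objective vectors down to the non-dominated ones, honoring a minimize/maximize direction per objective. It is a plain-Python illustration of the concept, not the server's or Optuna's implementation, and the function names are made up for this example.

```python
def dominates(a, b, directions):
    """True if `a` is at least as good as `b` in every objective and strictly better in one."""
    at_least_as_good = True
    strictly_better = False
    for va, vb, direction in zip(a, b, directions):
        if direction == "maximize":
            va, vb = -va, -vb  # flip the sign so that smaller is always better
        if va > vb:
            at_least_as_good = False
        elif va < vb:
            strictly_better = True
    return at_least_as_good and strictly_better


def pareto_front(values, directions):
    """Keep only the objective vectors that no other vector dominates."""
    return [v for v in values if not any(dominates(o, v, directions) for o in values)]


if __name__ == "__main__":
    trials = [(1.0, 5.0), (2.0, 3.0), (3.0, 4.0), (4.0, 1.0)]
    # (3.0, 4.0) is dominated by (2.0, 3.0) when both objectives are minimized.
    print(pareto_front(trials, ["minimize", "minimize"]))
```

With a single objective the front collapses to the best value, which is what best_trial reports.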

Trial

- ask - Suggest new parameters using Optuna.
  - search_space: the search space for Optuna (dictionary, required).
- tell - Report the result of a trial.
  - trial_number: the trial number (integer, required).
  - values: the result of the trial (float or list of floats, required).
- set_trial_user_attr - Set user attributes for a trial.
  - trial_number: the trial number (integer, required).
  - key: the key of the user attribute (string, required).
  - value: the value of the user attribute (any type, required).
- get_trial_user_attrs - Get user attributes in a trial.
  - trial_number: the trial number (integer, required).
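The exact schema of search_space is defined by the tool description that the MCP client reads, so you normally never write it yourself. As an illustration only, the sketch below assumes that each parameter name maps to the JSON form Optuna uses when serializing a distribution (a name plus attributes). Treat that schema as an assumption and check the tool description before relying on it; the helper names are hypothetical.

```python
import json


def float_param(low, high, log=False, step=None):
    """Describe a float parameter in Optuna's serialized-distribution style (assumed schema)."""
    if low > high:
        raise ValueError("low must not exceed high")
    return {
        "name": "FloatDistribution",
        "attributes": {"low": low, "high": high, "log": log, "step": step},
    }


def categorical_param(choices):
    """Describe a categorical parameter in the same assumed style."""
    if not choices:
        raise ValueError("choices must not be empty")
    return {"name": "CategoricalDistribution", "attributes": {"choices": list(choices)}}


if __name__ == "__main__":
    # Two floats in [-1, 1], mirroring a request such as "suggest x and y in [-1, 1]".
    search_space = {"x": float_param(-1.0, 1.0), "y": float_param(-1.0, 1.0)}
    print(json.dumps(search_space, indent=2))
```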

Visualization

- plot_optimization_history - Return the optimization history plot as an image.
  - target: index to specify which value to display (integer, optional).
  - target_name: target's name to display on the axis label (string, optional).
- plot_hypervolume_history - Return the hypervolume history plot as an image.
  - reference_point: the reference point used to calculate the hypervolume, with one coordinate per objective (list of floats, required).
- plot_pareto_front - Return the Pareto front plot as an image for multi-objective optimization.
  - target_names: objective name list used as the axis titles (list of strings, optional).
  - include_dominated_trials: a flag to include the objective values of dominated trials (boolean, optional).
  - targets: a list of indices to specify the objective values to display (list of integers, optional).
- plot_contour - Return the contour plot as an image.
  - params: parameter list to visualize (list of strings, optional).
  - target: an index to specify the value to display (integer, required).
  - target_name: target's name to display on the color bar (string, required).
- plot_parallel_coordinate - Return the parallel coordinate plot as an image.
  - params: parameter list to visualize (list of strings, optional).
  - target: an index to specify the value to display (integer, required).
  - target_name: target's name to display on the axis label and the legend (string, required).
- plot_slice - Return the slice plot as an image.
  - params: parameter list to visualize (list of strings, optional).
  - target: an index to specify the value to display (integer, required).
  - target_name: target's name to display on the axis label (string, required).
- plot_param_importances - Return the parameter importances plot as an image.
  - params: parameter list to visualize (list of strings, optional).
  - target: an index to specify the value to display (integer/null, optional).
  - target_name: target's name to display on the legend (string, required).
- plot_edf - Return the EDF plot as an image.
  - target: an index to specify the value to display (integer, required).
  - target_name: target's name to display on the axis label (string, required).
- plot_timeline - Return the timeline plot as an image.
  - No parameters required.
- plot_rank - Return the rank plot as an image.
  - params: parameter list to visualize (list of strings, optional).
  - target: an index to specify the value to display (integer, required).
  - target_name: target's name to display on the color bar (string, required).
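To make the reference_point argument of plot_hypervolume_history more concrete: the hypervolume is the size of the region that the current trials dominate, bounded by the reference point. The sketch below computes it for the two-objective case where both objectives are minimized. It is a simplified illustration written for this README, not the routine Optuna uses internally.

```python
def hypervolume_2d(points, reference_point):
    """Area dominated by `points` and bounded by `reference_point` (both objectives minimized)."""
    ref_x, ref_y = reference_point
    # Only points strictly better than the reference point in both objectives contribute.
    inside = sorted(p for p in points if p[0] < ref_x and p[1] < ref_y)

    area = 0.0
    best_y = ref_y
    for x, y in inside:
        # Sweeping left to right, a point adds area only if it improves the second objective.
        if y < best_y:
            area += (ref_x - x) * (best_y - y)
            best_y = y
    return area


if __name__ == "__main__":
    front = [(1, 3), (2, 2), (3, 1)]
    print(hypervolume_2d(front, (4, 4)))  # 6.0
```

A reference point that is worse than every trial in every objective keeps all trials inside the measured region; trials beyond it contribute nothing.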

Web Dashboard

- launch_optuna_dashboard - Launch the Optuna dashboard.
  - port: server port (integer, optional, default: 58080).

Examples

- Optimizing the 2D-Sphere function

- Starting the Optuna dashboard and analyzing optimization results

- Optimizing the FFmpeg encoding parameters

- Optimizing the Cookie Recipe

- Optimizing the Matplotlib Configuration

Optimizing the 2D-Sphere Function

Here we present a simple example of optimizing the 2D-Sphere function, along with example prompts and the summary of the LLM responses.

| User prompt | Output in Claude |
|---|---|
| (Launch Claude Desktop) | |
| Please create an Optuna study named "Optimize-2D-Sphere" for minimization. | |
| Please suggest two float parameters x, y in [-1, 1]. | |
| Please report the objective value x**2 + y**2. To calculate the value, please use the JavaScript interpreter and do not round the values. | |
| Please suggest another parameter set and evaluate it. | |
| Please plot the optimization history so far. | |
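The prompts above walk through an ask-and-tell loop: ask for parameters, evaluate the objective outside the optimizer, then tell the result back. The sketch below reproduces that loop for the 2D-Sphere function with a hypothetical `RandomSearchStudy` class standing in for an Optuna study, so that it runs with the standard library alone. In Optuna itself the corresponding calls are `Study.ask` and `Study.tell`, and the sampler is smarter than uniform random search. The `(low, high)` tuple format for the search space is a simplification for this example.

```python
import random


class RandomSearchStudy:
    """Hypothetical stand-in for an Optuna study; samples uniformly at random."""

    def __init__(self, seed=0):
        self._rng = random.Random(seed)
        self._params = {}  # trial_number -> suggested parameters
        self._values = {}  # trial_number -> reported objective value

    def ask(self, search_space):
        """Suggest parameters; `search_space` maps names to (low, high) bounds."""
        trial_number = len(self._params)
        params = {
            name: self._rng.uniform(low, high)
            for name, (low, high) in search_space.items()
        }
        self._params[trial_number] = params
        return trial_number, params

    def tell(self, trial_number, value):
        """Report the objective value for a previously asked trial."""
        if trial_number not in self._params:
            raise KeyError(f"unknown trial number: {trial_number}")
        self._values[trial_number] = value

    def best_trial(self):
        """Return (trial_number, params, value) of the smallest reported value."""
        number = min(self._values, key=self._values.get)
        return number, self._params[number], self._values[number]


def sphere(x, y):
    """2D-Sphere objective; its minimum is 0 at x = y = 0."""
    return x**2 + y**2


if __name__ == "__main__":
    study = RandomSearchStudy(seed=42)
    space = {"x": (-1.0, 1.0), "y": (-1.0, 1.0)}
    for _ in range(20):
        number, params = study.ask(space)
        study.tell(number, sphere(**params))
    print(study.best_trial())
```

Keeping the evaluation outside the study is what lets the chat client compute the objective with whatever tool it has available, such as the JavaScript interpreter mentioned in the prompt.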

Starting the Optuna Dashboard and Analyzing Optimization Results

You can also start the Optuna dashboard via the MCP server to analyze the optimization results interactively.

| User prompt | Output in Claude |
|---|---|
| Please launch the Optuna dashboard. | |

By default, the Optuna dashboard will be launched on port 58080.

You can access it by navigating to http://localhost:58080 in your web browser as shown below:

Optuna dashboard provides various visualizations to analyze the optimization results, such as optimization history, parameter importances, and more.

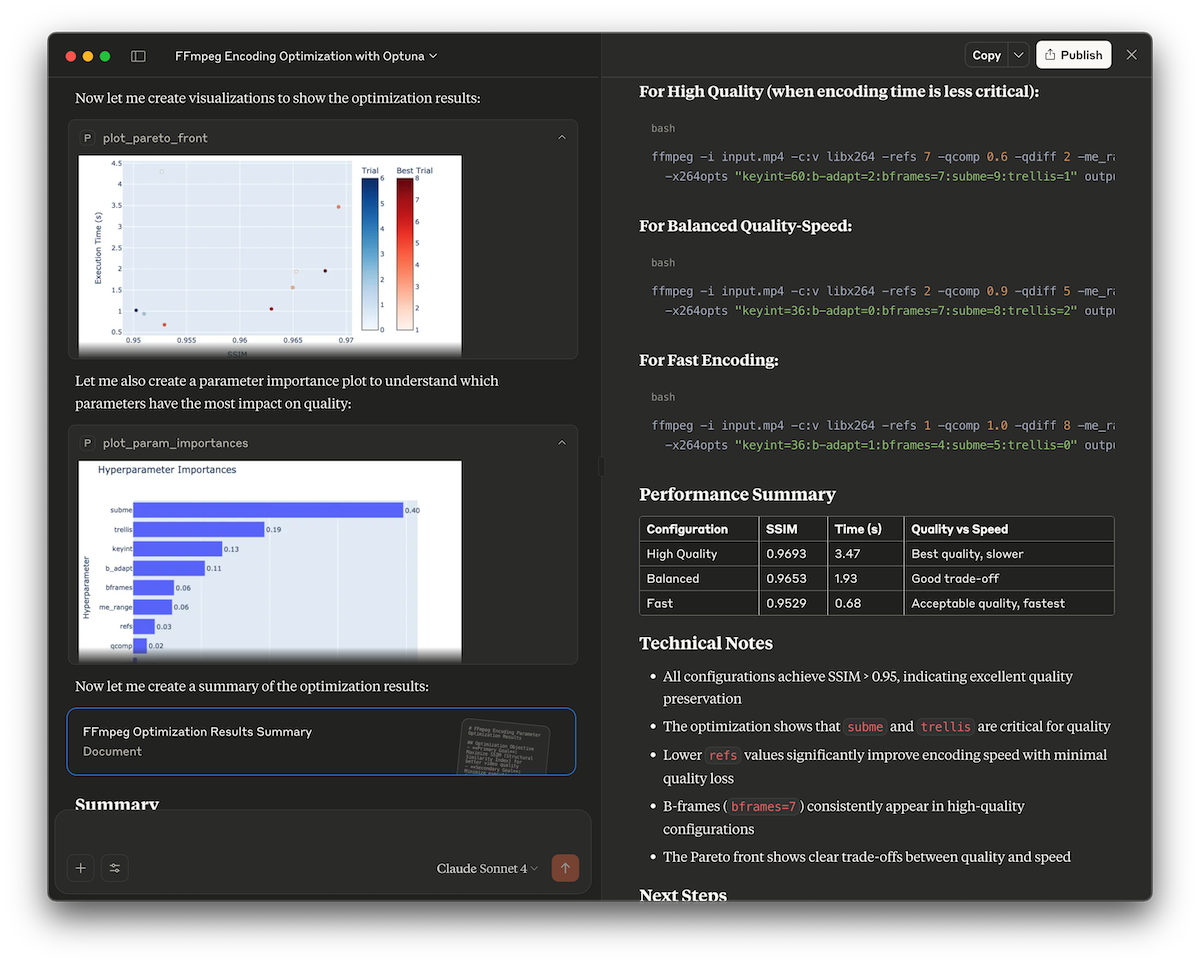

Optimizing the FFmpeg Encoding Parameters

This demo showcases how to use the Optuna MCP server to automatically find optimal FFmpeg encoding parameters. It optimizes x264 encoding options to maximize video quality (measured by the SSIM score) while keeping encoding time reasonable.

Check out examples/ffmpeg for details.

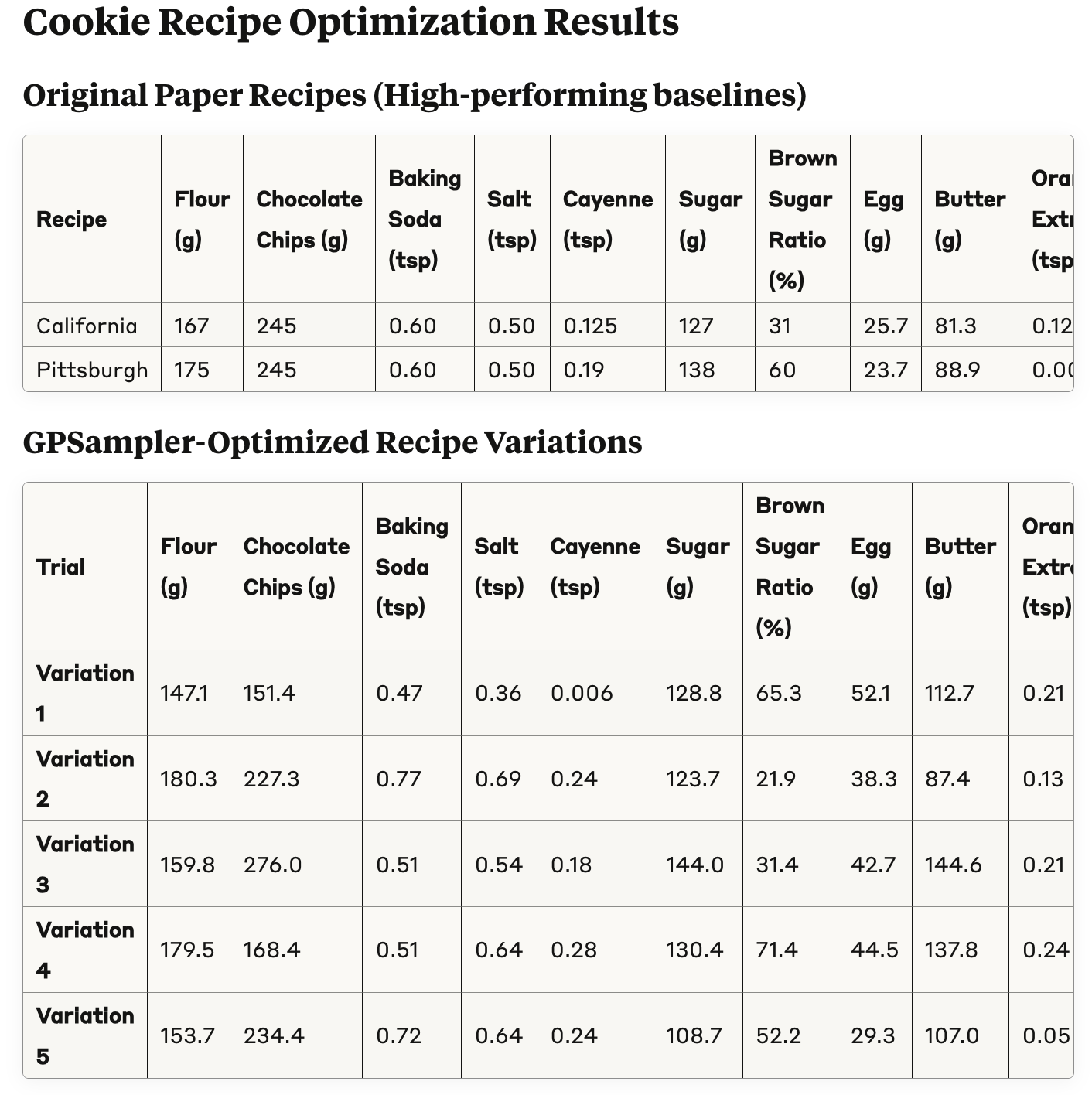
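The actual setup lives in examples/ffmpeg. As a rough sketch of what evaluating one trial in such a setup could involve, the code below builds two FFmpeg command lines: one that encodes with libx264 and one that scores the result against the source with FFmpeg's `ssim` filter. It assumes that the tuned options are the x264 preset and CRF, which is an assumption made for illustration and not taken from the example. The function names are hypothetical, and the commands are only constructed, not executed.

```python
X264_PRESETS = (
    "ultrafast", "superfast", "veryfast", "faster", "fast",
    "medium", "slow", "slower", "veryslow", "placebo",
)


def encode_command(source, output, preset, crf):
    """Command line that encodes `source` with libx264 using the given preset and CRF."""
    if preset not in X264_PRESETS:
        raise ValueError(f"unknown x264 preset: {preset}")
    if not 0 <= crf <= 51:
        raise ValueError("crf is expected to be in [0, 51] for 8-bit x264")
    return [
        "ffmpeg", "-y", "-i", source,
        "-c:v", "libx264", "-preset", preset, "-crf", str(crf),
        output,
    ]


def ssim_command(encoded, reference):
    """Command line that logs the SSIM between two videos and discards the output."""
    return ["ffmpeg", "-i", encoded, "-i", reference, "-lavfi", "ssim", "-f", "null", "-"]


if __name__ == "__main__":
    print(" ".join(encode_command("input.mp4", "trial.mp4", "medium", 23)))
    print(" ".join(ssim_command("trial.mp4", "input.mp4")))
```

In an optimization loop, the preset and CRF would come from ask, while the measured SSIM (and, if desired, the encoding time) would be reported back with tell.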

Optimizing the Cookie Recipe

In this example, we will optimize a cookie recipe, referencing the paper titled "Bayesian Optimization for a Better Dessert".

Check out examples/cookie-recipe for details.

Optimizing the Matplotlib Configuration

This example optimizes a Matplotlib configuration.

Check out examples/auto-matplotlib for details.

License

MIT License (see LICENSE).
