LINE Bot MCP Server

MCP server connecting LINE Messaging API with AI agents


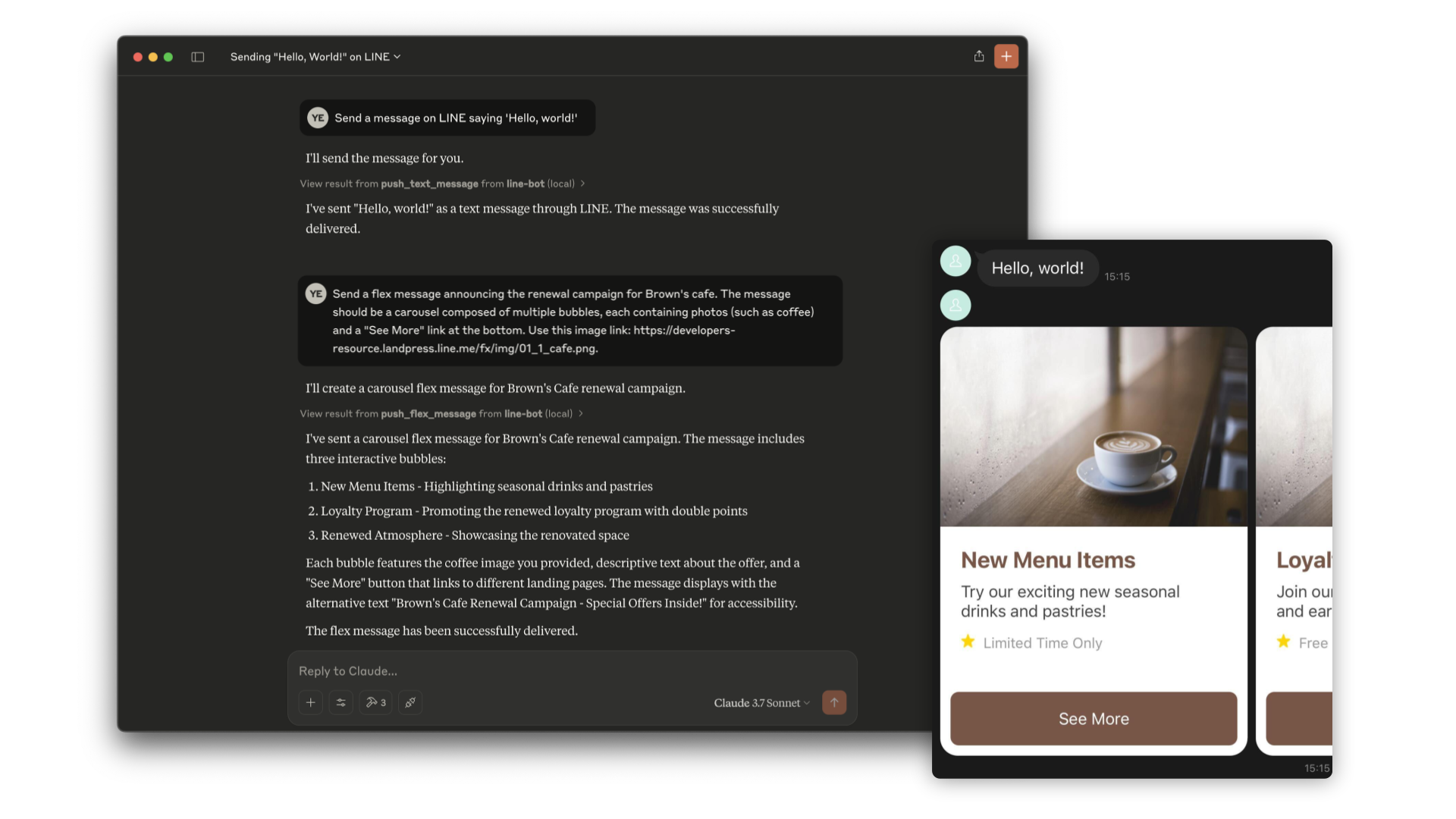


Model Context Protocol (MCP) server implementation that integrates the LINE Messaging API to connect an AI Agent to the LINE Official Account.

> [!NOTE]
> This repository is provided as a preview version. While we offer it for experimental purposes, please be aware that it may not include complete functionality or comprehensive support.

Tools

- push_text_message

- Push a simple text message to a user via LINE.

- Inputs:

  - `userId` (string?): The user ID to receive a message. Defaults to `DESTINATION_USER_ID`. Either `userId` or `DESTINATION_USER_ID` must be set.
  - `message.text` (string): The plain text content to send to the user.

- push_flex_message

- Push a highly customizable flex message to a user via LINE.

- Inputs:

  - `userId` (string?): The user ID to receive a message. Defaults to `DESTINATION_USER_ID`. Either `userId` or `DESTINATION_USER_ID` must be set.
  - `message.altText` (string): Alternative text shown when flex message cannot be displayed.
  - `message.contents` (any): The contents of the flex message. This is a JSON object that defines the layout and components of the message.
  - `message.contents.type` (enum): Type of the container. 'bubble' for single container, 'carousel' for multiple swipeable bubbles.

- broadcast_text_message

- Broadcast a simple text message via LINE to all users who have followed your LINE Official Account.

- Inputs:

  - `message.text` (string): The plain text content to send to the users.

- broadcast_flex_message

- Broadcast a highly customizable flex message via LINE to all users who have added your LINE Official Account.

- Inputs:

  - `message.altText` (string): Alternative text shown when flex message cannot be displayed.
  - `message.contents` (any): The contents of the flex message. This is a JSON object that defines the layout and components of the message.
  - `message.contents.type` (enum): Type of the container. 'bubble' for single container, 'carousel' for multiple swipeable bubbles.

- get_profile

- Get detailed profile information of a LINE user including display name, profile picture URL, status message and language.

- Inputs:

  - `userId` (string?): The ID of the user whose profile you want to retrieve. Defaults to `DESTINATION_USER_ID`.

- get_message_quota

- Get the message quota and consumption of the LINE Official Account. This shows the monthly message limit and current usage.

- Inputs:

- None

- get_rich_menu_list

- Get the list of rich menus associated with your LINE Official Account.

- Inputs:

- None

- delete_rich_menu

- Delete a rich menu from your LINE Official Account.

- Inputs:

  - `richMenuId` (string): The ID of the rich menu to delete.

- set_rich_menu_default

- Set a rich menu as the default rich menu.

- Inputs:

  - `richMenuId` (string): The ID of the rich menu to set as default.

- cancel_rich_menu_default

- Cancel the default rich menu.

- Inputs:

- None

- create_rich_menu

- Create a rich menu based on the given actions, generate and upload its image, and set it as the default rich menu.

- Inputs:

  - `chatBarText` (string): Text displayed in the chat bar, also used as the rich menu name.
  - `actions` (array): The actions of the rich menu. You can specify from 1 to 6 actions. Each action can be one of the following types:
    - `postback`: For sending a postback action
    - `message`: For sending a text message
    - `uri`: For opening a URL
    - `datetimepicker`: For opening a date/time picker
    - `camera`: For opening the camera
    - `cameraRoll`: For opening the camera roll
    - `location`: For sending the current location
    - `richmenuswitch`: For switching to another rich menu
    - `clipboard`: For copying text to clipboard
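As a rough illustration of the input shapes listed above, the sketch below models the `push_flex_message` arguments and checks the documented 1 to 6 action limit for `create_rich_menu`. The type names and the `checkRichMenuActions` helper are hypothetical and are not taken from the server's source; only the field names, the container types, and the action types come from the tool descriptions.

```typescript
// Hypothetical types mirroring the documented inputs; not the server's own definitions.
type FlexContainerType = "bubble" | "carousel";

interface PushFlexMessageArgs {
  userId?: string; // optional: DESTINATION_USER_ID is used when omitted
  message: {
    altText: string;
    contents: { type: FlexContainerType; [key: string]: unknown };
  };
}

// A minimal single-bubble flex message with one line of text.
const pushFlexArgs: PushFlexMessageArgs = {
  message: {
    altText: "Order update",
    contents: {
      type: "bubble",
      body: {
        type: "box",
        layout: "vertical",
        contents: [{ type: "text", text: "Your order has shipped." }],
      },
    },
  },
};

// Action types listed for create_rich_menu.
const RICH_MENU_ACTION_TYPES: readonly string[] = [
  "postback", "message", "uri", "datetimepicker", "camera",
  "cameraRoll", "location", "richmenuswitch", "clipboard",
];

// Returns a list of problems; an empty list means the input passes these checks.
function checkRichMenuActions(actions: { type: string }[]): string[] {
  const problems: string[] = [];
  if (actions.length < 1 || actions.length > 6) {
    problems.push(`expected 1 to 6 actions, got ${actions.length}`);
  }
  for (const action of actions) {
    if (RICH_MENU_ACTION_TYPES.indexOf(action.type) === -1) {
      problems.push(`unknown action type: ${action.type}`);
    }
  }
  return problems;
}
```

An AI agent normally fills in these arguments itself; a check like this is mainly useful when scripting or testing tool calls by hand.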

Installation (Using npx)

Requirements:

- Node.js v20 or later

Step 1: Create LINE Official Account

This MCP server uses a LINE Official Account. If you do not have one, please create it by following these instructions.

If you already have a LINE Official Account, enable the Messaging API for it by following these instructions.

Step 2: Configure AI Agent

Please add the following configuration for an AI Agent like Claude Desktop or Cline.

Set the environment variables or arguments as follows:

- `CHANNEL_ACCESS_TOKEN`: (required) Channel Access Token. You can confirm this by following these instructions.
- `DESTINATION_USER_ID`: (optional) The default user ID of the recipient. If the Tool's input does not include `userId`, `DESTINATION_USER_ID` is required. You can confirm this by following these instructions.

    {
      "mcpServers": {
        "line-bot": {
          "command": "npx",
          "args": [
            "@line/line-bot-mcp-server"
          ],
          "env": {
            "CHANNEL_ACCESS_TOKEN": "FILL_HERE",
            "DESTINATION_USER_ID": "FILL_HERE"
          }
        }
      }
    }
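The fallback between a tool's `userId` input and `DESTINATION_USER_ID` described above can be sketched as follows. This is a minimal illustration of the documented rule, not the server's actual code; `resolveUserId` and its `env` parameter are hypothetical names introduced here.

```typescript
// Hypothetical helper illustrating the documented fallback; not the server's code.
function resolveUserId(
  inputUserId: string | undefined,
  env: { DESTINATION_USER_ID?: string }
): string {
  // Prefer the userId passed in the tool call, then the configured default.
  const userId = inputUserId || env.DESTINATION_USER_ID;
  if (!userId) {
    throw new Error("Either userId or DESTINATION_USER_ID must be set.");
  }
  return userId;
}
```

In practice this means `DESTINATION_USER_ID` can be left out of the configuration only if every tool call that targets a user supplies its own `userId`.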

Installation (Using Docker)

Step 1: Create LINE Official Account

This MCP server uses a LINE Official Account. If you do not have one, please create it by following these instructions.

If you already have a LINE Official Account, enable the Messaging API for it by following these instructions.

Step 2: Build line-bot-mcp-server image

Clone this repository:

git clone git@github.com:line/line-bot-mcp-server.git

Build the Docker image:

docker build -t line/line-bot-mcp-server .

Step 3: Configure AI Agent

Please add the following configuration for an AI Agent like Claude Desktop or Cline.

Set the environment variables or arguments as follows:

- `mcpServers.args`: (required) The path to `line-bot-mcp-server`.
- `CHANNEL_ACCESS_TOKEN`: (required) Channel Access Token. You can confirm this by following these instructions.
- `DESTINATION_USER_ID`: (optional) The default user ID of the recipient. If the Tool's input does not include `userId`, `DESTINATION_USER_ID` is required. You can confirm this by following these instructions.

    {
      "mcpServers": {
        "line-bot": {
          "command": "docker",
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "CHANNEL_ACCESS_TOKEN",
            "-e",
            "DESTINATION_USER_ID",
            "line/line-bot-mcp-server"
          ],
          "env": {
            "CHANNEL_ACCESS_TOKEN": "FILL_HERE",
            "DESTINATION_USER_ID": "FILL_HERE"
          }
        }
      }
    }
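To make the relationship between `args` and `env` in the Docker configuration easier to see, the sketch below builds the same entry programmatically. The `McpServerEntry` type and `dockerServerEntry` function are hypothetical names used only for illustration. The sketch assumes the MCP client launches `docker` with the variables from the `env` block set, so each `-e NAME` flag (with no `=value`) forwards that variable into the container.

```typescript
// Hypothetical shape of one "mcpServers" entry, based on the JSON above.
interface McpServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

// Builds the Docker-based entry. Each "-e NAME" without a value asks
// `docker run` to copy NAME from the environment of the launching process.
function dockerServerEntry(image: string, env: Record<string, string>): McpServerEntry {
  const args: string[] = ["run", "-i", "--rm"];
  for (const name of Object.keys(env)) {
    args.push("-e", name);
  }
  args.push(image);
  return { command: "docker", args, env };
}

const lineBotEntry = dockerServerEntry("line/line-bot-mcp-server", {
  CHANNEL_ACCESS_TOKEN: "FILL_HERE",
  DESTINATION_USER_ID: "FILL_HERE",
});
```

Keeping the values only in `env` means the token never appears in the `docker run` argument list itself.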

Local Development with Inspector

You can use the MCP Inspector to test and debug the server locally.

Prerequisites

- Clone the repository:

git clone git@github.com:line/line-bot-mcp-server.git

cd line-bot-mcp-server

- Install dependencies:

npm install

- Build the project:

npm run build

Run the Inspector

After building the project, you can start the MCP Inspector:

    npx @modelcontextprotocol/inspector node dist/index.js \
      -e CHANNEL_ACCESS_TOKEN="YOUR_CHANNEL_ACCESS_TOKEN" \
      -e DESTINATION_USER_ID="YOUR_DESTINATION_USER_ID"

This will start the MCP Inspector interface where you can interact with the LINE Bot MCP Server tools and test their functionality.

Versioning

This project follows semantic versioning.

Contributing

Please check CONTRIBUTING before making a contribution.
