imagen3-mcp

Google Imagen 3.0 image generation service with MCP protocol integration.


Imagen3-MCP

An image generation tool based on Google's Imagen 3.0, served over MCP (Model Context Protocol).

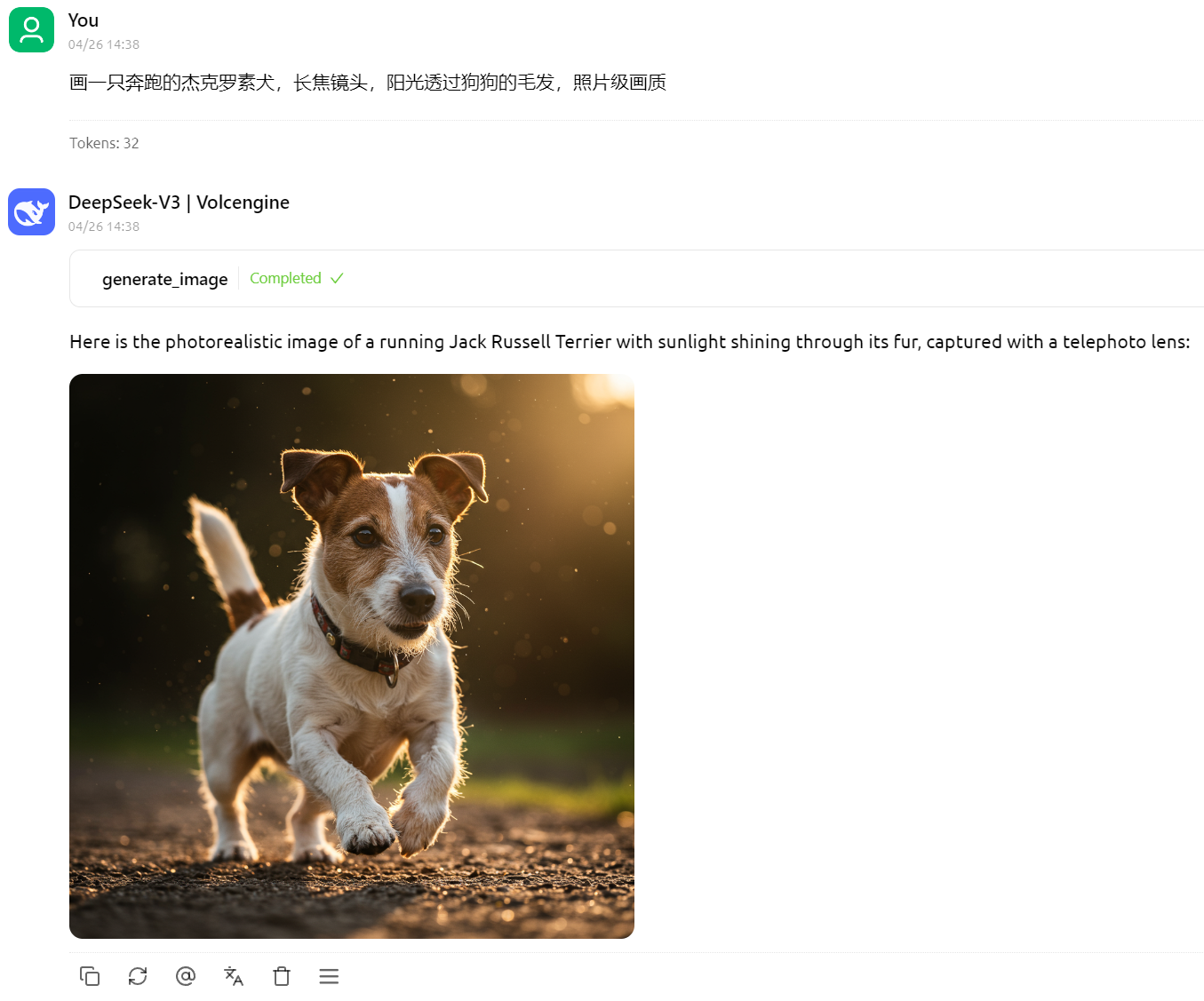

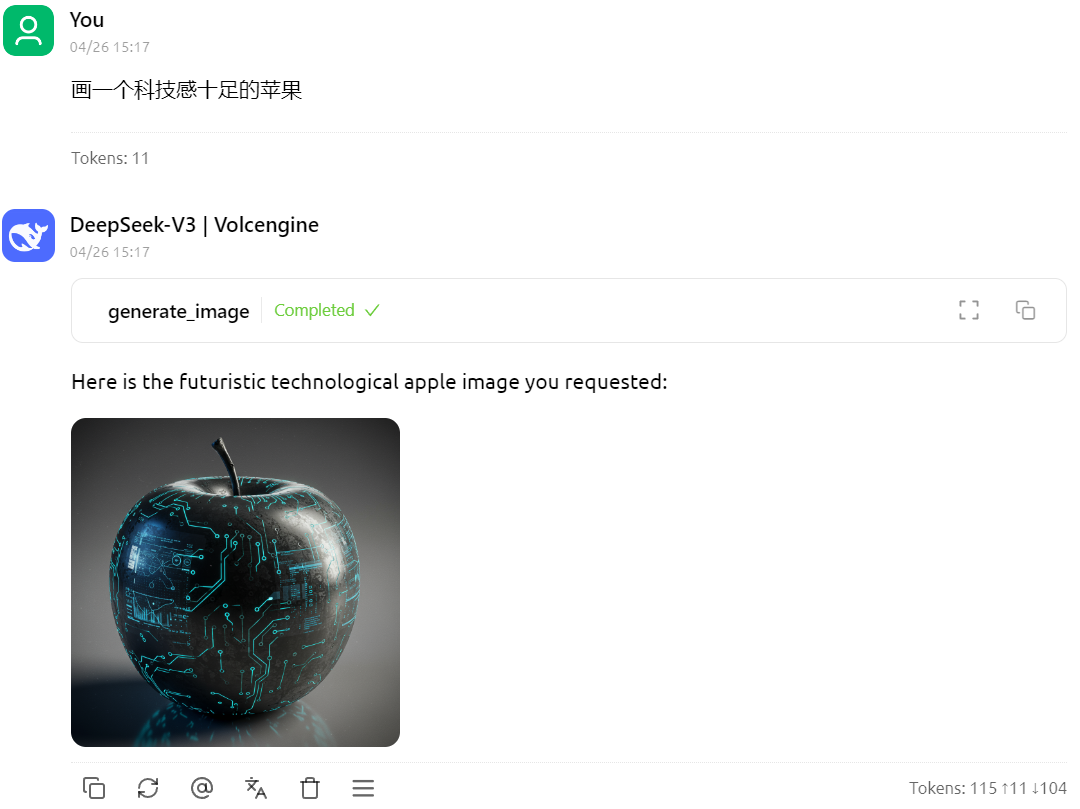

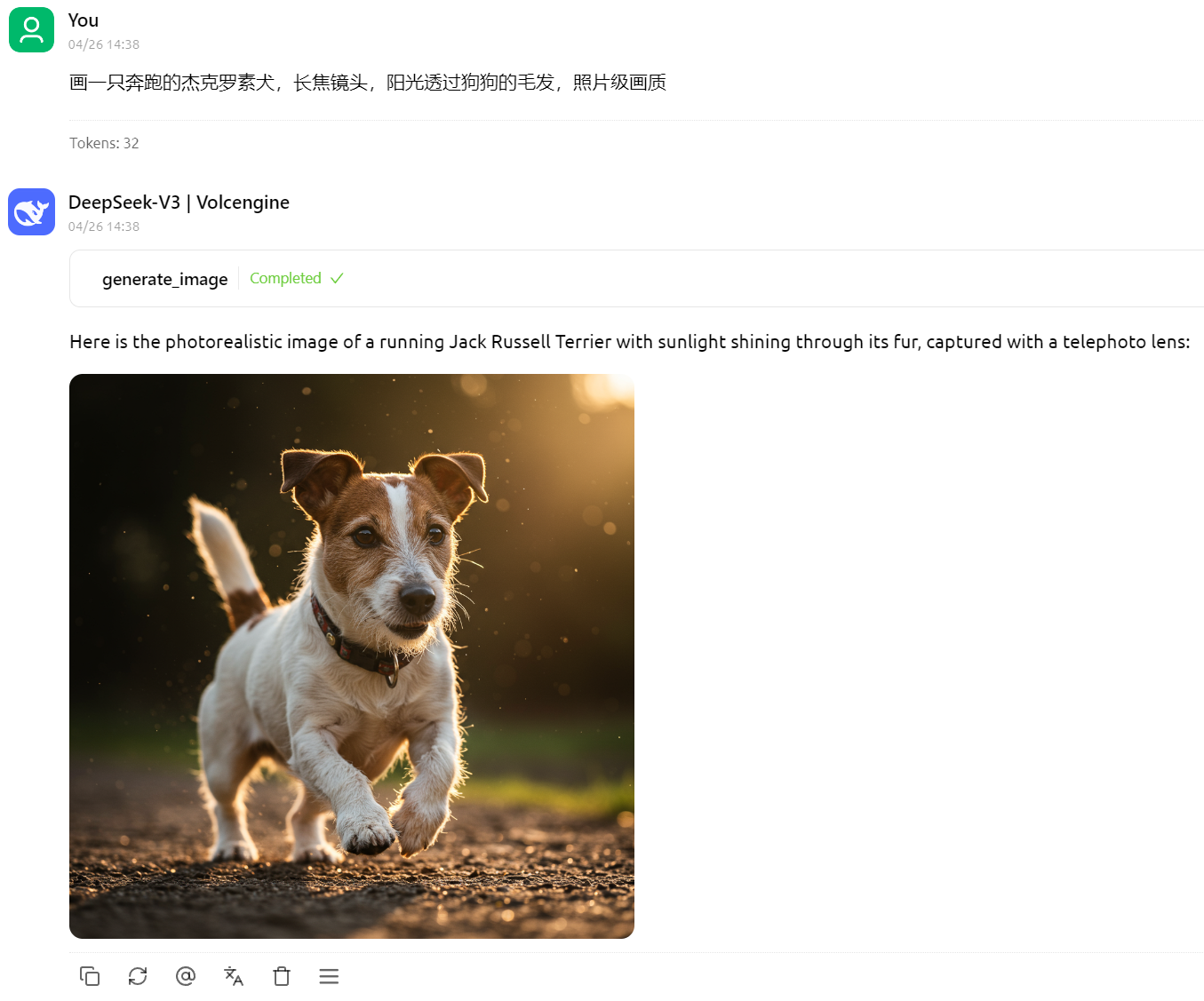

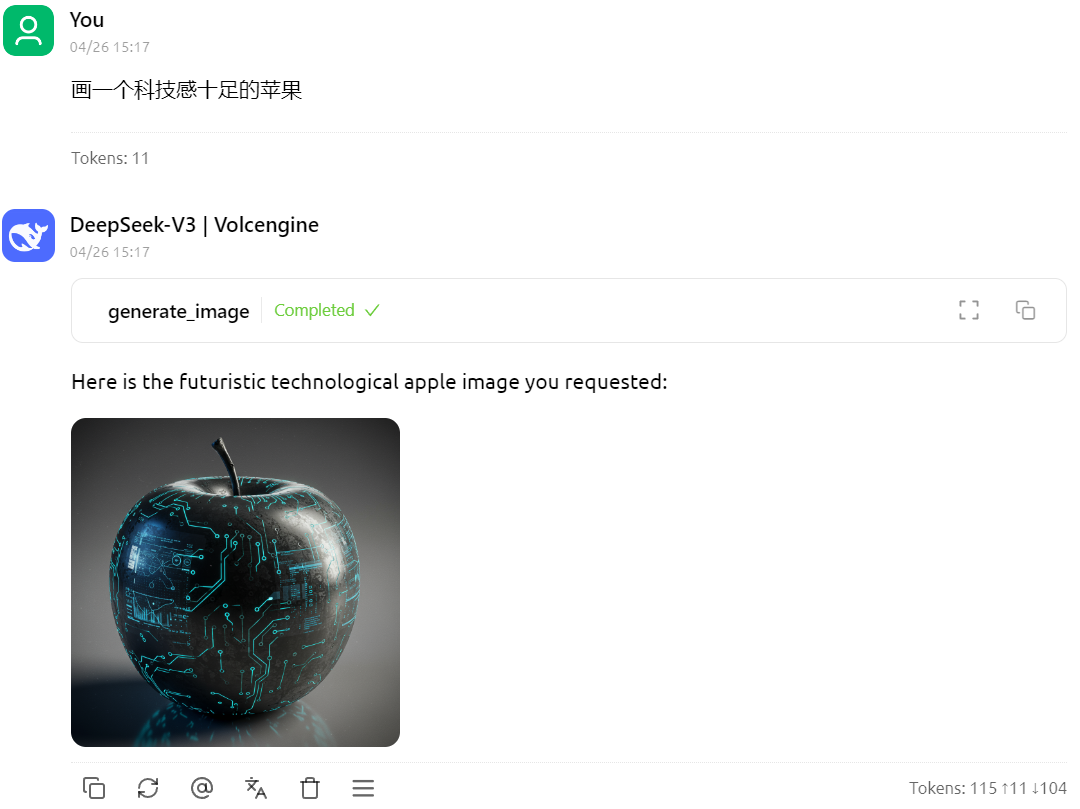

Examples

A running Jack Russell Terrier, telephoto lens, sunlight filtering through the dog's fur, photorealistic quality

A high-tech apple

Requirements

- A valid Google Gemini API key

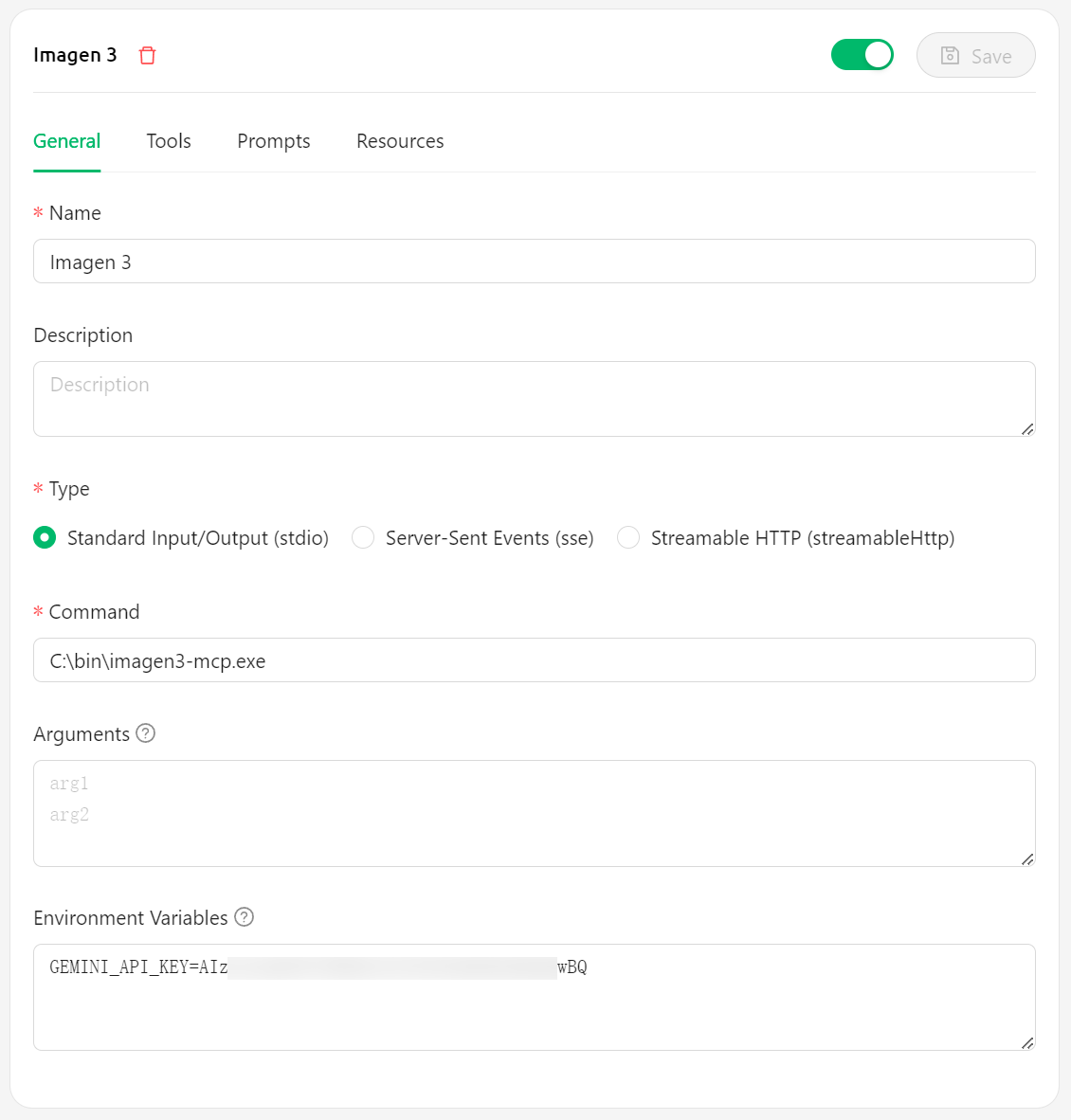

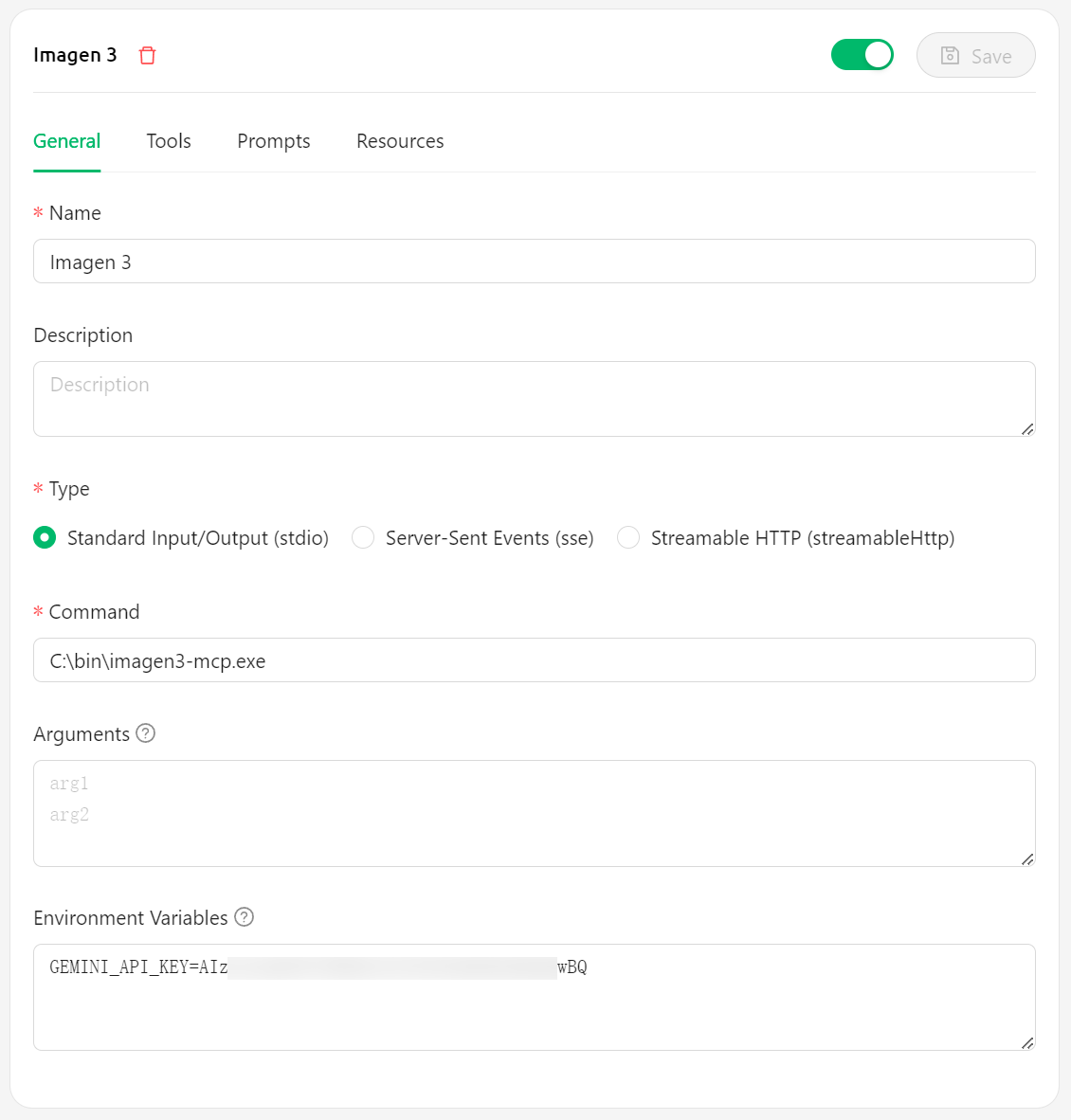

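Installation Steps: Cherry Studio

- Download the latest executable from GitHub Releases.
- Place the downloaded executable anywhere on your system, e.g. `C:\bin\imagen3-mcp.exe`.
- Configure it in Cherry Studio:
  - Fill in the Command field with the executable path, e.g. `C:\bin\imagen3-mcp.exe`.
  - Enter your Gemini API key in the `GEMINI_API_KEY` environment variable.
  - [Optional] Enter a proxy URL in the `BASE_URL` environment variable, e.g. `https://lingxi-proxy.hamflx.dev/api/provider/google` (this address works around the GFW, but it cannot get around Google's IP-based restrictions, so you still need a VPN).
  - [Optional] Set the `SERVER_LISTEN_ADDR` environment variable: the IP address the server listens on (defaults to `127.0.0.1`).
  - [Optional] Set the `SERVER_PORT` environment variable: the port the server listens on, also used in image URLs (defaults to `9981`).
  - [Optional] Set the `IMAGE_RESOURCE_SERVER_ADDR` environment variable: the server address used in image URLs (defaults to `127.0.0.1`). This is useful when the server runs in a container or on a remote machine.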

Installation Steps: Cursor

{
  "mcpServers": {
    "imagen3": {
      "command": "C:\\bin\\imagen3-mcp.exe",
      "env": {
        "GEMINI_API_KEY": "<GEMINI_API_KEY>"
        // Optional environment variables (add a comma after the line above when enabling any):
        // "BASE_URL": "<PROXY_URL>",
        // "SERVER_LISTEN_ADDR": "0.0.0.0", // Example: Listen on all interfaces
        // "SERVER_PORT": "9981",
        // "IMAGE_RESOURCE_SERVER_ADDR": "your.domain.com" // Example: Use a domain name for image URLs
      }
    }
  }
}

License

MIT

Imagen3-MCP (English)

An image generation tool based on Google's Imagen 3.0, served over MCP (Model Context Protocol).

Examples

A running Jack Russell Terrier, telephoto lens, sunlight filtering through the dog's fur, photorealistic quality

A high-tech apple

Requirements

- Valid Google Gemini API key

Installation Steps: Cherry Studio

- Download the latest executable from GitHub Releases.
- Place the downloaded executable anywhere on your system, e.g. `C:\bin\imagen3-mcp.exe`.
- Configure it in Cherry Studio:
  - Fill in the Command field with the executable path, e.g. `C:\bin\imagen3-mcp.exe`.
  - Enter your Gemini API key in the `GEMINI_API_KEY` environment variable.
  - [Optional] Enter a proxy URL in the `BASE_URL` environment variable, e.g. `https://your-proxy.com`.
  - [Optional] Set the `SERVER_LISTEN_ADDR` environment variable: the IP address the server listens on (defaults to `127.0.0.1`).
  - [Optional] Set the `SERVER_PORT` environment variable: the port the server listens on, also used in image URLs (defaults to `9981`).
  - [Optional] Set the `IMAGE_RESOURCE_SERVER_ADDR` environment variable: the server address used in image URLs (defaults to `127.0.0.1`). Useful if the server runs in a container or on a remote machine.
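To make the interaction between the optional variables concrete, here is a minimal Python sketch of how the documented defaults could be resolved and combined into an image URL. It is not the server's actual code: the `ServerSettings`, `load_settings`, and `image_url` names, the `http` scheme, and the `/images/` path are all assumptions made for illustration. Only the variable names and their defaults come from the list above.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ServerSettings:
    listen_addr: str  # SERVER_LISTEN_ADDR: address the server binds to
    port: int         # SERVER_PORT: listening port, reused in image URLs
    image_host: str   # IMAGE_RESOURCE_SERVER_ADDR: host shown in image URLs


def load_settings(env: Optional[Mapping[str, str]] = None) -> ServerSettings:
    """Resolve the optional variables, falling back to the documented defaults."""
    env = os.environ if env is None else env
    return ServerSettings(
        listen_addr=env.get("SERVER_LISTEN_ADDR", "127.0.0.1"),
        port=int(env.get("SERVER_PORT", "9981")),
        image_host=env.get("IMAGE_RESOURCE_SERVER_ADDR", "127.0.0.1"),
    )


def image_url(settings: ServerSettings, filename: str) -> str:
    # ASSUMPTION: the http scheme and the /images/ path are hypothetical;
    # the README only says which host and port appear in image URLs.
    return f"http://{settings.image_host}:{settings.port}/images/{filename}"


if __name__ == "__main__":
    # Local setup: every variable left at its default.
    print(image_url(load_settings({}), "example.png"))

    # Container or remote setup: bind to all interfaces, advertise a domain.
    remote = load_settings({
        "SERVER_LISTEN_ADDR": "0.0.0.0",
        "IMAGE_RESOURCE_SERVER_ADDR": "your.domain.com",
    })
    print(image_url(remote, "example.png"))
```

The sketch shows why the listen address and the image address are separate settings: a server bound to `0.0.0.0` inside a container still has to hand clients a URL whose host they can reach from outside.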

Installation Steps: Cursor

{
  "mcpServers": {
    "imagen3": {
      "command": "C:\\bin\\imagen3-mcp.exe",
      "env": {
        "GEMINI_API_KEY": "<GEMINI_API_KEY>"
        // Optional environment variables (add a comma after the line above when enabling any):
        // "BASE_URL": "<PROXY_URL>",
        // "SERVER_LISTEN_ADDR": "0.0.0.0", // Example: Listen on all interfaces
        // "SERVER_PORT": "9981",
        // "IMAGE_RESOURCE_SERVER_ADDR": "your.domain.com" // Example: Use a domain name for image URLs
      }
    }
  }
}
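The configuration above mixes `//` comments into JSON, which strict JSON parsers reject, and enabling an optional entry also requires adding a comma after the `GEMINI_API_KEY` line. If the client turns out to need strict JSON, one way to avoid hand-editing is to generate the file. The following Python sketch does that; `build_cursor_config` is a hypothetical helper name, and where Cursor expects the resulting file is not covered here.

```python
import json


def build_cursor_config(command, api_key, **optional_env):
    """Build the mcpServers entry as a plain dict (hypothetical helper)."""
    env = {"GEMINI_API_KEY": api_key}
    # Include only the optional variables that were actually supplied;
    # values are stringified because environment variables are strings.
    env.update({k: str(v) for k, v in optional_env.items() if v is not None})
    return {"mcpServers": {"imagen3": {"command": command, "env": env}}}


if __name__ == "__main__":
    config = build_cursor_config(
        r"C:\bin\imagen3-mcp.exe",
        "<GEMINI_API_KEY>",
        SERVER_PORT=9981,
        IMAGE_RESOURCE_SERVER_ADDR="your.domain.com",
        BASE_URL=None,  # left unset, so it is omitted from the output
    )
    # json.dumps escapes the Windows backslashes and emits no comments.
    print(json.dumps(config, indent=2))
```

Generating the file this way keeps the commas and backslash escaping correct regardless of which optional variables are enabled.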

License

MIT


Related MCPs

Discover similar Model Context Protocol servers

@nanana-ai/mcp-server-nano-banana

MCP server for Nanana AI image generation using Gemini's nano banana model.

@nanana-ai/mcp-server-nano-banana serves as an MCP (Model Context Protocol) compatible server for facilitating image generation and transformation powered by the Gemini nano banana model. It enables clients like Claude Desktop to interact with Nanana AI, processing text prompts to generate images or transform existing images. The server can be easily configured with API tokens and integrated into desktop applications. Users can manage credentials, customize endpoints, and monitor credit usage seamlessly.

- ⭐ 3

- MCP

- nanana-app/mcp-server-nano-banana

openai-gpt-image-mcp

MCP-compatible server for image generation and editing via OpenAI APIs.

Provides a Model Context Protocol (MCP) tool server that interfaces with OpenAI's GPT-4o/gpt-image-1 APIs to generate and edit images from text prompts. Supports advanced image editing operations including inpainting, outpainting, and compositing with customizable options. Integrates with MCP-compatible clients such as Claude Desktop, VSCode, Cursor, and Windsurf. Offers both base64 and file output for generated images, with automatic file handling for large images.

- ⭐ 70

- MCP

- SureScaleAI/openai-gpt-image-mcp

Outsource MCP

Unified MCP server for multi-provider AI text and image generation

Outsource MCP is a Model Context Protocol server that bridges AI applications with multiple model providers via a single unified interface. It enables AI tools and clients to access over 20 major providers for both text and image generation, streamlining model selection and API integration. Built on FastMCP and Agno agent frameworks, it supports flexible configuration and is compatible with MCP-enabled AI tools. Authentication is provider-specific, and all interactions use a simple standardized API format.

- ⭐ 26

- MCP

- gwbischof/outsource-mcp

OpenAPI-MCP

Dockerized MCP server that transforms OpenAPI/Swagger specs into MCP-compatible tools.

OpenAPI-MCP is a dockerized server that reads Swagger/OpenAPI specification files and generates corresponding Model Context Protocol (MCP) toolsets. It enables MCP-compatible clients to interact dynamically with APIs described by OpenAPI specs, automatically generating the necessary tool schemas and facilitating secure API key handling. The solution supports both local and remote API specs, offers filtering by tags and operations, and can be easily deployed using Docker.

- ⭐ 150

- MCP

- ckanthony/openapi-mcp

Replicate Flux MCP

MCP-compatible server for high-quality image and SVG generation via Replicate models.

Replicate Flux MCP is an advanced Model Context Protocol (MCP) server designed to enable AI assistants to generate high-quality raster images and vector graphics. It leverages Replicate's Flux Schnell model for image synthesis and Recraft V3 SVG model for vector output, supporting seamless integration with AI platforms like Cursor, Claude Desktop, Smithery, and Glama.ai. Users can generate images and SVGs by simply providing natural language prompts, with support for parameter customization, batch processing, and variant creation.

- ⭐ 66

- MCP

- awkoy/replicate-flux-mcp

Amazon Bedrock MCP Server

Model Context Protocol server for AI image generation via Amazon Bedrock's Nova Canvas.

Amazon Bedrock MCP Server implements the Model Context Protocol to enable AI image generation using Amazon's Nova Canvas model. It provides deterministic output through seed control, negative prompts, and configurable quality and image dimensions. It supports secure, flexible AWS credential management, integration with Claude Desktop, and input validation, giving developers and integrated applications reproducible image generation workflows.

- ⭐ 22

- MCP

- zxkane/mcp-server-amazon-bedrock
