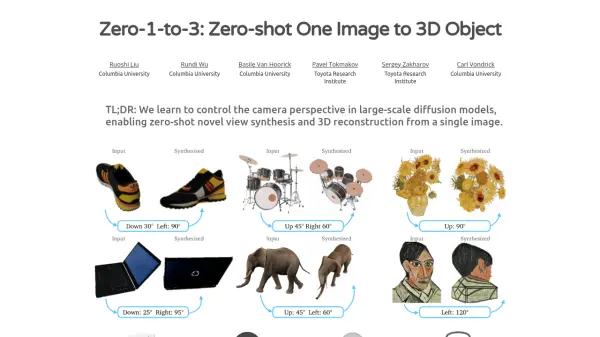

What is Zero-1-to-3?

Zero-1-to-3 introduces a framework capable of altering the camera viewpoint of an object using only a single RGB image. It addresses the challenge of novel view synthesis in this under-constrained scenario by utilizing the geometric understanding inherent in large-scale diffusion models trained on natural images. The core technology is a conditional diffusion model designed to learn control over relative camera viewpoints.

This model, initially trained on a synthetic dataset, learns to generate new images of the same object under specified camera transformations. Despite its synthetic training data, Zero-1-to-3 demonstrates remarkable zero-shot generalization capabilities, performing well on unfamiliar datasets and diverse real-world images, including artistic renderings like paintings. Furthermore, this viewpoint-conditioned diffusion approach can be employed for the task of reconstructing a full 3D model from just one input image.

Features

- Novel View Synthesis: Generates new images of an object from different camera perspectives based on a single input image.

- Single Image 3D Reconstruction: Reconstructs the 3D geometry and texture of an object from one image.

- Zero-Shot Generalization: Applies effectively to out-of-distribution datasets and real-world images without specific retraining.

- Viewpoint Control: Allows specifying the desired camera transformation to generate the new view.

- Diffusion Model Based: Leverages the geometric priors learned by large-scale diffusion models.

Use Cases

- Creating 3D models from single photographs.

- Generating multiple views of an object for product visualization.

- Visualizing objects from different angles for design or analysis.

- Reconstructing 3D scenes or objects from existing 2D images.

- Generating assets for virtual reality or augmented reality applications.

Related Queries

Helpful for people in the following professions

Featured Tools

Join Our Newsletter

Stay updated with the latest AI tools, news, and offers by subscribing to our weekly newsletter.