Prompt evaluation tools - AI tools

Promptotype: The platform for structured prompt engineering

Promptotype is a platform designed for structured prompt engineering, enabling users to develop, test, and monitor LLM tasks efficiently.

- Freemium
- From $6

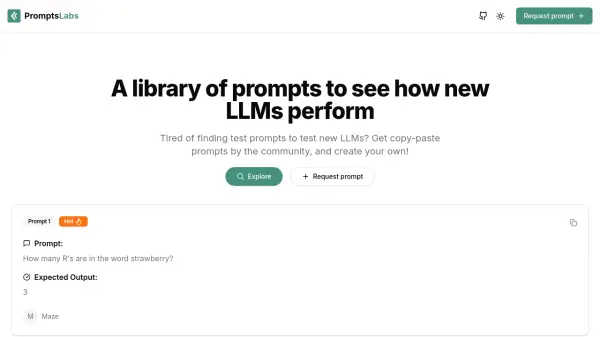

PromptsLabs: A library of prompts for testing LLMs

PromptsLabs is a community-driven platform that provides copy-and-paste prompts for testing the performance of new LLMs. Users can explore and contribute to a growing collection of prompts.

- Free

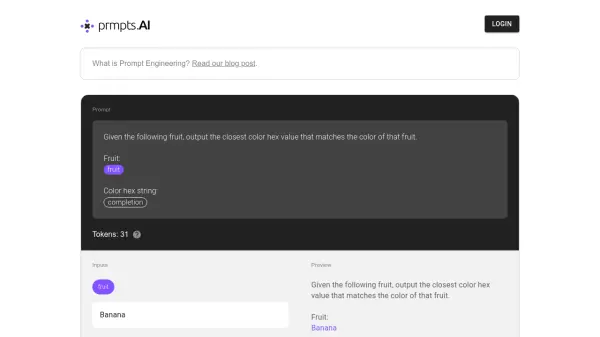

prmpts.ai: AI prompt interaction and execution platform

prmpts.ai offers an interface for crafting, testing, and running prompts with AI models to generate specific text-based outputs.

- Other

Lisapet.ai: AI prompt testing suite for product teams

Lisapet.ai is an AI development platform designed to help product teams prototype, test, and deploy AI features efficiently by automating prompt testing.

- Paid
- From $9

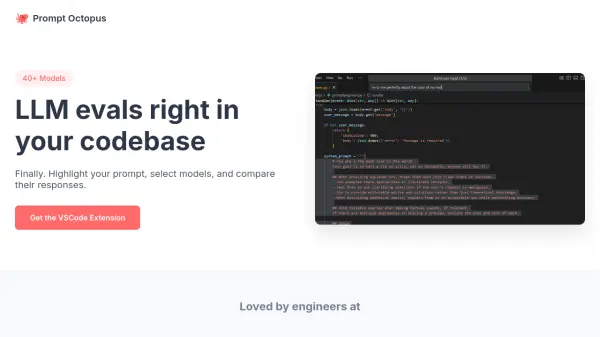

Prompt Octopus: LLM evaluations directly in your codebase

Prompt Octopus is a VS Code extension that lets developers select prompts, choose from 40+ LLMs, and compare responses side by side within their codebase.

- Freemium
- From $10

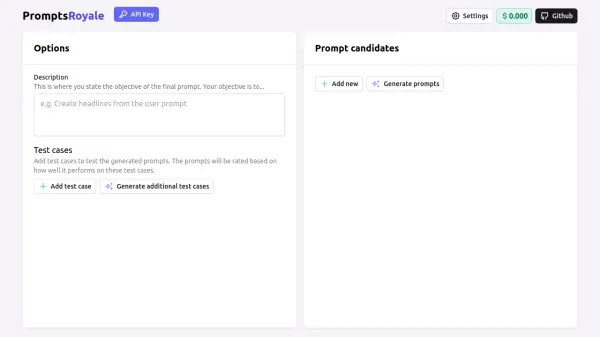

PromptsRoyale: Optimize your prompts with AI-powered testing

PromptsRoyale refines and evaluates AI prompts through objective-based testing and scoring to help identify the best-performing prompts.

- Free

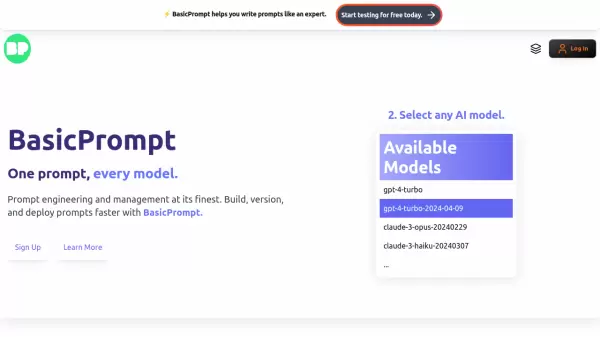

BasicPrompt: One prompt, every model

BasicPrompt is a prompt engineering and management platform that enables users to build, test, deploy, and share prompts across multiple AI models through a unified interface.

- Freemium
- From $29

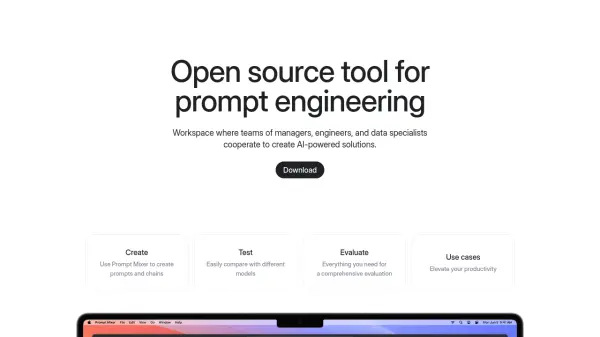

Prompt Mixer: Open-source tool for prompt engineering

Prompt Mixer is a desktop application that lets teams create, test, and manage AI prompts and chains across different language models, with version control and built-in evaluation tools.

- Freemium
- From $29

Promptmetheus: Forge better LLM prompts for your AI applications and workflows

Promptmetheus is a prompt engineering IDE that helps developers and teams create, test, and optimize language model prompts, with support for 100+ LLMs and popular inference APIs.

- Freemium
- From $29

Parea: Test and evaluate your AI systems

Parea is a platform for testing, evaluating, and monitoring large language model (LLM) applications, helping teams track experiments, collect human feedback, and deploy prompts confidently.

- Freemium
- From $150
