SpaceBridge-MCP

A Model Context Protocol server for seamless AI-assisted issue management via SpaceBridge.


IMPORTANT: This project is deprecated. The SpaceBridge MCP server is now available over HTTPS. See the instructions at https://spacebridge.io


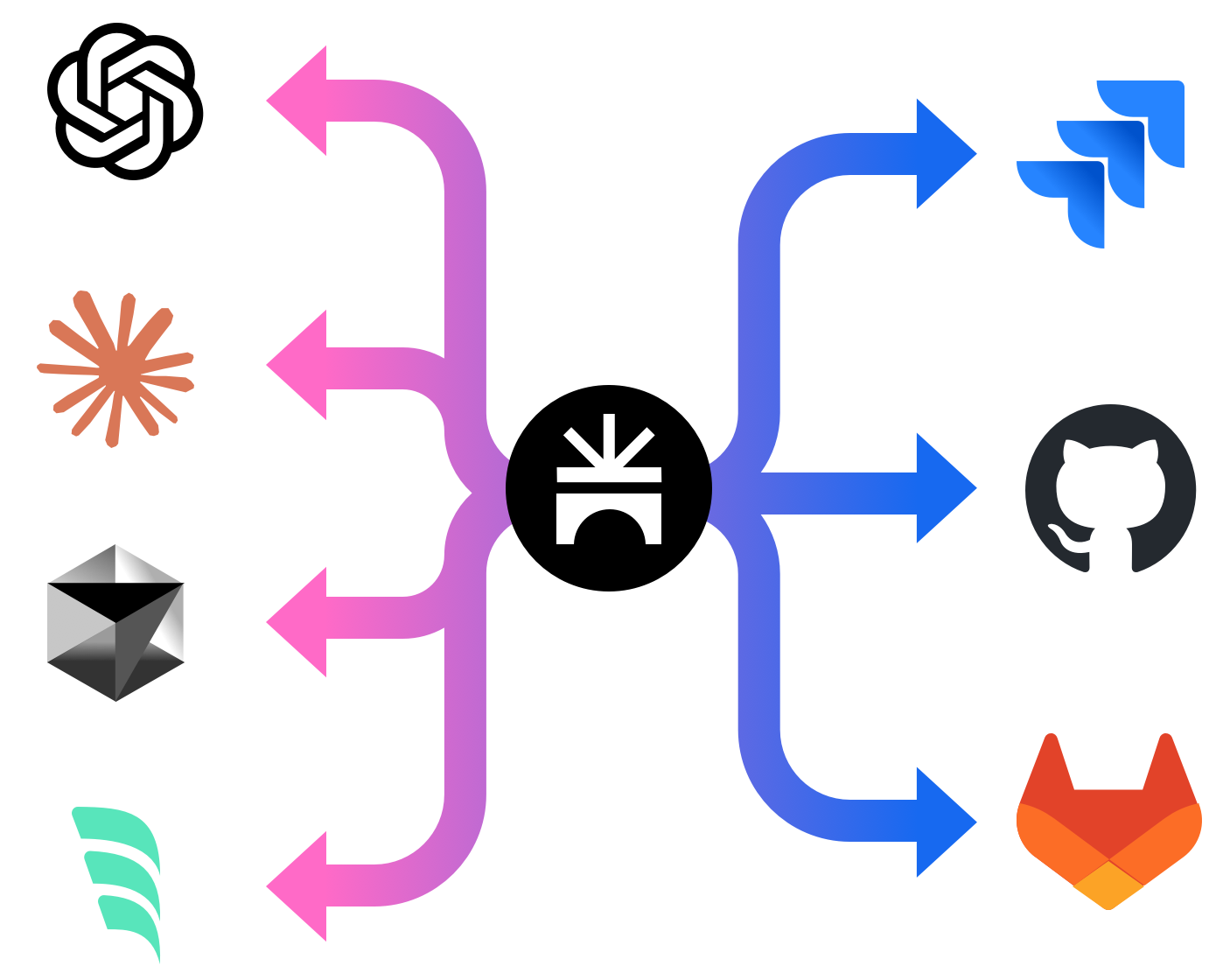

A Model Context Protocol (MCP) server that integrates with the SpaceBridge REST API (https://spacebridge.io/docs) to automate issue management across connected issue trackers.

Overview

SpaceBridge-MCP is a Model Context Protocol (MCP) server designed to integrate AI development tools (like Claude Code, Windsurf, Cursor) with the SpaceBridge issue aggregation platform. It lets developers interact with their issue trackers directly from their coding environment through their AI assistant.

Core Benefits:

- Reduced Context Switching: Manage issues (search, create, view) without leaving the development environment.

- Automated Issue Tracking: Facilitates workflows where AI assistants can automatically find or create relevant issues associated with code changes (e.g., for commit messages).

- Enhanced AI Context: Provides AI assistants with access to issue details (via get_issue_by_id), enabling more context-aware code generation and analysis.

- Centralized Interaction: Leverages SpaceBridge to interact with multiple underlying issue trackers (Jira, GitHub Issues, etc.) through a single interface.

Functionality:

The server exposes MCP tools and resources that translate natural language requests from an AI assistant into SpaceBridge API calls. Key features include intelligent duplicate checking (using similarity search and LLM comparison) before issue creation and flexible handling of organization/project context.

Features

- Resource: get_issue_by_id: Retrieves details for a specific issue using its SpaceBridge ID.

- Tool: search_issues: Searches for issues based on a query string using either full-text or similarity search.

- Tool: create_issue: Creates a new issue. Before creation, it performs a similarity search for potentially duplicate issues and uses an LLM to compare the top results against the new issue's content. If a likely duplicate is found, it returns the existing issue's ID; otherwise, it creates the new issue.

- Tool: update_issue: Updates an existing issue.
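The create_issue flow described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the server's actual code: `Candidate`, `find_or_create`, and the three callables are hypothetical stand-ins for the SpaceBridge similarity search, the LLM comparison, and the issue-creation call, and `top_n=3` is an arbitrary choice for "the top results".

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    """A similarity-search hit (hypothetical shape, for illustration only)."""
    issue_id: str
    title: str
    score: float


def find_or_create(
    title: str,
    description: str,
    similarity_search: Callable[[str], List[Candidate]],
    is_duplicate: Callable[[str, Candidate], bool],
    create: Callable[[str, str], str],
    top_n: int = 3,  # assumed cut-off for "top results"
) -> str:
    """Return an existing issue ID if a likely duplicate is found, else create one."""
    new_text = f"{title}\n{description}"
    # Step 1: similarity search for potentially duplicate issues.
    hits = sorted(similarity_search(new_text), key=lambda c: c.score, reverse=True)
    # Step 2: compare only the top results against the new issue's content.
    for candidate in hits[:top_n]:
        if is_duplicate(new_text, candidate):
            return candidate.issue_id
    # Step 3: no likely duplicate was found, so create the new issue.
    return create(title, description)
```

Passing the search, comparison, and creation steps in as callables keeps the decision logic testable without network access.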

Getting Started

Prerequisites

- Python 3.9+

- pip (Python package installer)

- Access to a SpaceBridge instance and API key.

- OpenAI API key (for the create_issue tool's LLM-based duplicate check; optional, see Configuration).

Installation using pip

- Install the package:

bash

pip install spacebridge-mcp

Installation from source

- Clone the repository:

bash

git clone <repository-url>
cd spacebridge-mcp

- Create and activate a virtual environment (recommended):

bash

# Create the virtual environment in .venv
python -m venv .venv
source .venv/bin/activate  # On Windows use `.venv\Scripts\activate`

- Install the package in editable mode, including development dependencies (for testing):

bash

# Use the specific python from your virtual env if 'pip' isn't found directly
.venv/bin/python -m pip install -e ".[dev]"
# Or if 'pip' is correctly on PATH for the venv:
# pip install -e ".[dev]"

Note: This installs the package such that changes to the source code are immediately reflected without reinstalling. Including [dev] installs packages needed for running tests, like pytest and respx.

Configuration

The server requires the following configuration values:

- Required:
  - SPACEBRIDGE_API_KEY: Your API key for authenticating with SpaceBridge.

- Optional (Configuration & Context):
  - SPACEBRIDGE_ORG_NAME: Explicitly sets the organization context. (Optional).
  - SPACEBRIDGE_PROJECT_NAME: Explicitly sets the project context. (Optional).

- Optional (Duplicate Detection Behavior):
  - OPENAI_API_KEY: Your OpenAI API key. Required if you want to use OpenAI for duplicate checking. If not provided, the server falls back to threshold-based checking.
  - DUPLICATE_SIMILARITY_THRESHOLD: Sets the similarity score threshold (0.0 to 1.0) used for duplicate detection when OPENAI_API_KEY is not provided. (Default: 0.75).
  - OPENAI_API_BASE: Specifies a custom base URL for the OpenAI API (e.g., for local models or other providers). (Used only if OPENAI_API_KEY is set).
  - OPENAI_MODEL: Specifies the OpenAI model name to use for duplicate checks. (Default: gpt-4o). (Used only if OPENAI_API_KEY is set).
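As a rough sketch of how the duplicate-detection settings above interact, the following reads them from a mapping of environment variables. The names `DuplicateCheckConfig`, `load_duplicate_config`, and `is_duplicate_by_threshold` are hypothetical, and treating a score equal to the threshold as a duplicate (`>=`) is an assumption; only the variable names and defaults come from this README.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class DuplicateCheckConfig:
    use_llm: bool             # True when OPENAI_API_KEY is set
    threshold: float          # used only for the threshold-based fallback
    model: Optional[str]      # used only when use_llm is True
    api_base: Optional[str]   # used only when use_llm is True


def load_duplicate_config(env: Mapping[str, str] = os.environ) -> DuplicateCheckConfig:
    """Build the duplicate-check settings from environment-style values."""
    use_llm = bool(env.get("OPENAI_API_KEY"))
    threshold = float(env.get("DUPLICATE_SIMILARITY_THRESHOLD", "0.75"))
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("DUPLICATE_SIMILARITY_THRESHOLD must be between 0.0 and 1.0")
    return DuplicateCheckConfig(
        use_llm=use_llm,
        threshold=threshold,
        model=env.get("OPENAI_MODEL", "gpt-4o") if use_llm else None,
        api_base=env.get("OPENAI_API_BASE") if use_llm else None,
    )


def is_duplicate_by_threshold(score: float, config: DuplicateCheckConfig) -> bool:
    """Fallback check used when no OpenAI key is configured (assumed >= comparison)."""
    return score >= config.threshold
```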

These values, along with organization/project context, can be provided in multiple ways. The server determines the final values based on the following order of precedence (highest first):

1. Command-line Arguments: Pass arguments when running the server. These override all other methods.

bash

# --org-name and --project-name set the organization and project explicitly
spacebridge-mcp-server \
  --spacebridge-api-key "YOUR_SB_KEY" \
  --openai-api-key "YOUR_OPENAI_KEY" \
  --org-name "YOUR_ORG" \
  --project-name "YOUR_PROJECT"
# Optional: add --project-dir "/path/to/your/project" to specify the directory for Git detection

(Use spacebridge-mcp-server --help to see all available arguments.)

2. Environment Variables: Set standard environment variables. These override .env files and Git detection.

bash

# Example setting required and some optional vars
export SPACEBRIDGE_API_KEY="YOUR_SB_KEY"
export OPENAI_API_KEY="YOUR_OPENAI_KEY"  # If using OpenAI duplicate check
export SPACEBRIDGE_ORG_NAME="YOUR_ORG"
export SPACEBRIDGE_PROJECT_NAME="YOUR_PROJECT"
# Then run:
spacebridge-mcp-server

3. .env File: Create a file named .env in the directory where you run the server. Environment variables and command-line arguments override values in this file.

dotenv

# .env file content
SPACEBRIDGE_API_KEY="YOUR_SB_KEY"
OPENAI_API_KEY="YOUR_OPENAI_KEY"
SPACEBRIDGE_ORG_NAME="YOUR_ORG"
SPACEBRIDGE_PROJECT_NAME="YOUR_PROJECT"

The server automatically loads values from this file if it exists.

4. Git Configuration Detection: If organization and project are not set via arguments or environment variables, the server attempts to detect them from the .git/config file.
   - It first checks in the directory specified by the --project-dir argument (if provided).
   - If --project-dir is not used, it checks the current working directory where the server was started.
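The precedence order above amounts to "first source that has a value wins". The sketch below illustrates that idea; it is not the server's implementation. All function names are hypothetical, the sources are assumed to be normalized to the same key names, and deriving the organization and project from the first remote URL in .git/config is an assumed heuristic, since this README does not say which fields the server actually reads.

```python
import re
from pathlib import Path
from typing import Dict, Mapping, Optional, Tuple


def parse_dotenv(text: str) -> Dict[str, str]:
    """Parse simple KEY="value" lines, skipping comments and blank lines."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def detect_git_context(project_dir: Path) -> Tuple[Optional[str], Optional[str]]:
    """Guess (org, project) from the first remote URL in .git/config (assumed heuristic)."""
    config = Path(project_dir) / ".git" / "config"
    if not config.is_file():
        return None, None
    match = re.search(r"^\s*url\s*=\s*(\S+)\s*$", config.read_text(), re.MULTILINE)
    if match is None:
        return None, None
    # Handles git@host:org/project.git as well as https://host/org/project.git
    url = re.sub(r"\.git$", "", match.group(1))
    parts = [p for p in re.split(r"[:/]", url) if p]
    if len(parts) < 2:
        return None, None
    return parts[-2], parts[-1]


def resolve(name: str, *sources: Mapping[str, Optional[str]]) -> Optional[str]:
    """Return the first non-empty value, checking sources from highest priority down."""
    for source in sources:
        value = source.get(name)
        if value:
            return value
    return None
```

A call such as `resolve("org_name", cli_values, env_values, dotenv_values, git_values)` then mirrors the order listed above, with each argument being a plain dictionary.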

Context Handling Priority:

The organization and project context used for API calls is determined as follows:

- Startup Context: The context determined during server startup (using the precedence above: Args > Env Vars > .env File > Git Detection) is used by default.

- Tool Parameter Fallback: If (and only if) the server could not determine the context during startup (e.g., no explicit config provided and not running in a Git repository), the optional org_name and project_name parameters provided in a tool call (search_issues, create_issue, update_issue) will be used as a fallback.
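A minimal sketch of this fallback rule is shown below. `Context` and `effective_context` are hypothetical names, and applying the fallback to each field independently is an assumption; the README only states that tool parameters are used when startup detection found nothing.

```python
from typing import NamedTuple, Optional


class Context(NamedTuple):
    org_name: Optional[str]
    project_name: Optional[str]


def effective_context(
    startup: Context,
    tool_org_name: Optional[str] = None,
    tool_project_name: Optional[str] = None,
) -> Context:
    """Startup values always win; tool-call parameters only fill missing values."""
    return Context(
        org_name=startup.org_name or tool_org_name,
        project_name=startup.project_name or tool_project_name,
    )
```

With a startup context of `Context("acme", "widgets")`, any org_name or project_name passed in a tool call is ignored; with `Context(None, None)`, the tool-call values are used.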

Note on Client Configuration: When configuring MCP clients like Claude Code (see the "Connecting MCP Clients" section), passing credentials or context via the client's --env flags effectively sets them as environment variables for the server process (Priority 2). This is the recommended way to provide explicit context in environments where Git detection might fail (like Windsurf or Cursor).

Running the Server

Once installed and configured, you can run the server using the command defined in pyproject.toml:

spacebridge-mcp-server

The server will start listening for MCP connections via standard input/output (stdio) by default.

Connecting MCP Clients

This server uses standard input/output (stdio) for communication. You need to configure your MCP client (e.g., Claude Code, Windsurf, Cursor) to launch the spacebridge-mcp-server command and pass the required environment variables.

Configuration Steps:

1. Install: Ensure spacebridge-mcp is installed (see Installation section). The spacebridge-mcp-server command should be available in your environment's path (e.g., inside your virtual environment's bin directory if installed from source, or globally if installed via pip install spacebridge-mcp).

2. Find Server Path: Determine the full path to the spacebridge-mcp-server executable. If you installed in a virtual environment .venv, it might be /path/to/your/project/.venv/bin/spacebridge-mcp-server. If installed globally, you can often find it with which spacebridge-mcp-server (Linux/macOS) or where spacebridge-mcp-server (Windows).

3. Add Server to Client: Use your MCP client's command for adding a new server.

Example using claude mcp add (Claude Code):

claude mcp add spacebridge \
  /full/path/to/your/spacebridge-mcp-server \
  --scope user \
  --env SPACEBRIDGE_API_KEY="your-spacebridge-api-key" \
  --env OPENAI_API_KEY="your-openai-api-key"

- Replace /full/path/to/your/spacebridge-mcp-server with the actual path found in step 2.

- Replace the placeholder API keys with your actual credentials.

- --scope user makes the server available across all your projects in Claude Code. Use --scope project to limit it to the current project.

Other Clients (Windsurf, Cursor, etc.):

Refer to the specific documentation for your client. The general principle is the same: provide a name for the server and the command to execute it, ensuring the necessary environment variables (SPACEBRIDGE_API_KEY, OPENAI_API_KEY, and optionally SPACEBRIDGE_ORG_NAME, SPACEBRIDGE_PROJECT_NAME) are passed to the command's environment. Some clients might have dedicated fields for environment variables, while others might require a wrapper script. Passing SPACEBRIDGE_ORG_NAME and SPACEBRIDGE_PROJECT_NAME is recommended for clients where the server's working directory might not match the actual project directory (e.g., Windsurf, Cursor).
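For clients that read a JSON configuration file, the entry can be generated with a short script. This is a sketch under assumptions: the `mcpServers` layout with `command` and `env` keys is used by some clients, but the exact schema and file location are client-specific and should be checked against your client's documentation. `build_server_entry` is a hypothetical helper, not part of this package.

```python
import json
import shutil
from typing import Dict, Optional


def build_server_entry(
    command: str,
    api_key: str,
    openai_key: Optional[str] = None,
    org_name: Optional[str] = None,
    project_name: Optional[str] = None,
) -> Dict:
    """Build a server entry in the assumed `mcpServers` JSON layout."""
    env = {"SPACEBRIDGE_API_KEY": api_key}
    optional = {
        "OPENAI_API_KEY": openai_key,
        "SPACEBRIDGE_ORG_NAME": org_name,
        "SPACEBRIDGE_PROJECT_NAME": project_name,
    }
    # Only include optional variables that were actually provided.
    env.update({key: value for key, value in optional.items() if value})
    return {"mcpServers": {"spacebridge": {"command": command, "env": env}}}


if __name__ == "__main__":
    # shutil.which searches PATH, like `which` (Linux/macOS) or `where` (Windows).
    command = shutil.which("spacebridge-mcp-server") or "/full/path/to/your/spacebridge-mcp-server"
    entry = build_server_entry(
        command,
        api_key="your-spacebridge-api-key",
        org_name="YOUR_ORG",
        project_name="YOUR_PROJECT",
    )
    print(json.dumps(entry, indent=2))
```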

Usage Tips & Agentic Workflows

- Automated Commit Messages: Configure your AI tool (e.g., via custom instructions or rules in Claude Code, Windsurf, Cursor) to automatically reference relevant issue IDs when generating commit messages.
  - Example Rule/Instruction: "When generating a commit message, always ask the SpaceBridge-MCP tool to find or create an issue related to the changes and include the resulting issue ID (e.g., SB-123) in the commit message footer."
  - The AI can then use the search_issues or create_issue tool via this MCP server. If an issue exists, it gets the ID; if not, create_issue makes one and returns the new ID.

- Context Augmentation: When asking your AI assistant to work on a feature or bug, provide the SpaceBridge issue ID. The assistant can then use the get_issue_by_id resource via this MCP server to fetch the full context (description, comments, status) directly from your tracker, leading to more informed code generation or analysis.

- Quick Issue Creation: Simply ask your assistant "Create an issue about [brief description]" while coding. The create_issue tool will handle the similarity search and creation process.
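The commit-message rule above could be backed by a small helper like the one below, for example in a hook or a script the assistant calls. It is only a sketch: `add_issue_footer` is a hypothetical function, the `Refs:` footer label is an assumption, and the ID pattern is generalized from the single `SB-123` example in this README.

```python
import re

# Assumed ID shape: uppercase prefix, hyphen, digits (generalized from "SB-123").
ISSUE_ID = re.compile(r"\b[A-Z][A-Z0-9]*-\d+\b")


def add_issue_footer(message: str, issue_id: str, label: str = "Refs") -> str:
    """Append an issue reference footer unless the message already mentions the ID."""
    if not ISSUE_ID.fullmatch(issue_id):
        raise ValueError(f"unexpected issue ID format: {issue_id!r}")
    if issue_id in ISSUE_ID.findall(message):
        return message
    return f"{message.rstrip()}\n\n{label}: {issue_id}\n"
```

Calling the helper twice with the same ID leaves the message unchanged the second time, so it is safe to run on an already-tagged commit message.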


Development

Running Tests

- Ensure you have installed the development dependencies:

bash

.venv/bin/python -m pip install -e ".[dev]"

- Run pytest from the project root directory:

bash

.venv/bin/python -m pytest -vvv

Contributing

Contributions are welcome! Please follow these steps:

- Find an Issue or Suggest an Idea:
  - Check the GitHub Issues to see if your issue or idea is already being tracked.
  - If not, open a new issue to discuss your proposed change or report a bug.

- Fork the Repository: Create your own fork of the project on GitHub.

- Create a Branch: Create a new branch in your fork for your changes:

bash

git checkout -b feature/your-feature-name

- Set Up Development Environment:
  - Ensure you have Python 3.9+ installed (see Prerequisites).
  - Create and activate a virtual environment (e.g., using venv):

bash

python -m venv .venv
source .venv/bin/activate  # Linux/macOS
# .\.venv\Scripts\activate  # Windows

  - Install the project with development dependencies:

bash

python -m pip install -e ".[dev]"

- Make Your Changes: Implement your feature or bug fix.

- Run Tests: Ensure all tests pass:

bash

python -m pytest -vvv

- Lint and Format: Ensure code style consistency (assuming Ruff is used, adjust if needed):

bash

ruff check . --fix
ruff format .

- Commit Your Changes: Commit your changes with a clear and descriptive message.

bash

git commit -m "feat: Add feature X"  # Or fix:, chore:, etc.

- Push to Your Fork:

bash

git push origin feature/your-feature-name

- Open a Pull Request: Go to the original repository on GitHub and open a Pull Request from your fork's branch to the main branch of the original repository. Provide a clear description of your changes in the PR.

- Code Review: Wait for maintainers to review your PR. Address any feedback provided.

License

This project is licensed under the MIT License. See the LICENSE file for details.
