Framelink MCP for Figma

Seamlessly connect Figma design data to AI coding agents via the Model Context Protocol

Give Cursor and other AI-powered coding tools access to your Figma files with this Model Context Protocol server.

When Cursor has access to Figma design data, it is far better at implementing designs accurately in one shot than alternative approaches such as pasting screenshots.

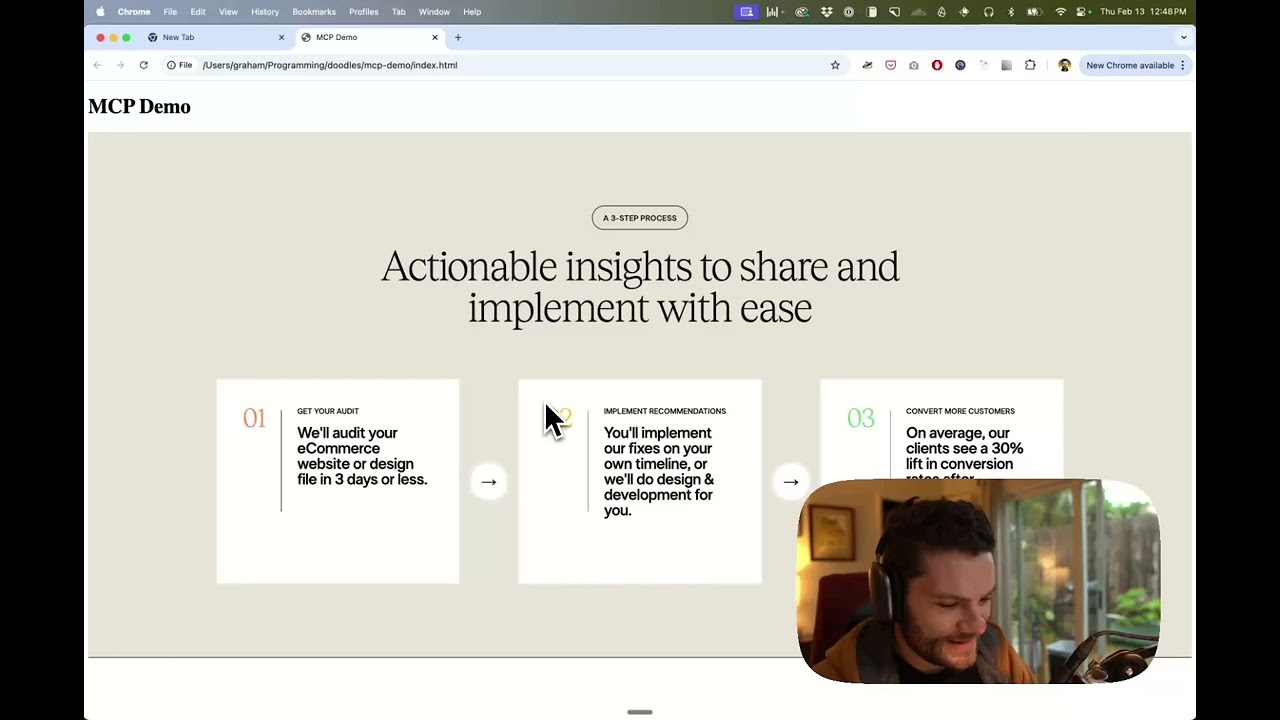

Demo

Watch a demo of building a UI in Cursor with Figma design data

How it works

- Open your IDE's chat (e.g. agent mode in Cursor).

- Paste a link to a Figma file, frame, or group.

- Ask Cursor to do something with the Figma file, e.g. implement the design.

- Cursor will fetch the relevant metadata from Figma and use it to write your code.

This MCP server is specifically designed for use with Cursor. Before responding with context from the Figma API, it simplifies and translates the response so only the most relevant layout and styling information is provided to the model.

Reducing the amount of context provided to the model helps make the AI more accurate and the responses more relevant.

Getting Started

Many code editors and other AI clients use a configuration file to manage MCP servers.

The figma-developer-mcp server can be configured by adding the following to your configuration file.

NOTE: You will need to create a Figma access token to use this server. Figma's help documentation explains how to create a Figma API access token.

macOS / Linux

{
  "mcpServers": {
    "Framelink MCP for Figma": {
      "command": "npx",
      "args": ["-y", "figma-developer-mcp", "--figma-api-key=YOUR-KEY", "--stdio"]
    }
  }
}

Windows

{
  "mcpServers": {
    "Framelink MCP for Figma": {
      "command": "cmd",
      "args": ["/c", "npx", "-y", "figma-developer-mcp", "--figma-api-key=YOUR-KEY", "--stdio"]
    }
  }
}

Alternatively, you can set FIGMA_API_KEY and PORT in the env field of the server entry.
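As a rough sketch of the env-based setup, the macOS / Linux entry might look like the following. It assumes your client accepts an env object inside each server entry (many MCP clients do, but check your client's documentation). Both values are placeholders: YOUR-KEY stands in for your own token, and the PORT value of 3333 is only an illustrative number, not a confirmed default.

```json
{
  "mcpServers": {
    "Framelink MCP for Figma": {
      "command": "npx",
      "args": ["-y", "figma-developer-mcp", "--stdio"],
      "env": {
        "FIGMA_API_KEY": "YOUR-KEY",
        "PORT": "3333"
      }
    }
  }
}
```

With this layout the token is no longer part of the args array, so the argument list stays the same if the key changes. On Windows, the same env object should be usable alongside the cmd-based command and args shown above.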

If you need more information on how to configure the Framelink MCP for Figma, see the Framelink docs.

Star History

Learn More

The Framelink MCP for Figma is simple but powerful. Get the most out of it by learning more at the Framelink site.

Related MCPs

Discover similar Model Context Protocol servers

Bifrost

VSCode Dev Tools exposed via the Model Context Protocol for AI tool integration.

Bifrost is a Visual Studio Code extension that launches a Model Context Protocol (MCP) server, enabling external AI coding assistants to access advanced code navigation, analysis, and manipulation features from VSCode. It exposes language server capabilities, symbol search, semantic code analysis, and refactoring tools through MCP-compatible HTTP and SSE endpoints. The extension is designed for seamless integration with AI assistants, supporting multi-project environments and configuration via JSON files.

- ⭐ 184

- MCP

- biegehydra/BifrostMCP

Webvizio MCP Server

Bridge between Webvizio feedback and AI coding agents via the Model Context Protocol

Webvizio MCP Server is a TypeScript-based server implementing the Model Context Protocol to securely and efficiently interface with the Webvizio API. It transforms web page feedback and bug reports into structured, actionable developer tasks, providing AI coding agents with comprehensive task context and data. It offers methods to fetch project and task details, retrieve logs and screenshots, and manage task statuses. The server standardizes communication between Webvizio and AI agent clients, facilitating automated issue resolution.

- ⭐ 4

- MCP

- Webvizio/mcp

Firefly MCP Server

Seamless resource discovery and codification for Cloud and SaaS with Model Context Protocol integration.

Firefly MCP Server is a TypeScript-based server implementing the Model Context Protocol to enable integration with the Firefly platform for discovering and managing resources across Cloud and SaaS accounts. It supports secure authentication, resource codification into infrastructure as code, and easy integration with tools such as Claude and Cursor. The server can be configured via environment variables or command line and communicates using standardized MCP interfaces. Its features facilitate automation and codification workflows for cloud resource management.

- ⭐ 15

- MCP

- gofireflyio/firefly-mcp

Mindpilot MCP

Visualize and understand code structures with on-demand diagrams for AI coding assistants.

Mindpilot MCP provides AI coding agents with the capability to visualize, analyze, and understand complex codebases through interactive diagrams. It operates as a Model Context Protocol (MCP) server, enabling seamless integration with multiple development environments such as VS Code, Cursor, Windsurf, Zed, and Claude Code. Mindpilot ensures local processing for privacy, supports multi-client connections, and offers robust configuration options for server operation and data management. Users can export diagrams and adjust analytics settings for improved user control.

- ⭐ 61

- MCP

- abrinsmead/mindpilot-mcp

MCP Linear

MCP server for AI-driven control of Linear project management.

MCP Linear is a Model Context Protocol (MCP) server implementation that enables AI assistants to interact with the Linear project management platform. It provides a bridge between AI systems and the Linear GraphQL API, allowing the retrieval and management of issues, projects, teams, and more. With MCP Linear, users can create, update, assign, and comment on Linear issues, as well as manage project and team structures directly through AI interfaces. The tool supports seamless integration via Smithery and can be configured for various AI clients like Cursor and Claude Desktop.

- ⭐ 117

- MCP

- tacticlaunch/mcp-linear

Sequa MCP

Bridge Sequa's advanced context engine to any MCP-capable AI client.

Sequa MCP acts as a seamless integration layer, connecting Sequa’s knowledge engine with various AI coding assistants and IDEs via the Model Context Protocol (MCP). It enables tools to leverage Sequa’s contextual knowledge streams, enhancing code understanding and task execution across multiple repositories. The solution provides a simple proxy command to interface with standardized MCP transports, supporting configuration in popular environments such as Cursor, Claude, VSCode, and others. Its core purpose is to deliver deep, project-specific context to LLM agents through a unified and streamable endpoint.

- ⭐ 16

- MCP

- sequa-ai/sequa-mcp
