Mindpilot MCP

Visualize and understand code structures with on-demand diagrams for AI coding assistants.


See through your agent's eyes. Visualize legacy code, inspect complex flows, understand everything.

Why Mindpilot?

- Visualize Anything: Use your coding agent to generate on-demand architecture, code, and process diagrams to view your code from different perspectives.

- Vibe Checks: AI-generated code can accumulate unused and redundant constructs. Use visualizations to spot areas that need cleanup.

- Local Processing: Diagrams are never sent to the cloud. Everything stays between you, your agent, and your agent's LLM provider(s).

- Export & Share: Export any diagram as a vector image.

Prerequisites

Node.js v20.0.0 or higher.

Quickstart

Claude Code

claude mcp add mindpilot -- npx @mindpilot/mcp@latest

Cursor

Under Settings > Cursor Settings > MCP, click Add new global MCP server and configure mindpilot in the mcpServers object:

{
  "mcpServers": {
    "mindpilot": {
      "command": "npx",
      "args": ["@mindpilot/mcp@latest"]
    }
  }
}

VS Code

Follow the instructions for enabling MCP servers in VS Code: https://code.visualstudio.com/docs/copilot/chat/mcp-servers

Go to Settings > Features > MCP, then click Edit in settings.json.

Then add mindpilot to your MCP configuration:

{
  "mcp": {
    "servers": {
      "mindpilot": {
        "type": "stdio",
        "command": "npx",
        "args": ["@mindpilot/mcp@latest"]
      }
    }
  }
}

Windsurf

Under Settings > Windsurf Settings > Manage Plugins, click view raw config and configure mindpilot in the mcpServers object:

{
  "mcpServers": {
    "mindpilot": {
      "command": "npx",
      "args": ["@mindpilot/mcp@latest"]
    }
  }
}

Zed

In the AI Thread panel, click the three dots (...), then click Add Custom Server...

In the Command to run MCP server field, enter npx @mindpilot/mcp@latest and click Add Server.

Configuration Options

- Port: The server defaults to port 4000 but can be configured using the --port command line switch.

- Data Path: By default, diagrams are saved to ~/.mindpilot/data/. You can specify a custom location using the --data-path command line switch.
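The following TypeScript sketch shows how these switches fit together. It resolves them with Node's built-in util.parseArgs, which is available in Node.js v20. This is not Mindpilot's actual startup code. The parseMindpilotArgs function and the MindpilotOptions shape are hypothetical, and only the switch names and defaults come from this README. The --disable-analytics switch is covered under Disabling Analytics.

```typescript
import { parseArgs } from "node:util";
import { homedir } from "node:os";
import { join } from "node:path";

// Hypothetical shape of the resolved options (not Mindpilot's real types).
interface MindpilotOptions {
  port: number;
  dataPath: string;
  disableAnalytics: boolean;
}

// Defaults documented in this README: port 4000 and ~/.mindpilot/data/.
const DEFAULT_PORT = 4000;
const DEFAULT_DATA_PATH = join(homedir(), ".mindpilot", "data");

function parseMindpilotArgs(argv: string[]): MindpilotOptions {
  const { values } = parseArgs({
    args: argv,
    options: {
      port: { type: "string" },
      "data-path": { type: "string" },
      "disable-analytics": { type: "boolean" },
    },
    strict: true, // unknown switches throw instead of being silently ignored
  });

  // Fall back to the default port and reject anything that is not a valid TCP port.
  const port = values.port === undefined ? DEFAULT_PORT : Number(values.port);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid --port value: ${values.port}`);
  }

  return {
    port,
    dataPath: values["data-path"] ?? DEFAULT_DATA_PATH,
    disableAnalytics: values["disable-analytics"] ?? false,
  };
}

// Example: the arguments npx would forward after the package name.
console.log(parseMindpilotArgs(["--port", "5555", "--data-path", "/tmp/diagrams"]));
```

Running the snippet prints the resolved options for a custom port and data path. An invalid value such as --port abc throws an error instead of falling back to the default.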

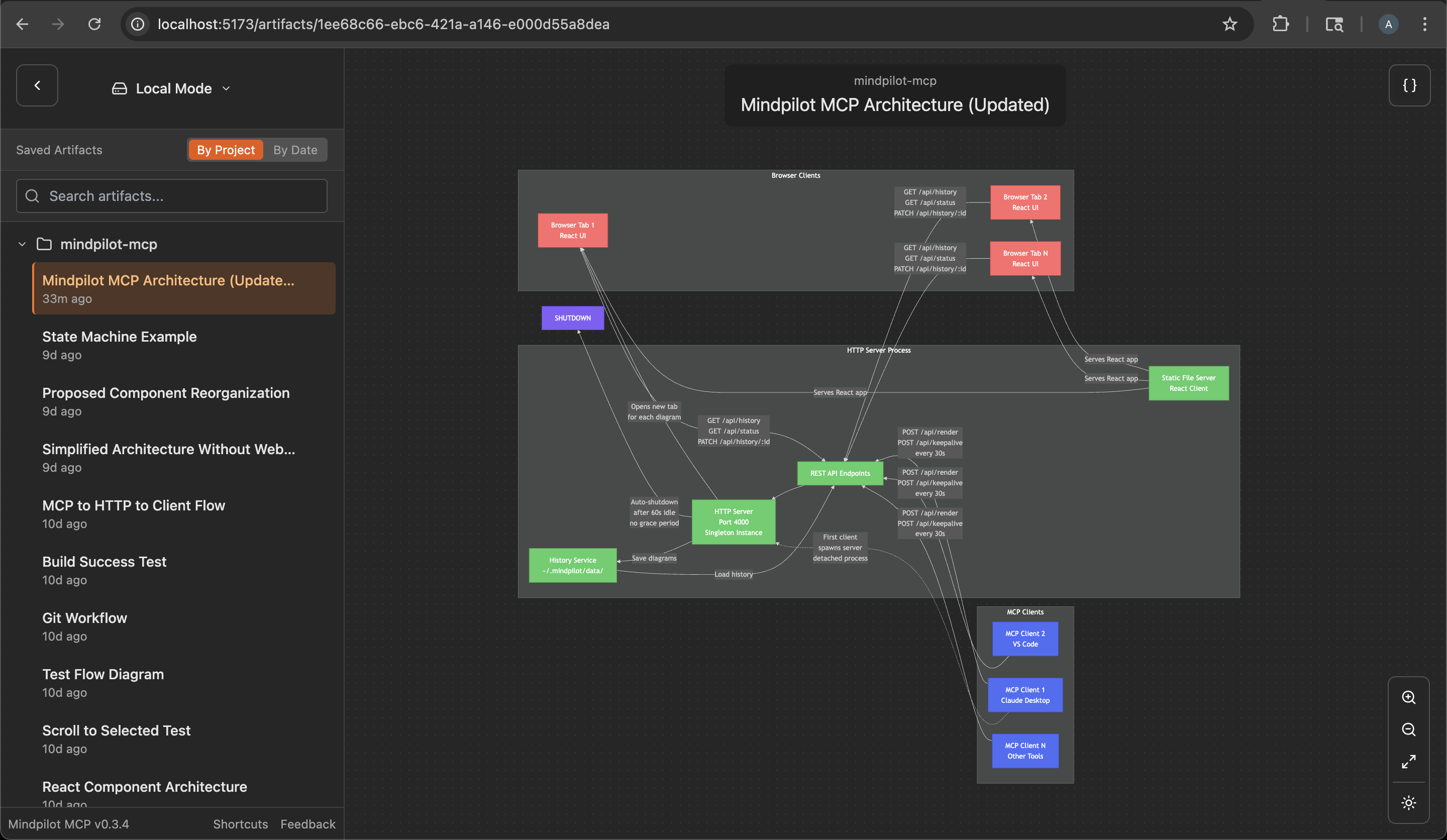

Multi-Client Support

Mindpilot handles multiple AI assistants running simultaneously. When you have multiple Claude Desktop windows or IDE instances open:

- The first MCP client to use Mindpilot starts a shared web server

- Additional assistants automatically connect to the existing server

- All assistants share the same diagram history and web interface

- The server automatically shuts down one minute after the last MCP client disconnects

This means you can work with multiple MCP hosts at once without port conflicts, and they'll all contribute to the same collection of diagrams.
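The shutdown behavior described above works like reference counting with a grace period. The TypeScript sketch below illustrates that idea. It is hypothetical and is not Mindpilot's implementation: ClientRegistry, the Scheduler interface, and the client IDs are all assumptions. The scheduler can be swapped out, so tests can replace the one-minute timer with a fake.

```typescript
// Minimal timer abstraction so the grace-period timer can be faked in tests.
interface Scheduler {
  set(callback: () => void, ms: number): unknown;
  clear(handle: unknown): void;
}

const realScheduler: Scheduler = {
  set: (callback, ms) => setTimeout(callback, ms),
  clear: (handle) => clearTimeout(handle as ReturnType<typeof setTimeout>),
};

// Hypothetical registry: tracks connected MCP clients and calls onIdle once
// the last one has been gone for graceMs milliseconds.
class ClientRegistry {
  private readonly clients = new Set<string>();
  private pending: unknown = null;
  private readonly onIdle: () => void;
  private readonly graceMs: number;
  private readonly scheduler: Scheduler;

  constructor(onIdle: () => void, graceMs = 60_000, scheduler: Scheduler = realScheduler) {
    this.onIdle = onIdle;
    this.graceMs = graceMs; // one minute, matching the documented behavior
    this.scheduler = scheduler;
  }

  connect(clientId: string): void {
    this.clients.add(clientId);
    // A client arriving during the grace period cancels the pending shutdown.
    if (this.pending !== null) {
      this.scheduler.clear(this.pending);
      this.pending = null;
    }
  }

  disconnect(clientId: string): void {
    this.clients.delete(clientId);
    // Start the countdown only when no clients remain and none is running yet.
    if (this.clients.size === 0 && this.pending === null) {
      this.pending = this.scheduler.set(() => {
        this.pending = null;
        this.onIdle();
      }, this.graceMs);
    }
  }

  get connectedCount(): number {
    return this.clients.size;
  }
}

const registry = new ClientRegistry(() => console.log("no clients left; shutting down"));
registry.connect("claude-code");
console.log(registry.connectedCount); // 1
```

In this sketch, a client that connects during the grace period cancels the pending shutdown, so the shared server keeps running.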

Anonymous Usage Tracking

Mindpilot MCP collects anonymous usage data to help us understand how the product is being used and improve the user experience.

Disabling Analytics

If you prefer not to share anonymous usage data, you can disable analytics by adding the --disable-analytics flag to your MCP configuration:

Claude Code:

claude mcp add mindpilot -- npx @mindpilot/mcp@latest --disable-analytics

Other IDEs:

Add "--disable-analytics" to the args array in your configuration:

{
  "command": "npx",
  "args": ["@mindpilot/mcp@latest", "--disable-analytics"]
}

Using the MCP server

After configuring the MCP server in your coding agent, you can make requests like "create a diagram about x". The agent should then use the MCP server to render Mermaid diagrams in a browser connected to the server.

You can optionally update your agent's rules file to give specific instructions about when to use mindpilot-mcp.

Example requests

- "Show me the state machine for WebSocket connection logic"

- "Create a C4 context diagram of this project's architecture."

- "Show me the OAuth flow as a sequence diagram"

How it works

Frontier LLMs are good at generating valid Mermaid syntax. The MCP server accepts Mermaid syntax from the agent and renders the diagrams in a web app running at http://localhost:4000 (the default port).
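The server only receives Mermaid text. For a request like "Show me the OAuth flow as a sequence diagram", the agent therefore sends plain Mermaid source. The TypeScript sketch below builds such a source string. The sequenceDiagram helper and the Message type are hypothetical, and the OAuth flow is deliberately simplified. This README does not document the exact tool name or input schema that Mindpilot exposes to the agent, so they are not shown.

```typescript
// Hypothetical type: one message in a Mermaid sequence diagram.
interface Message {
  from: string;
  to: string;
  text: string;
  reply?: boolean; // draw a dashed arrow for responses
}

// Build Mermaid sequenceDiagram source from participants and messages.
function sequenceDiagram(participants: string[], messages: Message[]): string {
  const lines = ["sequenceDiagram"];
  for (const p of participants) {
    lines.push(`    participant ${p}`);
  }
  for (const m of messages) {
    const arrow = m.reply ? "-->>" : "->>"; // solid request, dashed reply
    lines.push(`    ${m.from}${arrow}${m.to}: ${m.text}`);
  }
  return lines.join("\n");
}

// A simplified OAuth authorization-code exchange.
const oauth = sequenceDiagram(
  ["User", "App", "AuthServer"],
  [
    { from: "User", to: "App", text: "Click Sign in" },
    { from: "App", to: "AuthServer", text: "Authorization request" },
    { from: "AuthServer", to: "App", text: "Authorization code", reply: true },
    { from: "App", to: "AuthServer", text: "Exchange code for token" },
    { from: "AuthServer", to: "App", text: "Access token", reply: true },
  ],
);
console.log(oauth);
```

The printed text is a nine-line Mermaid diagram that starts with sequenceDiagram. A string of this kind is what the web app renders.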

Troubleshooting

Port Conflicts

If you use port 4000 for another service, you can configure the MCP server to use a different port.

Claude Code example:

claude mcp add mindpilot -- npx @mindpilot/mcp@latest --port 5555

Custom Data Path

To save diagrams to a custom location (e.g., for syncing with cloud storage):

Claude Code example:

claude mcp add mindpilot -- npx @mindpilot/mcp@latest --data-path /path/to/custom/location

Other IDEs:

{
  "command": "npx",
  "args": ["@mindpilot/mcp@latest", "--data-path", "/path/to/custom/location"]
}
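If you generate configurations from a script, the command line switches map directly onto the args array. The following TypeScript sketch uses a hypothetical helper, buildMindpilotEntry, which is not part of Mindpilot. It assumes that --port, --data-path, and --disable-analytics can be combined in one invocation, as separate command line switches usually can. For VS Code, the same entry would sit under mcp.servers with "type": "stdio" added.

```typescript
// Hypothetical options mirroring the documented command line switches.
interface MindpilotConfigOptions {
  port?: number;
  dataPath?: string;
  disableAnalytics?: boolean;
}

interface McpServerEntry {
  command: string;
  args: string[];
}

// Build the mindpilot entry for an mcpServers object (Cursor/Windsurf style).
function buildMindpilotEntry(opts: MindpilotConfigOptions = {}): McpServerEntry {
  const args = ["@mindpilot/mcp@latest"];
  if (opts.port !== undefined) args.push("--port", String(opts.port));
  if (opts.dataPath !== undefined) args.push("--data-path", opts.dataPath);
  if (opts.disableAnalytics) args.push("--disable-analytics");
  return { command: "npx", args };
}

const config = {
  mcpServers: {
    mindpilot: buildMindpilotEntry({ port: 5555, dataPath: "/path/to/custom/location" }),
  },
};
console.log(JSON.stringify(config, null, 2));
```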

asdf Issues

If you use asdf as a version manager and have trouble getting MCPs to work (not just mindpilot), you may need to set a "global" nodejs version from your home directory.

cd

asdf set nodejs x.x.x

Development Configuration

Configure the MCP server in your coding agent (using Claude Code in this example):

claude mcp add mindpilot -- npx tsx <path to...>/src/server/server.ts

Run claude with the --debug flag if you need to see MCP errors.

Start the development client (Vite) to get hot module reloading while developing.

npm run dev

Open the development client at http://localhost:5173.


Related MCPs

Discover similar Model Context Protocol servers

In Memoria

Persistent memory and instant context for AI coding assistants, integrated via MCP.

In Memoria is an MCP server that enables AI coding assistants such as Claude or Copilot to retain, recall, and provide context about codebases across sessions. It learns patterns, architecture, and conventions from user code, offering persistent intelligence that eliminates repetitive explanations and generic suggestions. Through the Model Context Protocol, it allows AI tools to perform semantic search, smart file routing, and track project-specific decisions efficiently.

- ⭐ 94

- MCP

- pi22by7/In-Memoria

Inspektor Gadget MCP Server

AI-powered Kubernetes troubleshooting via Model Context Protocol.

Inspektor Gadget MCP Server provides an AI-powered debugging and inspection interface for Kubernetes clusters. Leveraging the Model Context Protocol, it enables intelligent output summarization, one-click deployment of Inspektor Gadget, and automated discovery of debugging tools from Artifact Hub. The server integrates seamlessly with VS Code for interactive AI commands, simplifying Kubernetes troubleshooting and monitoring workflows.

- ⭐ 16

- MCP

- inspektor-gadget/ig-mcp-server

FileScopeMCP

Instantly understand and visualize your codebase structure & dependencies.

FileScopeMCP is a TypeScript-based server that implements the Model Context Protocol to analyze codebases, rank file importance, and track dependencies. It provides AI tools with comprehensive insights into file relationships, importance scores, and custom file summaries. The system supports visualization through Mermaid diagrams, persistent storage, and multi-language analysis for easier code comprehension. Seamless integration with Cursor ensures structured model context delivery for enhanced AI-driven code assistance.

- ⭐ 256

- MCP

- admica/FileScopeMCP

AI Distiller (aid)

Efficient codebase summarization and context extraction for AI code generation.

AI Distiller enables efficient distillation of large codebases by extracting essential context, such as public interfaces and data types, discarding method implementations and non-public details by default. It helps AI agents like Claude, Cursor, and other MCP-compatible tools understand project architecture more accurately, reducing hallucinations and code errors. With configurable CLI options, it generates condensed contexts that fit within AI model limitations, improving code generation accuracy and integration with the Model Context Protocol.

- ⭐ 106

- MCP

- janreges/ai-distiller

kibitz

The coding agent for professionals with MCP integration.

kibitz is a coding agent that supports advanced AI collaboration by enabling seamless integration with Model Context Protocol (MCP) servers via WebSockets. It allows users to configure Anthropic API keys, system prompts, and custom context providers for each project, enhancing contextual understanding for coding tasks. The platform is designed for developers and professionals seeking tailored AI-driven coding workflows and provides flexible project-specific configuration.

- ⭐ 104

- MCP

- nick1udwig/kibitz

Web Analyzer MCP

Intelligent web content analysis and summarization via MCP.

Web Analyzer MCP is an MCP-compliant server designed for intelligent web content analysis and summarization. It leverages FastMCP to perform advanced web scraping, content extraction, and AI-powered question-answering using OpenAI models. The tool integrates with various developer IDEs, offering structured markdown output, essential content extraction, and smart Q&A functionality. Its features streamline content analysis workflows and support flexible model selection.

- ⭐ 2

- MCP

- kimdonghwi94/web-analyzer-mcp
