What is Nexa AI?

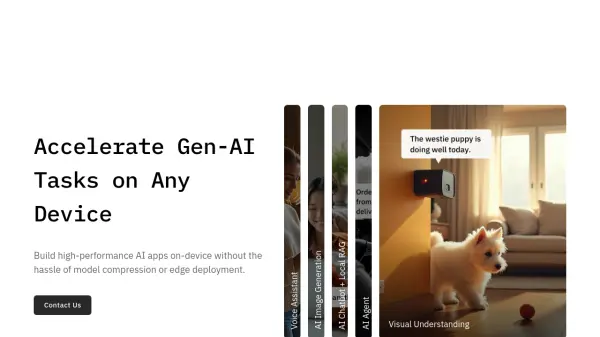

Nexa AI is a comprehensive on-device AI framework that enables local inference across multiple modalities. The platform supports text generation, image generation, vision-language models, audio-language models, speech-to-text, and text-to-speech capabilities through its SDK.

Nexa AI runs on CPU, GPU, and NPU hardware across PCs, mobile devices, wearables, automobiles, and robotics. Local deployment keeps data on the device, and optimized tiny models keep inference efficient.

Features

- Multi-Device Support: Runs on CPU, GPU, and NPU hardware across PC, mobile, wearables, automobiles, and robotics

- Privacy-First Architecture: Keeps sensitive data on device with local processing

- OpenAI-Compatible Server: Supports streaming and JSON schema-based function calling

- Interactive UI: Built with Streamlit for easy model interaction

- Customized Model Optimization: Fine-tuning and quantization for efficient deployment

- Cross-Platform Deployment: Supports various hardware and software environments
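
To illustrate the OpenAI-compatible server feature, here is a minimal sketch of a chat completions request that includes one function definition described with JSON schema. It follows the general OpenAI request format only. The server address, the model name, and the `get_weather` tool are assumptions made for illustration, not values taken from Nexa's documentation, so check them against your local setup.

```python
import json
import urllib.request

# Assumed values for illustration only; replace with your local server's settings.
BASE_URL = "http://localhost:8000/v1"  # hypothetical address and port
MODEL = "local-model"                  # hypothetical model name


def build_chat_request(prompt, stream=False):
    """Build an OpenAI-style chat completions payload with one tool definition."""
    return {
        "model": MODEL,
        "stream": stream,
        "messages": [{"role": "user", "content": prompt}],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",  # hypothetical tool
                    "description": "Look up the current weather for a city.",
                    # The tool's arguments are described with JSON schema.
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                },
            }
        ],
    }


def send_chat_request(payload, base_url=BASE_URL):
    """POST a non-streaming payload and return the parsed JSON response."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    payload = build_chat_request("What is the weather in Paris?")
    print(json.dumps(payload, indent=2))
    # Uncomment once a local OpenAI-compatible server is running:
    # print(send_chat_request(payload))
```

Because the request never leaves `localhost`, the prompt and any tool arguments stay on the device. With `stream=True`, an OpenAI-style server returns incremental chunks rather than a single JSON body, so `send_chat_request` as written applies only to non-streaming calls.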

Use Cases

- Conversational AI with RAG for company data

- Private Meeting Summaries

- Personal Information Organization

- Custom AI Assistants

- End-to-end Local RAG Systems

- Voice-Enabled Personal AI Assistants

- AI Influencer Marketing Automation

FAQs

- What types of models does Nexa SDK support?

  Nexa SDK supports ONNX and GGML models for text generation, image generation, vision-language models, audio-language models, speech-to-text, and text-to-speech.

- What platforms and hardware are supported?

  It runs on CPU, GPU (CUDA, Metal, ROCm, Vulkan), and NPU hardware across PC, mobile, wearables, automobiles, and robotics.

- How does Nexa ensure data privacy?

  Nexa processes all data locally on the device, so sensitive information never leaves the user's hardware.
