Top AI tools for LLM evaluation

-

Evidently AI: Collaborative AI observability platform for evaluating, testing, and monitoring AI-powered products

Evidently AI is a comprehensive AI observability platform that helps teams evaluate, test, and monitor LLM and ML models in production, offering data drift detection, quality assessment, and performance monitoring capabilities.

- Freemium

- From $50

-

EleutherAI: Empowering Open-Source Artificial Intelligence Research

EleutherAI is a research institute focused on advancing and democratizing open-source AI, particularly in language modeling, interpretability, and alignment. It trains, releases, and evaluates powerful open-source LLMs.

- Free

-

Kili Technology: Build Better Data, Now.

Kili Technology is a data-centric AI platform providing tools and services for creating high-quality training datasets and evaluating LLMs. It streamlines the data labeling process and offers expert-led project management for machine learning projects.

- Freemium

-

Radicalbit: Your ready-to-use MLOps platform for Machine Learning, Computer Vision, and LLMs.

Radicalbit is an MLOps and AI observability platform that accelerates deployment, serving, observability, and explainability of AI models. It offers real-time data exploration, outlier and drift detection, and model monitoring.

- Contact for Pricing

-

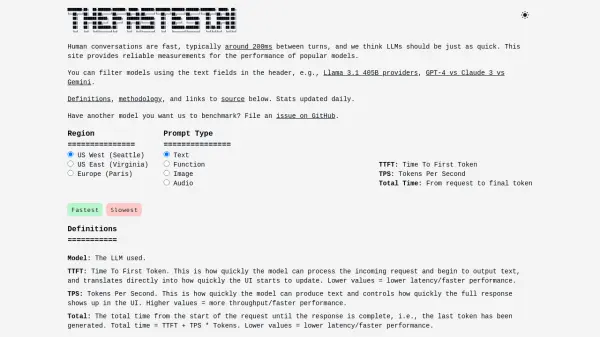

TheFastest.ai: Reliable performance measurements for popular LLM models.

TheFastest.ai provides reliable performance benchmarks, updated daily, for popular Large Language Models (LLMs), measuring Time To First Token (TTFT) and Tokens Per Second (TPS) across different regions and prompt types.

- Free

-

Flow AI: The data engine for AI agent testing

Flow AI accelerates AI agent development by providing continuously evolving, validated test data grounded in real-world information and refined by domain experts.

- Contact for Pricing

-

Braintrust: The end-to-end platform for building world-class AI apps.

Braintrust provides an end-to-end platform for developing, evaluating, and monitoring Large Language Model (LLM) applications. It helps teams build robust AI products through iterative workflows and real-time analysis.

- Freemium

- From $249

-

Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249

-

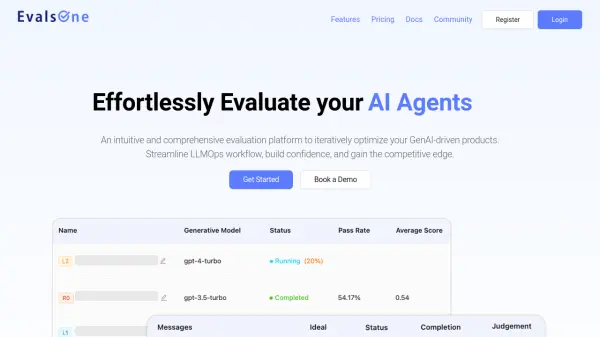

EvalsOne: Evaluate LLMs & RAG Pipelines Quickly

EvalsOne is a platform for rapidly evaluating Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines using various metrics.

- Freemium

- From $19

-

Scorecard.io: Testing for production-ready LLM applications, RAG systems, Agents, Chatbots.

Scorecard.io is an evaluation platform designed for testing and validating production-ready Generative AI applications, including LLMs, RAG systems, agents, and chatbots. It supports the entire AI production lifecycle from experiment design to continuous evaluation.

- Contact for Pricing

-

Parea: Test and Evaluate your AI systems

Parea is a platform for testing, evaluating, and monitoring Large Language Model (LLM) applications, helping teams track experiments, collect human feedback, and deploy prompts confidently.

- Freemium

- From $150
