Top AI tools for hardware acceleration

Qualcomm AI Hub

The platform for on-device AI: Any model, any device, any runtime. Deploy within minutes.

Qualcomm AI Hub is a comprehensive platform for deploying and optimizing AI models on Qualcomm devices. It integrates with a range of runtimes and supports multiple industries, including mobile, compute, automotive, and IoT.

- Contact for Pricing

GEMESYS

Next-generation edge AI hardware inspired by the human brain

GEMESYS is developing a fully analog AI chip that brings both training and inference to edge devices. Its design is inspired by the architecture of the human brain and aims to overcome current computing bottlenecks.

- Contact for Pricing

Taalas

Transforming AI Models into Custom Silicon

Taalas offers a platform that converts any AI model into custom silicon, producing what it calls Hardcore Models, which the company claims are 1000x more efficient than software.

- Other

-

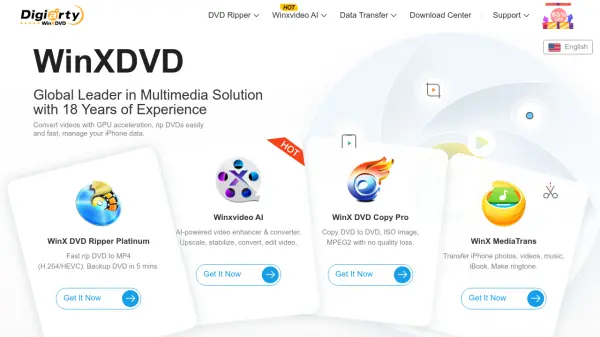

WinXDVD

Global Leader in Multimedia Solutions with 18 Years of Experience

WinXDVD offers a suite of multimedia software, including AI-powered video and image enhancement, DVD ripping, and data transfer tools, and uses GPU acceleration to deliver fast, high-quality results.

- Paid

Fractile

Run the World's Largest Language Models 100x Faster

Fractile is developing hardware to significantly accelerate AI inference. Its technology aims to eliminate memory bottlenecks so that large language models run much faster and at lower cost.

- Contact for Pricing

Groq

Fast AI Inference for Openly Available Models

Groq provides high-speed AI inference services for leading openly available large language models (LLMs), automatic speech recognition (ASR) models, and vision models via its GroqCloud™ platform.

- Usage Based

ONNX (Open Neural Network Exchange)

The open standard for machine learning interoperability.

ONNX is an open format for representing machine learning models, enabling interoperability between different AI frameworks, tools, runtimes, and compilers.

- Free

NeuReality

Purpose-Built Solutions for Deploying and Scaling AI Inference Workflows

NeuReality provides AI-centric solutions designed to simplify the deployment and scaling of AI inference workflows and to overcome traditional system bottlenecks.

- Contact for Pricing

CLIKA

Compress and Win with Smaller, Faster AI

CLIKA provides an AI model compression engine that optimizes models for speed, size, and hardware deployment, reducing memory footprint and improving performance.

- Freemium

Waydroid

Run a Full Android System on Linux with Seamless Integration

Waydroid enables users to run a complete Android operating system in a container on Wayland-based GNU/Linux desktops, offering near-native performance and full integration of Android apps alongside Linux applications.

- Free
