MCP Alchemy

Directly connect Claude Desktop to SQL databases for expert AI-powered analytics.


Status: Works great and is in daily use without any known bugs.

Status2: I just added the package to PyPI and updated the usage instructions. Please report any issues :)

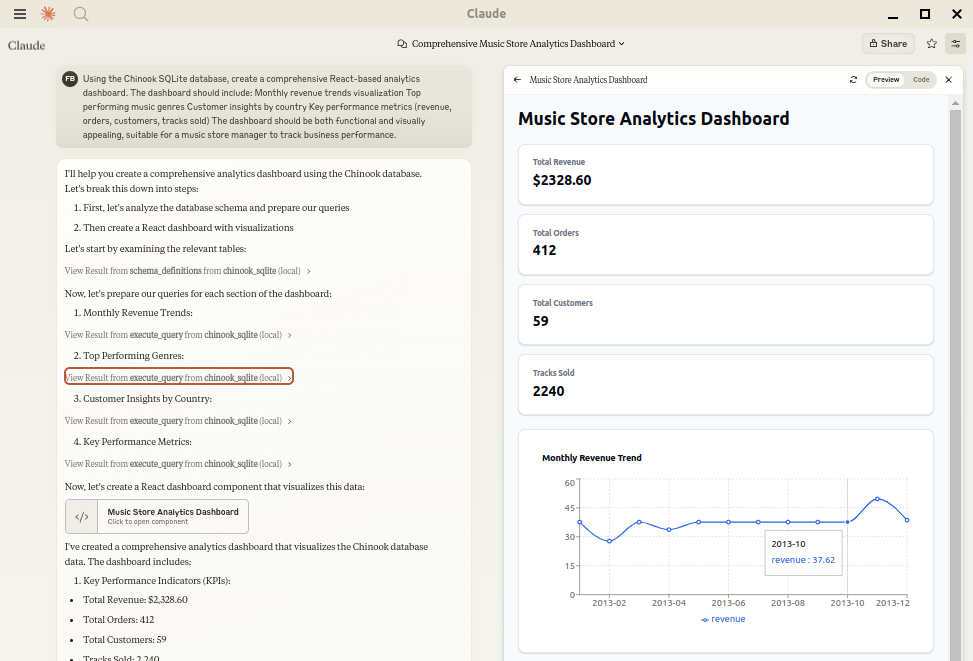

Let Claude be your database expert! MCP Alchemy connects Claude Desktop directly to your databases, allowing it to:

- Help you explore and understand your database structure

- Assist in writing and validating SQL queries

- Display relationships between tables

- Analyze large datasets and create reports

- Analyze and create artifacts for very large datasets using claude-local-files

Works with PostgreSQL, MySQL, MariaDB, SQLite, Oracle, MS SQL Server, CrateDB, Vertica, and a host of other SQLAlchemy-compatible databases.

Installation

Ensure you have uv installed:

# Install uv if you haven't already
curl -LsSf https://astral.sh/uv/install.sh | sh

Usage with Claude Desktop

Add one of the following entries to your claude_desktop_config.json. Pass the appropriate database driver package with the --with parameter (SQLite needs none, since it is built into Python).

Note: After a new version is released, uv may raise a versioning error for up to 600 seconds while its local cache expires. Restarting the MCP client once more resolves the error.

SQLite (built into Python)

{
  "mcpServers": {
    "my_sqlite_db": {
      "command": "uvx",
      "args": ["--from", "mcp-alchemy==2025.8.15.91819",
               "--refresh-package", "mcp-alchemy", "mcp-alchemy"],
      "env": {
        "DB_URL": "sqlite:////absolute/path/to/database.db"
      }
    }
  }
}
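The four slashes in the SQLite URL are the sqlite:/// prefix followed by the leading / of an absolute path. As a small illustration, the hypothetical helper sqlite_url below (not part of MCP Alchemy) builds such a URL from a filesystem path using only the Python standard library; the expected output assumes a POSIX system.

```python
import os


def sqlite_url(path: str) -> str:
    """Return an SQLAlchemy-style SQLite URL for a database file.

    Hypothetical helper for illustration; it is not part of MCP Alchemy.
    """
    # abspath() makes the path absolute without following symlinks. On POSIX
    # systems the result starts with "/", which supplies the fourth slash.
    return "sqlite:///" + os.path.abspath(path)


print(sqlite_url("/absolute/path/to/database.db"))
# sqlite:////absolute/path/to/database.db  (on POSIX systems)
```

A relative path such as "data.db" is resolved against the current working directory first, which avoids depending on the directory Claude Desktop happens to launch the server from.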

PostgreSQL

{
  "mcpServers": {
    "my_postgres_db": {
      "command": "uvx",
      "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "psycopg2-binary",
               "--refresh-package", "mcp-alchemy", "mcp-alchemy"],
      "env": {
        "DB_URL": "postgresql://user:password@localhost/dbname"
      }
    }
  }
}

MySQL/MariaDB

{
  "mcpServers": {
    "my_mysql_db": {
      "command": "uvx",
      "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "pymysql",
               "--refresh-package", "mcp-alchemy", "mcp-alchemy"],
      "env": {
        "DB_URL": "mysql+pymysql://user:password@localhost/dbname"
      }
    }
  }
}

Microsoft SQL Server

{
  "mcpServers": {
    "my_mssql_db": {
      "command": "uvx",
      "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "pymssql",
               "--refresh-package", "mcp-alchemy", "mcp-alchemy"],
      "env": {
        "DB_URL": "mssql+pymssql://user:password@localhost/dbname"
      }
    }
  }
}

Oracle

{
  "mcpServers": {
    "my_oracle_db": {
      "command": "uvx",
      "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "oracledb",
               "--refresh-package", "mcp-alchemy", "mcp-alchemy"],
      "env": {
        "DB_URL": "oracle+oracledb://user:password@localhost/dbname"
      }
    }
  }
}

CrateDB

{
  "mcpServers": {
    "my_cratedb": {
      "command": "uvx",
      "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "sqlalchemy-cratedb>=0.42.0.dev1",
               "--refresh-package", "mcp-alchemy", "mcp-alchemy"],
      "env": {
        "DB_URL": "crate://user:password@localhost:4200/?schema=testdrive"
      }
    }
  }
}

To connect to CrateDB Cloud, use a URL like:

crate://user:password@example.aks1.westeurope.azure.cratedb.net:4200?ssl=true

Vertica

{
  "mcpServers": {
    "my_vertica_db": {
      "command": "uvx",
      "args": ["--from", "mcp-alchemy==2025.8.15.91819", "--with", "vertica-python",
               "--refresh-package", "mcp-alchemy", "mcp-alchemy"],
      "env": {
        "DB_URL": "vertica+vertica_python://user:password@localhost:5433/dbname",
        "DB_ENGINE_OPTIONS": "{\"connect_args\": {\"ssl\": false}}"
      }
    }
  }
}
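All of the entries above share one shape and differ only in the server name, the optional --with driver and the env block. The Python sketch below (the function server_entry is hypothetical, written for illustration and not shipped with MCP Alchemy) assembles such an entry with the json module. It also shows why DB_ENGINE_OPTIONS appears with escaped quotes: the option is a JSON string nested inside the JSON config, so it gets encoded twice.

```python
import json


def server_entry(db_url, driver=None, engine_options=None,
                 version="2025.8.15.91819"):
    """Build one "mcpServers" entry shaped like the examples above (hypothetical helper)."""
    args = ["--from", f"mcp-alchemy=={version}"]
    if driver:
        # The database driver is added to the uvx environment with --with.
        args += ["--with", driver]
    args += ["--refresh-package", "mcp-alchemy", "mcp-alchemy"]
    env = {"DB_URL": db_url}
    if engine_options is not None:
        # DB_ENGINE_OPTIONS is itself a JSON string: it is encoded once here
        # and its quotes are escaped again when the whole config is dumped.
        env["DB_ENGINE_OPTIONS"] = json.dumps(engine_options)
    return {"command": "uvx", "args": args, "env": env}


config = {
    "mcpServers": {
        "my_vertica_db": server_entry(
            "vertica+vertica_python://user:password@localhost:5433/dbname",
            driver="vertica-python",
            engine_options={"connect_args": {"ssl": False}},
        )
    }
}
print(json.dumps(config, indent=2))
```

Generating the nested string with json.dumps rather than typing the backslashes by hand avoids the easy mistake of an unescaped quote breaking the whole config file.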

Environment Variables

- DB_URL: SQLAlchemy database URL (required)
- CLAUDE_LOCAL_FILES_PATH: Directory for full result sets (optional)
- EXECUTE_QUERY_MAX_CHARS: Maximum output length (optional, default 4000)
- DB_ENGINE_OPTIONS: JSON string containing additional SQLAlchemy engine options (optional)
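Because DB_URL is parsed as a URL, a password containing characters such as @, : or / has to be percent-encoded, otherwise those characters are read as delimiters. SQLAlchemy's documentation recommends urllib.parse.quote_plus for this. A minimal sketch, where build_db_url is a hypothetical helper rather than part of MCP Alchemy:

```python
from urllib.parse import quote_plus, unquote_plus, urlsplit


def build_db_url(scheme, user, password, host, dbname, port=None):
    """Assemble a DB_URL with the credentials percent-encoded (hypothetical helper)."""
    # quote_plus() encodes "@", ":" and "/" so they cannot be mistaken for
    # the user/password, password/host or host/database separators.
    netloc = f"{quote_plus(user)}:{quote_plus(password)}@{host}"
    if port is not None:
        netloc += f":{port}"
    return f"{scheme}://{netloc}/{dbname}"


url = build_db_url("postgresql", "user", "p@ss:w/rd", "localhost", "dbname")
print(url)  # postgresql://user:p%40ss%3Aw%2Frd@localhost/dbname

# The host is still parsed correctly and the password round-trips.
parts = urlsplit(url)
assert parts.hostname == "localhost"
assert unquote_plus(parts.password) == "p@ss:w/rd"
```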

Connection Pooling

MCP Alchemy uses connection pooling optimized for long-running MCP servers. The default settings are:

- pool_pre_ping=True: Tests connections before use to handle database timeouts and network issues
- pool_size=1: Maintains 1 persistent connection (MCP servers typically handle one request at a time)
- max_overflow=2: Allows up to 2 additional connections for burst capacity
- pool_recycle=3600: Refreshes connections older than 1 hour (prevents timeout issues)
- isolation_level='AUTOCOMMIT': Ensures each query commits automatically

These defaults work well for most databases, but you can override them via DB_ENGINE_OPTIONS:

{
  "DB_ENGINE_OPTIONS": "{\"pool_size\": 5, \"max_overflow\": 10, \"pool_recycle\": 1800}"
}

For databases that close idle connections on the server side (such as MySQL, whose wait_timeout defaults to 8 hours), the combination of pool_pre_ping and pool_recycle keeps connections reliable.
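Conceptually, DB_ENGINE_OPTIONS is laid over the defaults listed above before the engine is created. The sketch below assumes that keys from DB_ENGINE_OPTIONS simply replace defaults of the same name; the function name engine_options is hypothetical and this is not MCP Alchemy's actual code. It uses only the standard library, so the final SQLAlchemy create_engine call appears as a comment.

```python
import json
import os

# Defaults as listed above, written out here for illustration only.
DEFAULT_ENGINE_OPTIONS = {
    "pool_pre_ping": True,            # test each connection before use
    "pool_size": 1,                   # one persistent connection
    "max_overflow": 2,                # so at most 1 + 2 = 3 connections at once
    "pool_recycle": 3600,             # replace connections older than an hour
    "isolation_level": "AUTOCOMMIT",  # every statement commits on its own
}


def engine_options(environ=os.environ):
    """Merge DB_ENGINE_OPTIONS over the defaults (assumption: user keys win)."""
    raw = environ.get("DB_ENGINE_OPTIONS", "")
    overrides = json.loads(raw) if raw.strip() else {}
    if not isinstance(overrides, dict):
        raise ValueError("DB_ENGINE_OPTIONS must be a JSON object")
    return {**DEFAULT_ENGINE_OPTIONS, **overrides}


opts = engine_options(
    {"DB_ENGINE_OPTIONS": '{"pool_size": 5, "max_overflow": 10, "pool_recycle": 1800}'}
)
print(opts)
# {'pool_pre_ping': True, 'pool_size': 5, 'max_overflow': 10, 'pool_recycle': 1800, 'isolation_level': 'AUTOCOMMIT'}
# With SQLAlchemy installed, the merged options would be passed on as:
#   engine = sqlalchemy.create_engine(db_url, **opts)
```

Keys that are not overridden, such as pool_pre_ping here, keep their default values, so a partial DB_ENGINE_OPTIONS only changes what it names.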

API

Tools

- all_table_names
  - Return all table names in the database
  - No input required
  - Returns comma-separated list of tables

  users, orders, products, categories

- filter_table_names
  - Find tables matching a substring
  - Input: q (string)
  - Returns matching table names

  Input: "user"
  Returns: "users, user_roles, user_permissions"

- schema_definitions
  - Get detailed schema for specified tables
  - Input: table_names (string[])
  - Returns table definitions including:
    - Column names and types
    - Primary keys
    - Foreign key relationships
    - Nullable flags

  users:
      id: INTEGER, primary key, autoincrement
      email: VARCHAR(255), nullable
      created_at: DATETIME

      Relationships:
        id -> orders.user_id

- execute_query
  - Execute SQL query with vertical output format
  - Inputs:
    - query (string): SQL query
    - params (object, optional): Query parameters
  - Returns results in clean vertical format:

  1. row
  id: 123
  name: John Doe
  created_at: 2024-03-15T14:30:00
  email: NULL

  Result: 1 rows

  - Features:
    - Smart truncation of large results
    - Full result set access via claude-local-files integration
    - Clean NULL value display
    - ISO formatted dates
    - Clear row separation
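To make the tool descriptions above concrete, here is a rough stand-in for all_table_names, filter_table_names and the vertical output of execute_query. MCP Alchemy itself works through SQLAlchemy; this sketch uses only the standard library's sqlite3 (and SQLite's sqlite_master catalog) so it runs anywhere. The function names mirror the tools, but the bodies are an illustrative approximation rather than the project's code, and the exact spacing of the real output may differ.

```python
import sqlite3
from datetime import date, time


def _table_names(conn):
    # sqlite_master is SQLite's catalog table; other databases expose this differently.
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return [name for (name,) in rows]


def all_table_names(conn):
    """Comma-separated list of every table."""
    return ", ".join(_table_names(conn))


def filter_table_names(conn, q):
    """Comma-separated list of tables whose name contains q."""
    return ", ".join(name for name in _table_names(conn) if q in name)


def format_vertical(cursor):
    """Render a result set as numbered "column: value" blocks."""
    columns = [d[0] for d in cursor.description]
    lines = []
    count = 0
    for count, row in enumerate(cursor, start=1):
        lines.append(f"{count}. row")
        for column, value in zip(columns, row):
            if value is None:
                value = "NULL"  # make missing values explicit
            elif isinstance(value, (date, time)):
                value = value.isoformat()  # datetime is a subclass of date
            lines.append(f"{column}: {value}")
        lines.append("")  # blank line between rows
    lines.append(f"Result: {count} rows")
    return "\n".join(lines)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, created_at TEXT, email TEXT);
    CREATE TABLE user_roles (user_id INTEGER, role TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER);
    INSERT INTO users VALUES (123, 'John Doe', '2024-03-15T14:30:00', NULL);
""")
print(all_table_names(conn))             # orders, user_roles, users
print(filter_table_names(conn, "user"))  # user_roles, users
# Named parameters play the role of the tool's optional params object.
cursor = conn.execute("SELECT * FROM users WHERE id = :id", {"id": 123})
print(format_vertical(cursor))
```

Passing values through params instead of formatting them into the SQL string lets the driver handle quoting, which is the usual reason to prefer a parameters object.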

Claude Local Files

When claude-local-files is configured:

- Access complete result sets beyond Claude's context window

- Generate detailed reports and visualizations

- Perform deep analysis on large datasets

- Export results for further processing

The integration automatically activates when CLAUDE_LOCAL_FILES_PATH is set.
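The README does not spell out how truncation and the claude-local-files hand-off work internally. The following is a minimal sketch under stated assumptions: output longer than EXECUTE_QUERY_MAX_CHARS is cut, and if CLAUDE_LOCAL_FILES_PATH is set, the full text is first saved to a file in that directory. The function names, the file naming scheme and the truncation message are all hypothetical.

```python
import hashlib
import os
import tempfile
from pathlib import Path


def settings_from_env(environ=os.environ):
    """Read the two settings named in the README (defaults as documented there)."""
    max_chars = int(environ.get("EXECUTE_QUERY_MAX_CHARS", "4000"))
    local_files_path = environ.get("CLAUDE_LOCAL_FILES_PATH")  # None if unset
    return max_chars, local_files_path


def truncate_with_spill(text, max_chars=4000, local_files_path=None):
    """Cut long output; if a directory is given, save the full text there first."""
    if len(text) <= max_chars:
        return text
    note = ""
    if local_files_path:
        directory = Path(local_files_path)
        directory.mkdir(parents=True, exist_ok=True)
        # Hypothetical content-addressed name: identical results reuse one file.
        name = hashlib.sha256(text.encode("utf-8")).hexdigest()[:16] + ".txt"
        path = directory / name
        path.write_text(text, encoding="utf-8")
        note = f"\nFull result set saved to {path}"
    return text[:max_chars] + "\n... (output truncated)" + note


print(settings_from_env({}))  # (4000, None)

big = "\n".join(f"{i}. row\nid: {i}\n" for i in range(1, 1001))
with tempfile.TemporaryDirectory() as tmp:
    print(truncate_with_spill(big, max_chars=120, local_files_path=tmp))
```

Keeping the truncated text in the chat while the complete result lives on disk is what lets Claude work with result sets larger than its context window.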

Developing

First clone the GitHub repository, then install the dependencies and your database driver(s) of choice:

git clone git@github.com:runekaagaard/mcp-alchemy.git
cd mcp-alchemy
uv sync
uv pip install psycopg2-binary

Then set this in claude_desktop_config.json:

...
"command": "uv",
"args": ["run", "--directory", "/path/to/mcp-alchemy", "-m", "mcp_alchemy.server", "main"],
...

My Other LLM Projects

- MCP Redmine - Let Claude Desktop manage your Redmine projects and issues.

- MCP Notmuch Sendmail - Email assistant for Claude Desktop using notmuch.

- Diffpilot - Multi-column git diff viewer with file grouping and tagging.

- Claude Local Files - Access local files in Claude Desktop artifacts.

MCP Directory Listings

MCP Alchemy is listed in the following MCP directory sites and repositories:

Contributing

Contributions are warmly welcomed! Whether it's bug reports, feature requests, documentation improvements, or code contributions, all input is valuable. Feel free to:

- Open an issue to report bugs or suggest features

- Submit pull requests with improvements

- Enhance documentation or share your usage examples

- Ask questions and share your experiences

The goal is to make database interaction with Claude even better, and your insights and contributions help achieve that.

License

Mozilla Public License Version 2.0


Related MCPs

Discover similar Model Context Protocol servers

Alkemi MCP Server

Integrate Alkemi Data sources with MCP Clients for seamless, standardized data querying.

Alkemi MCP Server provides a STDIO wrapper for connecting Alkemi data sources—including Snowflake, Google BigQuery, and Databricks—with MCP Clients using the Model Context Protocol. It facilitates context sharing, database metadata management, and query generation through a standardized protocol endpoint. Shared MCP Servers allow teams to maintain consistent, high-quality data querying capabilities without needing to replicate schemas or query knowledge for each agent. Out-of-the-box integration with Claude Desktop and robust debugging tools are also included.

- ⭐ 2

- MCP

- alkemi-ai/alkemi-mcp

Multi-Database MCP Server (by Legion AI)

Unified multi-database access and AI interaction server with MCP integration.

Multi-Database MCP Server enables seamless access and querying of diverse databases via a unified API, with native support for the Model Context Protocol (MCP). It supports popular databases such as PostgreSQL, MySQL, SQL Server, and more, and is built for integration with AI assistants and agents. Leveraging the MCP Python SDK, it exposes databases as resources, tools, and prompts for intelligent, context-aware interactions, while delivering zero-configuration schema discovery and secure credential management.

- ⭐ 76

- MCP

- TheRaLabs/legion-mcp

MCP 数据库工具 (MCP Database Utilities)

A secure bridge enabling AI systems safe, read-only access to multiple databases via unified configuration.

MCP Database Utilities provides a secure, standardized service for AI systems to access and analyze databases like SQLite, MySQL, and PostgreSQL using a unified YAML-based configuration. It enforces strict read-only operations, local processing, and credential protection to ensure data privacy and integrity. The tool suits organizations focused on data privacy and minimizes risk by isolating database connections and masking sensitive data. Designed for easy integration, it supports multiple installation options and advanced capabilities such as schema analysis and table browsing.

- ⭐ 85

- MCP

- donghao1393/mcp-dbutils

mcp-server-sql-analyzer

MCP server for SQL analysis, linting, and dialect conversion.

Provides standardized MCP server capabilities for analyzing, linting, and converting SQL queries across multiple dialects using SQLGlot. Supports syntactic validation, dialect transpilation, extraction of table and column references, and offers tools for understanding query structures. Facilitates seamless workflow integration with AI assistants through a set of MCP tools.

- ⭐ 26

- MCP

- j4c0bs/mcp-server-sql-analyzer

XiYan MCP Server

A server enabling natural language queries to SQL databases via the Model Context Protocol.

XiYan MCP Server is a Model Context Protocol (MCP) compliant server that allows users to query SQL databases such as MySQL and PostgreSQL using natural language. It leverages the XiYanSQL model, providing state-of-the-art text-to-SQL translation and supports both general LLMs and local deployment for enhanced security. The server lists available database tables as resources and can read table contents, making it simple to integrate with different applications.

- ⭐ 218

- MCP

- XGenerationLab/xiyan_mcp_server

SkySQL MCP Server

Serverless MariaDB database management with AI-powered agents via Model Context Protocol.

SkySQL MCP Server implements the Model Context Protocol to provide a robust interface for launching and managing serverless MariaDB and MySQL database instances. It offers capabilities to interact with AI-powered agents for intelligent database operations, execute SQL queries, and manage credentials and security settings. Integration with tools like MCP CLI, Smithery.ai, and Cursor.sh is supported for interactive usage. Designed for efficiency and scalability, it enables streamlined database workflows with advanced AI assistance.

- ⭐ 2

- MCP

- skysqlinc/skysql-mcp
