video-editing-mcp

MCP server for uploading, editing, searching, and generating videos via Video Jungle and LLMs.


Video Editor MCP server

See a demo here: https://www.youtube.com/watch?v=KG6TMLD8GmA

Upload, edit, search, and generate videos from everyone's favorite LLM and Video Jungle.

To use this tool, you'll need to sign up for a Video Jungle account and add your API key.

Components

Resources

The server implements an interface to upload, generate, and edit videos with:

- Custom vj:// URI scheme for accessing individual videos and projects

- Each project resource has a name and a description

- Search results are returned with metadata about what appears in each video and when, so edits can be generated directly from them
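The README doesn't spell out how vj:// URIs are laid out. As a sketch only, assuming a URI takes the hypothetical form vj://<kind>/<id> (for example vj://video-file/abc123 or vj://project/xyz), a client could split one with the standard library like this. The kind names and the VjResource type are illustrative, not the server's actual schema.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class VjResource:
    kind: str          # hypothetical, e.g. "video-file" or "project"
    resource_id: str


def parse_vj_uri(uri: str) -> VjResource:
    """Split a vj:// URI into its kind and id.

    Assumes the hypothetical layout vj://<kind>/<id>; the real server
    may structure its URIs differently.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "vj":
        raise ValueError(f"not a vj:// URI: {uri!r}")
    # urlparse puts the segment right after "//" into netloc.
    kind = parsed.netloc
    resource_id = parsed.path.lstrip("/")
    if not kind or not resource_id:
        raise ValueError(f"expected vj://<kind>/<id>, got {uri!r}")
    return VjResource(kind=kind, resource_id=resource_id)


print(parse_vj_uri("vj://video-file/abc123"))
# → VjResource(kind='video-file', resource_id='abc123')
```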

Prompts

Coming soon.

Tools

The server implements a few tools:

- add-video
  - Adds a video file for analysis from a URL. Returns a vj:// URI that references the video file.
- create-videojungle-project
  - Creates a Video Jungle project to contain generative scripts, analyzed videos, and images for video edit generation.
- edit-locally
  - Creates an OpenTimelineIO project and downloads it to your machine to open in a DaVinci Resolve Studio instance. (Resolve Studio must already be running before you call this tool.)
- generate-edit-from-videos
  - Generates a rendered video edit from a set of video files.
- generate-edit-from-single-video
  - Generates an edit from a single input video file.
- get-project-assets
  - Gets the assets within a project for video edit generation.
- search-videos
  - Returns video matches based on embeddings and keywords.
- update-video-edit
  - Live-updates a video edit's information. If Video Jungle is open, the edit is updated in real time.
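MCP clients such as Claude Desktop invoke these tools by sending a JSON-RPC 2.0 `tools/call` request to the server. The sketch below builds one by hand to show the shape of the message. The argument names `url` and `name` for add-video are assumptions for illustration; the real parameter names come from the input schema the server reports through `tools/list`.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_ids = count(1)


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a single-line JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    # json.dumps emits no newlines by default, which suits line-delimited stdio.
    return json.dumps(message)


# Hypothetical arguments for add-video; check tools/list for the real schema.
print(tools_call_request(
    "add-video",
    {"url": "https://www.youtube.com/shorts/RumgYaH5XYw", "name": "fly traps"},
))
```

Over the stdio transport each message is written to the server's stdin as one line; in practice an MCP client library does this for you.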

Using Tools in Practice

To use these tools, you'll need a Video Jungle account and your API key.

add-video

Here's an example prompt to invoke the add-video tool:

can you download the video at https://www.youtube.com/shorts/RumgYaH5XYw and name it fly traps?

This downloads the video from the URL, adds it to your library, and analyzes it for later retrieval. Analysis is multimodal, so you can query both the audio and the visual content.

search-videos

Once a video has been downloaded and analyzed, you can query it with the search-videos tool:

can you search my videos for fly traps?

Search results contain relevant metadata for generating a video edit according to details discovered in the initial analysis.
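The tool list says search-videos matches on embeddings and keywords, but the server's actual scoring isn't documented here. Purely as an illustration of that general idea, the toy below blends cosine similarity between embedding vectors with a keyword-overlap score. The Segment record, the weight `alpha`, and the tiny hand-made vectors are all assumptions, not Video Jungle's implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    # Hypothetical record for one slice of an analyzed video.
    video_id: str
    start: float  # seconds
    end: float    # seconds
    transcript: str
    embedding: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query words that appear in the text."""
    words = query.lower().split()
    present = set(text.lower().split())
    return sum(w in present for w in words) / len(words) if words else 0.0


def hybrid_search(query, query_vec, segments, alpha=0.5, top_k=3):
    """Rank segments by alpha * cosine + (1 - alpha) * keyword overlap."""
    scored = [
        (alpha * cosine(query_vec, s.embedding)
         + (1 - alpha) * keyword_score(query, s.transcript), s)
        for s in segments
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


segments = [
    Segment("v1", 0.0, 4.5, "this fly trap snaps shut", [0.9, 0.1]),
    Segment("v1", 4.5, 9.0, "watering the garden", [0.1, 0.9]),
]
for score, seg in hybrid_search("fly trap", [1.0, 0.0], segments):
    print(f"{score:.2f} {seg.video_id} {seg.start}-{seg.end}s")
```

Blending the two signals lets an exact phrase like "fly trap" rank highly even when the embedding match is weak, and vice versa.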

search-local-videos

You must set the environment variable LOAD_PHOTOS_DB=1 to use this tool, because it makes Claude request access to files on your local machine.

Once that's done, you can search through your Photos app for videos that exist on your phone, using Apple's tags.

In my case, when I search for "Skateboard", I get 1903 video files.

can you search my local video files for Skateboard?

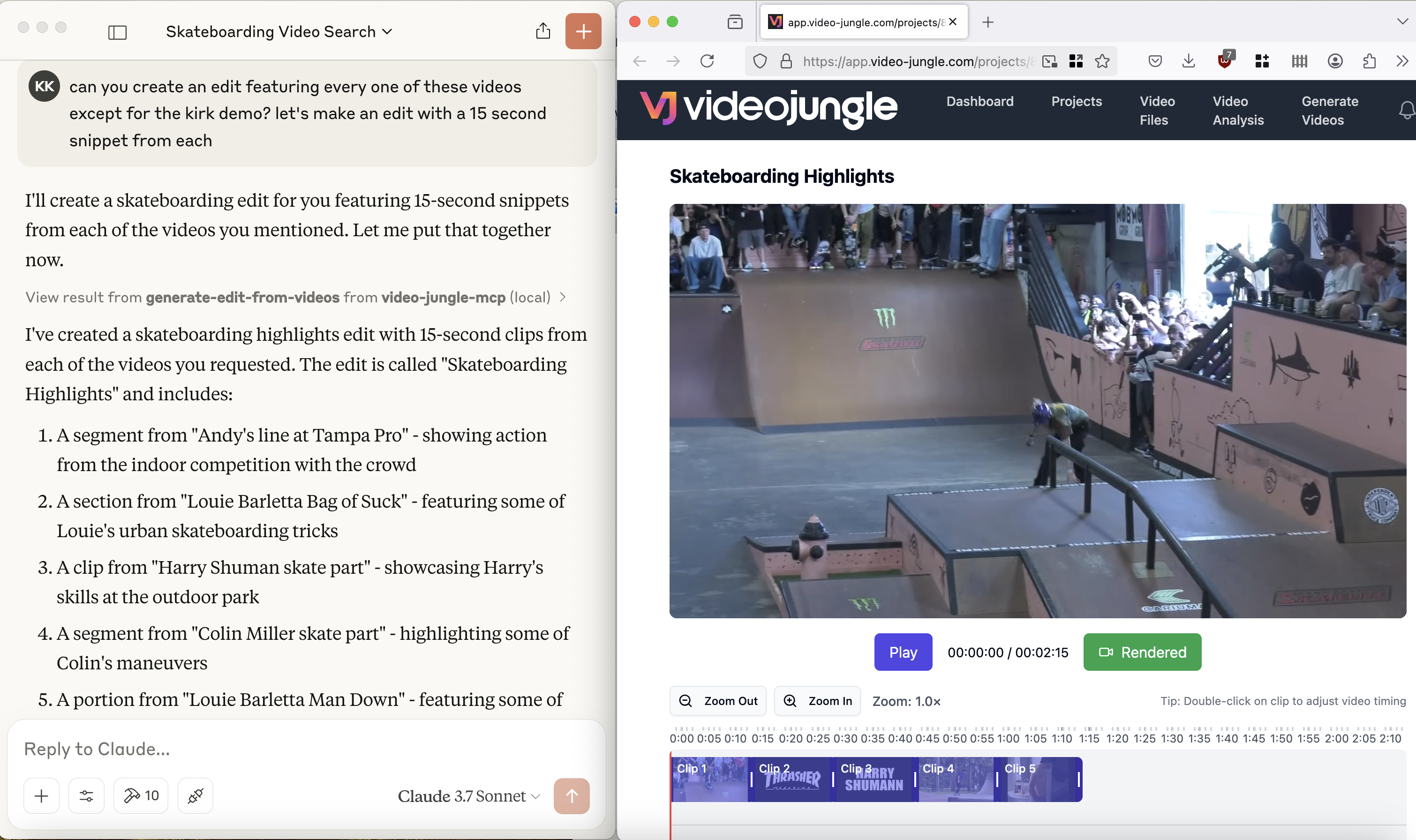
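The Photos search is opt-in through the LOAD_PHOTOS_DB environment variable. A minimal sketch of how a server could gate an optional tool on such a flag follows; `photos_search_enabled` and `available_tools` are hypothetical names, and this is not the project's actual code.

```python
import os
from collections.abc import Mapping


def photos_search_enabled(environ: Mapping[str, str] = os.environ) -> bool:
    """Return True only when LOAD_PHOTOS_DB is set to exactly "1"."""
    return environ.get("LOAD_PHOTOS_DB") == "1"


def available_tools(environ: Mapping[str, str] = os.environ) -> list[str]:
    # A subset of the tools listed in this README, for illustration.
    tools = ["add-video", "search-videos", "generate-edit-from-videos"]
    if photos_search_enabled(environ):
        # Only advertise the local Photos tool when the user has opted in.
        tools.append("search-local-videos")
    return tools


print(available_tools({"LOAD_PHOTOS_DB": "1"}))
```

Checking for the exact string "1" matches the value used in the configuration examples in this README.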

generate-edit-from-videos

Finally, you can use these search results to generate an edit:

can you create an edit of all the times the video says "fly trap"?

Currently, the video edit tool relies on the context of the current chat (for example, the search results from earlier in the conversation).
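Because the edit tool works from what is already in the chat, the search results effectively become the edit plan. As a rough, hypothetical illustration (the `video_id`, `start`, and `end` fields are assumptions, not the server's schema), matching segments could be turned into an ordered clip list, merging overlapping or nearly adjacent ranges from the same video so the cut doesn't stutter:

```python
from dataclasses import dataclass


@dataclass
class Clip:
    video_id: str
    start: float  # seconds
    end: float    # seconds


def build_edit(hits: list[dict], gap: float = 0.5) -> list[Clip]:
    """Turn search hits into clips, ordered by video and then start time.

    `hits` is a hypothetical list of {"video_id", "start", "end"} dicts.
    Ranges from the same video that overlap, or are separated by at most
    `gap` seconds, are merged into a single clip.
    """
    ordered = sorted(hits, key=lambda h: (h["video_id"], h["start"]))
    clips: list[Clip] = []
    for hit in ordered:
        last = clips[-1] if clips else None
        if last and last.video_id == hit["video_id"] and hit["start"] - last.end <= gap:
            last.end = max(last.end, hit["end"])  # extend the previous clip
        else:
            clips.append(Clip(hit["video_id"], hit["start"], hit["end"]))
    return clips


hits = [
    {"video_id": "fly-traps", "start": 12.0, "end": 13.2},
    {"video_id": "fly-traps", "start": 3.0, "end": 4.1},
    {"video_id": "fly-traps", "start": 4.3, "end": 5.0},
]
for clip in build_edit(hits):
    print(clip)
```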

generate-edit-from-single-video

You can also cut down an edit from a single existing video:

can you create an edit of all the times this video says the word "fly trap"?

Configuration

Log in to your Video Jungle settings page and copy your API key. Then use it to start the Video Jungle MCP server:

$ uv run video-editor-mcp YOURAPIKEY

To allow this MCP server to search your Photos app on macOS:

$ LOAD_PHOTOS_DB=1 uv run video-editor-mcp YOURAPIKEY
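If you launch the server from a script (for example, a test harness), the two commands above differ only in the LOAD_PHOTOS_DB variable. This sketch assembles the argument list and environment with the standard library; `build_launch` and `start_server` are hypothetical helpers, and actually starting the process requires uv to be installed.

```python
import os
import subprocess


def build_launch(api_key: str, photos: bool = False) -> tuple[list[str], dict[str, str]]:
    """Return (argv, env) for `uv run video-editor-mcp <API key>`."""
    argv = ["uv", "run", "video-editor-mcp", api_key]
    env = dict(os.environ)  # inherit PATH etc. so `uv` can be found
    if photos:
        env["LOAD_PHOTOS_DB"] = "1"
    else:
        env.pop("LOAD_PHOTOS_DB", None)
    return argv, env


def start_server(api_key: str, photos: bool = False) -> subprocess.Popen:
    # MCP servers communicate over stdio, so keep stdin/stdout as pipes.
    argv, env = build_launch(api_key, photos)
    return subprocess.Popen(argv, env=env, stdin=subprocess.PIPE, stdout=subprocess.PIPE)
```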

Quickstart

Install

Installing via Smithery

To install Video Editor for Claude Desktop automatically via Smithery:

npx -y @smithery/cli install video-editor-mcp --client claude

Claude Desktop

You'll need to adjust your claude_desktop_config.json manually:

On macOS: ~/Library/Application\ Support/Claude/claude_desktop_config.json

On Windows: %APPDATA%/Claude/claude_desktop_config.json

Using the published package:

{
  "mcpServers": {
    "video-editor-mcp": {
      "command": "uvx",
      "args": [
        "video-editor-mcp",
        "YOURAPIKEY"
      ]
    }
  }
}

Running from a local clone of the repository:

{
  "mcpServers": {
    "video-editor-mcp": {
      "command": "uv",
      "args": [
        "--directory",
        "/Users/YOURDIRECTORY/video-editor-mcp",
        "run",
        "video-editor-mcp",
        "YOURAPIKEY"
      ]
    }
  }
}

With local Photos app access enabled (lets the server search your Photos app):

{
  "mcpServers": {
    "video-jungle-mcp": {
      "command": "uv",
      "args": [
        "--directory",
        "/Users/<PATH_TO>/video-jungle-mcp",
        "run",
        "video-editor-mcp",
        "<YOURAPIKEY>"
      ],
      "env": {
        "LOAD_PHOTOS_DB": "1"
      }
    }
  }
}

Be sure to replace the directory paths with the location of the repository on your computer, and the API key placeholder with your Video Jungle API key.
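Instead of hand-editing the file, you can merge the entry with a short script. The sketch below uses the file locations listed above (APPDATA on Windows, ~/Library/Application Support on macOS), keeps any servers you already have configured, and writes the uvx-style entry. `config_path` and `add_server` are illustrative helpers, not part of this project.

```python
import json
import os
import sys
from pathlib import Path


def config_path() -> Path:
    """Locate claude_desktop_config.json using the paths given in this README."""
    if sys.platform == "win32":
        return Path(os.environ["APPDATA"]) / "Claude" / "claude_desktop_config.json"
    # The README only covers macOS and Windows; other platforms fall back to the macOS path.
    return Path.home() / "Library" / "Application Support" / "Claude" / "claude_desktop_config.json"


def add_server(path: Path, api_key: str, photos: bool = False) -> dict:
    """Insert or replace the video-editor-mcp entry, preserving other servers."""
    config = json.loads(path.read_text()) if path.exists() else {}
    entry = {"command": "uvx", "args": ["video-editor-mcp", api_key]}
    if photos:
        entry["env"] = {"LOAD_PHOTOS_DB": "1"}
    config.setdefault("mcpServers", {})["video-editor-mcp"] = entry
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2))
    return config
```

For example, `add_server(config_path(), "YOURAPIKEY")` updates the real file. Claude Desktop reads this file at startup, so restart it afterwards.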

Development

Building and Publishing

To prepare the package for distribution:

- Sync dependencies and update lockfile:

uv sync

- Build package distributions:

uv build

This will create source and wheel distributions in the dist/ directory.

- Publish to PyPI:

uv publish

Note: You'll need to set PyPI credentials via environment variables or command flags:

- Token: --token or UV_PUBLISH_TOKEN

- Or username/password: --username/UV_PUBLISH_USERNAME and --password/UV_PUBLISH_PASSWORD
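To fail fast before an upload, a small pre-publish check can confirm that one of the credential options above is available. `publish_credentials_ok` is a hypothetical helper, not part of the project; it only inspects the environment variables that `uv publish` reads, so it doesn't account for credentials passed as command flags.

```python
import os
from collections.abc import Mapping


def publish_credentials_ok(environ: Mapping[str, str] = os.environ) -> bool:
    """True if a token, or both a username and a password, are set for uv publish."""
    if environ.get("UV_PUBLISH_TOKEN"):
        return True
    return bool(environ.get("UV_PUBLISH_USERNAME") and environ.get("UV_PUBLISH_PASSWORD"))
```

A release script might call `publish_credentials_ok()` and abort with a clear message before running `uv publish`.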

Debugging

Since MCP servers run over stdio, debugging can be challenging. For the best debugging experience, we strongly recommend using the MCP Inspector.

You can launch the MCP Inspector with npx using this command (replace YOURDIRECTORY with the directory this repo is in, and YOURAPIKEY with your Video Jungle API key, found on the settings page):

npx @modelcontextprotocol/inspector uv run --directory /Users/YOURDIRECTORY/video-editor-mcp video-editor-mcp YOURAPIKEY

Upon launching, the Inspector will display a URL that you can access in your browser to begin debugging.

Additionally, I've added logging to app.log in the project directory. To diagnose API calls, you can add your own log lines, for example:

logging.info("this is a test log")
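The README doesn't show how app.log is configured. A minimal sketch with the standard logging module, assuming you want INFO-level messages with timestamps appended to app.log through the root logger (which is what a bare `logging.info(...)` uses), could look like this; `setup_logging` and the format string are illustrative, not taken from the project.

```python
import logging


def setup_logging(path: str = "app.log") -> None:
    """Route root-logger records at INFO and above to `path`.

    A file is used instead of stdout because an stdio MCP server's stdout
    carries protocol messages, and stray output there corrupts the stream.
    """
    logging.basicConfig(
        filename=path,
        filemode="a",  # append, so an open `tail -f` keeps following the file
        level=logging.INFO,
        format="%(asctime)s %(levelname)s %(name)s: %(message)s",
        force=True,  # replace any previously configured root handlers (Python 3.8+)
    )
```

Call `setup_logging()` once at startup; after that, `logging.info("this is a test log")` lands in app.log.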

A reasonable way to follow along as you're working on the project is to open a terminal session and run:

$ tail -n 90 -f app.log
